Geoscience and Remote Sensing

The EuroSAT-SAR dataset is a SAR version of the EuroSAT dataset. We matched each Sentinel-2 image in EuroSAT with one Sentinel-1 patch according to the geospatial coordinates, ending up with 27,000 dual-pol Sentinel-1 SAR images divided in 10 classes. The EuroSAT-SAR dataset was collected as one downstream task in the work FG-MAE to serve as a CIFAR-like, clean, balanced ML-ready dataset for remote sensing SAR image recognition.

- Categories:

169 Views

169 Views

Optical remote sensing images, with their high spatial resolution and wide coverage, have emerged as invaluable tools for landslide analysis. Visual interpretation and manual delimitation of landslide areas in optical remote sensing images by human is labor intensive and inefficient. Automatic delimitation of landslide areas empowered by deep learning methods has drawn tremendous attention in recent years. Mask R-CNN and U-Net are the two most popular deep learning frameworks for image segmentation in computer vision.

- Categories:

202 Views

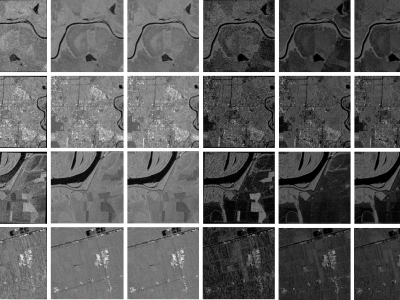

202 ViewsWhen training supervised deep learning models for despeckling SAR images, it is necessary to have a labeled dataset with pairs of images to be able to assess the quality of the filtering process. These pairs of images must be noisy and ground truth. The noisy images contain the speckle generated during the backscatter of the microwave signal, while the ground truth is generated through multitemporal fusion operations. In this paper, two operations are performed: mean and median.

- Categories:

745 Views

745 ViewsThe dataset contains the ground-based observations of crop growth stages for Canada's prairie provinces (Manitoba, Saskatchewan and Alberta) from 2019 to 2020. Crop growth stages were visually observed from the side of the fields on a weekly cycle until the fields were harvested. The BBCH (Biologische Bundesanstalt, Bundessortenamt und CHemische Industrie) scale was used to stage growth.

- Categories:

592 Views

592 ViewsThe dataset provides crop-type surveys for Canada's prairie provinces (Manitoba, Saskatchewan and Alberta) in 2020 and 2021. The data were collected via windshield survey(driving through the countryside with GPS-enabled data collection software and satellite imagery). Crop-type points and their geographic coordinates on the ground were gathered using data collection software. Field boundaries were identified on satellite imagery. A single observation point is dropped in a homogeneous area within the field.

- Categories:

335 Views

335 Views

The study focused on two regions in Rupnagar district, India, with an area of 216 km² as shown in Fig. 1a, using satellite data from June to November 2023. The upper region predominantly features paddy and maize, while the lower region includes paddy and sugarcane. Satellite images were obtained from PlanetScope’s 130-satellite constellation, with a spatial resolution of 3 meter. A total of 32 images, captured between late May and mid-November 2023, were used, all with less than 15% cloud cover.

- Categories:

196 Views

196 Views

The dataset has undergone format conversion based on URPC2021_Sonar_images_data, enabling it to be trained by YOLO and RT-DETR models.

The folder 'images' contains image files

The folder 'labels' contains TXT format annotation files.

The annotation file in the folder annotations is in XML format

Data.yaml is the configuration file for YOLO training

Data_deTR is the configuration file for RT-DETR and US-DETR training

- Categories:

150 Views

150 Views

The LunarRock3D dataset is a novel contribution to the field of lunar geology and automated exploration, offering a rich collection of high-quality 3D lunar rock models. This dataset has been meticulously crafted to overcome the dearth of comprehensive 3D models of lunar rocks, thereby filling a critical gap in existing lunar surface datasets.Comprising a diverse array of rock types encountered in multiple lunar exploration missions, the LunarRock3D dataset provides detailed geometric and textural attributes of lunar rocks.

- Categories:

298 Views

298 Views

The dataset provides detailed information for wheat crop monitoring in the Karnal District, India, spanning the period from 2010 to 2022. It is divided into four main components. The first component, Remote Sensing Data, includes Sentinel-2 (10 m resolution) satellite data averaged over village boundaries, specifically over a wheat crop mask. This folder contains two Excel files: one for NDVI (Normalized Difference Vegetation Index) and another for NDWI (Normalized Difference Water Index), both providing fortnightly data during the Rabi season across a 10-year period.

- Categories:

553 Views

553 Views