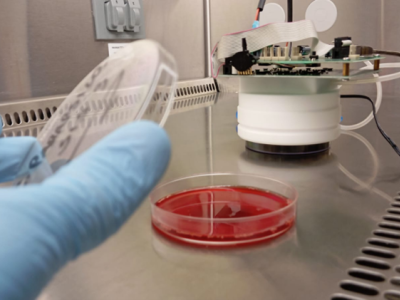

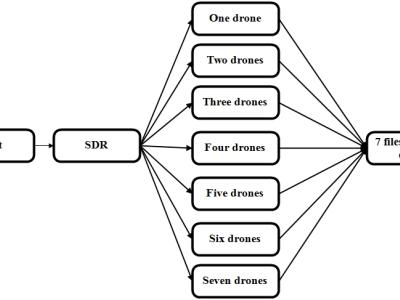

This dataset provides high-grade Received Signal Strength Indicator (RSSI) data collected in a set of experiments designed to estimate the number of drones present in a closed indoor space. The experiments vary the number of drones from one to seven, and the resulting variations in RSSI are captured with a 5G transceiver setup built on an Ettus E312 software-defined radio. The database contains seven files, with a minimum of about 270 million samples.

- Categories: