Geoscience and Remote Sensing

In this research, a small-scale drone with the required magnetometer sensor technologies and novel intelligent automated techniques -- Maggy, was built to automate and ease the procedures of cleaning landmines and UXO/IDE. The dataset with an MP4-formated video demonstrates how a magnetometer-integrated autonomous drone can map the magnetic field of a landmine region effectively and efficiently using an intelligent application through near real-time data streaming.

- Categories:

152 Views

152 ViewsCARLA-AOD is a novel aerial object detection dataset created using the CARLA autonomous driving simulator. It comprises 2,160 images from 18 diverse urban and rural scenarios, featuring 4 vehicle categories, 24 viewpoints, and 5 scales. This dataset aims to support research in aerial object detection, offering comprehensive viewpoint coverage and rich environmental diversity to enhance model performance and generalization.

- Categories:

931 Views

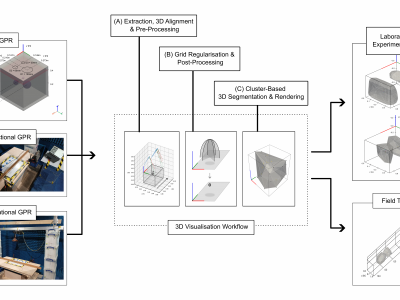

931 ViewsGround Penetrating Radar (GPR) facilitates the detection and localisation of subsurface structural anomalies in critical transport infrastructure (e.g. tunnels), better informing targeted maintenance strategies. However, conventional fixed-directional systems suffer from limited coverage - especially of less-accessible structural aspects (e.g. crowns) - alongside unclear visual output of anomaly spatial profiles, both for physical and simulated datasets.

- Categories:

472 Views

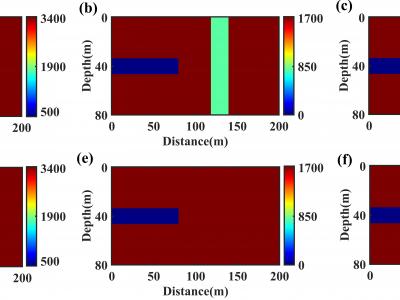

472 ViewsThis is a standard two-dimensional model of a tunnel, where the parameters of the tunnel cavity are set to the air medium. Accordingly, the P-wave velocity, S-wave velocity, and density are set to: 340m/s, 0m/s, and 120kg/m3, respectively. The model size is 80 grid points in the vertical direction and 200 grid points in the horizontal direction. The tunnel size is set with a vertical rectangular structure located 40m in front of the tunnel face (at 120m on the X-axis).

- Categories:

314 Views

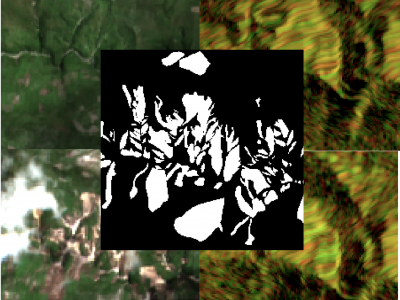

314 ViewsThe detection of the collapse of landslides trigerred by intense natural hazards, such as earthquakes and rainfall, allows rapid response to hazards which turned into disasters. The use of remote sensing imagery is mostly considered to cover wide areas and assess even more rapidly the threats. Yet, since optical images are sensitive to cloud coverage, their use is limited in case of emergency response. The proposed dataset is thus multimodal and targets the early detection of landslides following the disastrous earthquake which occurred in Haiti in 2021.

- Categories:

846 Views

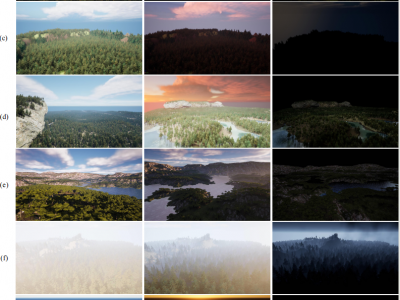

846 ViewsForest wildfires are one of the most catastrophic natural disasters, which poses a severe threat to both the ecosystem and human life. Therefore, it is imperative to implement technology to prevent and control forest wildfires. The combination of unmanned aerial vehicles (UAVs) and object detection algorithms provides a quick and accurate method to monitor large-scale forest areas.

- Categories:

1120 Views

1120 Views

The dataset aims to compile images of buildings with structural damage for analysis. The images can be classified by the severity of damage to building facades after seismic events using deep learning techniques, particularly pre-trained convolutional neural networks and transfer learning. The analysis can precisely identify structural damage levels, aiding in effective evaluation and response strategies.

- Categories:

268 Views

268 Views

The compressed files to be uploaded include three types: tif, csv, and py files.The compressed files to be uploaded include three types: tif, csv, and py files. These documents are directly related to our article.The following is a brief overview of the following files:

tif: 4160 training set images and 40 test set images.

csv: The AGB value corresponding to each image.

py:The code of data augmentation.

We hope that the documents we uploaded can make some contribution to the research of AGB estimation

- Categories:

70 Views

70 ViewsThe LuFI-RiverSnap dataset includes close-range river scene images obtained from various devices, such as UAVs, surveillance cameras, smartphones, and handheld cameras, with sizes up to 4624 × 3468 pixels. Several social media images, which are typically volunteered geographic information (VGI), have also been incorporated into the dataset to create more diverse river landscapes from various locations and sources.

Please see the following links:

- Categories:

621 Views

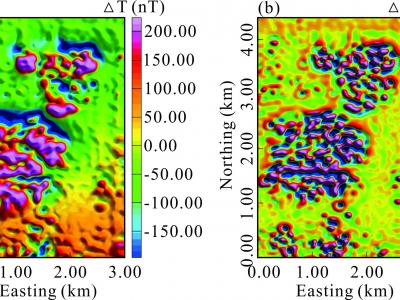

621 ViewsThe survey area is a partially burned coalfield at the border between the Loess Plateau and Mu Us Desert in Shaanxi Province, China, and most of the surface was covered by Quaternary sand and loess. There are burnt rocks in the coal seam in this area. We performed a surface survey of the total magnetic field and its gradient data in the area to obtain the range of burned zone. The inclination and declination of the geomagnetic field in the area were 59.0° and −5.4°, respectively. Figs. show the meshed total magnetic field and its gradient data with a grid distance of 50 m.

- Categories:

86 Views

86 Views