Computer Vision

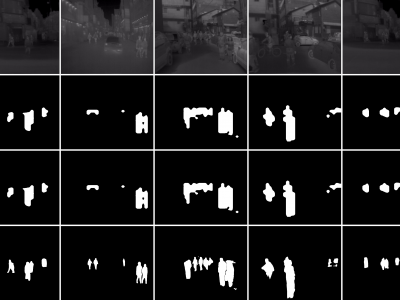

These are tight pedestrian masks for the thermal images in the KAIST Multispectral Pedestrian Dataset, available at https://soonminhwang.github.io/rgbt-ped-detection/

Both the thermal images and the original annotations are part of the parent dataset. Using the annotation files provided by the authors, we generate binary segmentation masks for the pedestrians with the Segment Anything Model from Meta.
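As a rough illustration of the mask-building step, the sketch below merges per-pedestrian masks into one binary mask. It is not the authors' pipeline: the `(x, y, width, height)` box layout, the helper names `fill_box` and `boxes_to_binary_mask`, and the choice to clip each instance mask to its box are all assumptions made for this example. The `segment` argument stands in for a real segmenter; the default simply fills the box. Plain Python lists keep the sketch dependency-free.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels; assumed layout
Mask = List[List[int]]           # rows of 0/1 values


def fill_box(height: int, width: int, box: Box) -> Mask:
    """Placeholder segmenter: marks every pixel inside the box as foreground."""
    x, y, w, h = box
    return [
        [1 if x <= c < x + w and y <= r < y + h else 0 for c in range(width)]
        for r in range(height)
    ]


def boxes_to_binary_mask(
    height: int,
    width: int,
    boxes: Sequence[Box],
    segment: Callable[[int, int, Box], Mask] = fill_box,
) -> Mask:
    """Union the per-box instance masks into a single binary mask.

    Each instance mask is clipped to its own box (an assumption of this
    sketch) so that a segmenter cannot leak foreground outside the annotation.
    """
    combined = [[0] * width for _ in range(height)]
    for box in boxes:
        x, y, w, h = box
        instance = segment(height, width, box)
        for r in range(max(y, 0), min(y + h, height)):
            for c in range(max(x, 0), min(x + w, width)):
                if instance[r][c]:
                    combined[r][c] = 1
    return combined


if __name__ == "__main__":
    # Two hypothetical pedestrian boxes on a tiny 4x6 frame.
    for row in boxes_to_binary_mask(4, 6, [(1, 1, 2, 2), (4, 0, 5, 1)]):
        print(row)
```

In practice, `segment` could wrap a box-prompted call to a Segment Anything predictor, converting each box to the corner format that model expects. That wiring is left out here because it needs the model weights.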

- Categories:

628 Views

The Dataset is a large-scale, diverse collection of high-resolution RGB images containing labeled wheat heads. Assembled through a collaborative effort of nine research institutes from seven countries, the dataset encompasses a wide range of genotypes, growth stages, and pedoclimatic conditions. Its primary goal is to facilitate the development of robust and accurate wheat head detection models for applications in precision phenotyping and crop management.

- Categories:

198 Views

We introduce two novel datasets for cell motility and wound healing research: the Wound Healing Assay Dataset (WHAD) and the Cell Adhesion and Motility Assay Dataset (CAMAD). WHAD comprises time-lapse phase-contrast images of wound healing assays using genetically modified MCF10A and MCF7 cells, while CAMAD includes MDA-MB-231 and RAW264.7 cells cultured on various substrates. These datasets offer diverse experimental conditions, comprehensive annotations, and high-quality imaging data, addressing gaps in existing resources.

- Categories:

964 Views

Video anomaly detection (VAD) is a challenging task that aims to recognize anomalies in video frames, and existing large-scale VAD research focuses primarily on road traffic and human activity scenes. Industrial scenes often contain a variety of unpredictable anomalies, and VAD methods can play a significant role there. However, applicable datasets and methods tailored to industrial production scenarios are lacking because of privacy and security concerns.

- Categories:

315 Views

The burgeoning demand for collaborative robotic systems to execute complex tasks collectively has intensified the research community's focus on advancing simultaneous localization and mapping (SLAM) in a cooperative context. Despite this interest, the scalability and diversity of existing datasets for collaborative trajectories remain limited, especially in scenarios with constrained perspectives where the generalization capabilities of Collaborative SLAM (C-SLAM) are critical for the feasibility of multi-agent missions.

- Categories:

383 Views

Perception systems are vital for the safety of autonomous driving. In complex driving scenarios, autonomous vehicles must cope with various natural hazards, such as heavy rain or raindrops on the camera lens. It is therefore essential to test the perception systems of autonomous vehicles comprehensively against these hazards, as regulatory agencies in many countries demand for human drivers.

- Categories:

295 Views

Forest wildfires are among the most catastrophic natural disasters, posing a severe threat to both the ecosystem and human life. Therefore, it is imperative to implement technology to prevent and control forest wildfires. The combination of unmanned aerial vehicles (UAVs) and object detection algorithms provides a quick and accurate method to monitor large-scale forest areas.

- Categories:

1365 Views

We are pleased to introduce the Qilin Watermelon Dataset, a unique collection of data aimed at investigating the relationship between a watermelon's appearance, tapping sound, and sweetness. This dataset is the result of our dedicated efforts to capture and record various aspects of Qilin watermelons, a special variety known for its exceptional taste and quality.

- Categories:

2228 Views

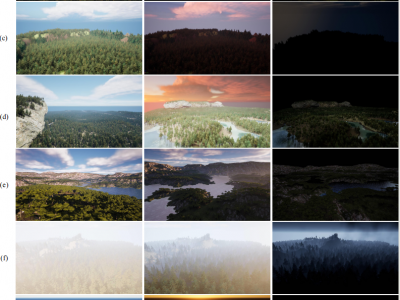

As with most AI methods, a 3D deep neural network needs to be trained to properly interpret its input data. More specifically, training a network for monocular 3D point cloud reconstruction requires a large set of recognized high-quality data, which can be challenging to obtain. Hence, this dataset pairs the image of a known object with its corresponding 3D point cloud representation. To collect a large number of categorized 3D objects, we use the ShapeNetCore (https://shapenet.org) dataset.

- Categories:

680 Views

The advancement of machine and deep learning methods in traffic sign detection is critical for improving road safety and developing intelligent transportation systems. However, the scarcity of comprehensive, publicly available datasets on Indian traffic has been a significant challenge for researchers in this field. To reduce this gap, we introduce the Indian Road Traffic Sign Detection dataset (IRTSD-Datasetv1), which captures real-world images across diverse conditions.

- Categories:

1774 Views