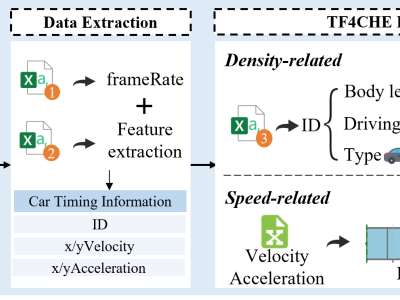

The Traffic Flow Dataset for China's Congested Highways & Expressways (TF4CHE) is derived from AD4CHE (Aerial Dataset for China's Congested Highways & Expressways). AD4CHE was recorded by unmanned aerial vehicles (UAVs) flying at an altitude of 100 m, and advanced calibration techniques give it a positioning accuracy of approximately 5 cm. For each vehicle it provides position, speed, and classification, as well as less common parameters such as self-offset and yaw rate.
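To make the listed vehicle metrics concrete, the sketch below models one vehicle sample per frame and shows what a yaw rate represents: the rate of change of heading, estimated here by a finite difference between two frames of the same track. Everything in it is an assumption for illustration only. The class `VehicleSample`, its field names and units, the `heading_rad` field, and the `fps` parameter are hypothetical and do not reflect the actual AD4CHE/TF4CHE file schema or how the dataset itself computes yaw rate. The exact definition of self-offset should be taken from the dataset's own documentation.

```python
from dataclasses import dataclass
import math


@dataclass
class VehicleSample:
    """One vehicle at one video frame. Field names and units are HYPOTHETICAL."""
    track_id: int
    frame: int
    x_m: float            # longitudinal position in metres (assumed)
    y_m: float            # lateral position in metres (assumed)
    speed_mps: float      # speed in m/s (assumed)
    vehicle_class: str    # e.g. "car" or "truck" (assumed labels)
    heading_rad: float    # heading angle in radians (assumed field)
    self_offset_m: float  # "self-offset"; definition per dataset docs, metres assumed


def yaw_rate(prev: VehicleSample, curr: VehicleSample, fps: float) -> float:
    """Finite-difference yaw rate (rad/s) between two samples of one track.

    `fps` is the (hypothetical) frame rate used to turn frame numbers into seconds.
    """
    if prev.track_id != curr.track_id:
        raise ValueError("samples belong to different tracks")
    dt = (curr.frame - prev.frame) / fps
    if dt <= 0:
        raise ValueError("curr must come after prev")
    # Wrap the heading change into (-pi, pi] so that crossing +/-pi
    # is not mistaken for an almost full turn.
    delta = curr.heading_rad - prev.heading_rad
    dtheta = math.atan2(math.sin(delta), math.cos(delta))
    return dtheta / dt


if __name__ == "__main__":
    a = VehicleSample(1, 0, 0.0, 0.0, 20.0, "car", 0.00, 0.1)
    b = VehicleSample(1, 30, 20.0, 0.0, 20.0, "car", 0.03, 0.1)
    print(yaw_rate(a, b, fps=30.0))
```

Wrapping the angle difference with `atan2(sin, cos)` is a common way to avoid a spurious jump when the heading crosses ±π. If the dataset reports yaw rate directly, that value should be preferred over a recomputed estimate.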
