Communications

Owing to the substantial bandwidth they offer, frequencies above 100 GHz have been explored intensively for wireless communications in recent years. These sub-terahertz channels are susceptible to blockage, which makes reflected paths crucial for seamless connectivity. However, at such high frequencies, reflections deviate from the familiar mirror-like specular behavior because the signal wavelength becomes comparable to the height perturbations on the surfaces of the reflectors.
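The wavelength argument above can be made concrete with two standard textbook tools: the Rayleigh roughness criterion and the Gaussian-roughness scattering loss factor ρ_s = exp[−8(π σ_h cos θ_i / λ)²], which scales the smooth-surface reflection coefficient. This is a minimal sketch of that classical model, not the method used in this work. The RMS surface height deviation σ_h = 0.2 mm and normal incidence are hypothetical values chosen only to compare 28 GHz with 300 GHz.

```python
import math

C = 299_792_458.0  # speed of light in vacuum (m/s)


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength in metres."""
    return C / freq_hz


def scattering_loss_factor(freq_hz: float, sigma_h_m: float, theta_i_rad: float = 0.0) -> float:
    """Gaussian-roughness factor rho_s = exp(-8 * (pi * sigma_h * cos(theta_i) / lambda)^2).

    rho_s scales the smooth-surface (Fresnel) reflection coefficient;
    theta_i is the angle of incidence measured from the surface normal.
    """
    x = math.pi * sigma_h_m * math.cos(theta_i_rad) / wavelength_m(freq_hz)
    return math.exp(-8.0 * x * x)


def is_smooth_rayleigh(freq_hz: float, sigma_h_m: float, theta_i_rad: float = 0.0) -> bool:
    """Rayleigh criterion: the surface acts smooth if sigma_h < lambda / (8 * cos(theta_i))."""
    return sigma_h_m < wavelength_m(freq_hz) / (8.0 * math.cos(theta_i_rad))


if __name__ == "__main__":
    sigma_h = 0.2e-3  # hypothetical RMS surface height deviation: 0.2 mm
    for f in (28e9, 300e9):
        print(f"{f / 1e9:.0f} GHz: lambda = {wavelength_m(f) * 1e3:.2f} mm, "
              f"smooth = {is_smooth_rayleigh(f, sigma_h)}, "
              f"rho_s = {scattering_loss_factor(f, sigma_h):.3f}")
```

Under these assumptions the same surface counts as smooth at 28 GHz (ρ_s ≈ 0.97) but as rough at 300 GHz (ρ_s ≈ 0.04), so most of the reflected energy leaves the specular direction.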

Anomaly detection plays a crucial role in many domains, including cybersecurity, space science, finance, and healthcare. However, the lack of standardized benchmark datasets hinders the comparative evaluation of anomaly detection algorithms. In this work, we address this gap by presenting a curated collection of preprocessed spacecraft anomaly datasets compiled from multiple sources. These datasets cover a diverse range of anomalies and real-world spacecraft scenarios.

To access this dataset without purchasing an IEEE DataPort subscription, please visit: https://zenodo.org/doi/10.5281/zenodo.11711229

Please cite the following paper when using this dataset:

These datasets were collected from tests of decentralized synchronization among collaborative robots (cobots) over 5G and Ethernet networks, with and without causal message ordering. Each file is named after its connection type and causality setting; for example, 5G_with_causality.txt stores the results of tests performed on a public 5G network using causal message ordering for cobot groups of 5, 10, 20, 30, and 40 robots. Within each .txt file, the results for each robot group are listed separately.
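Causal message ordering means that a node delivers a message only after every message that causally precedes it. One common way to enforce this is the classic vector-clock causal broadcast rule, sketched below. This is an illustrative assumption, not necessarily the ordering mechanism used in these tests, and the names `Message`, `CausalNode`, and `demo` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    sender: int      # index of the sending cobot
    vclock: tuple    # sender's vector clock at send time
    payload: str


class CausalNode:
    """Hypothetical cobot node that delivers broadcast messages in causal order."""

    def __init__(self, pid: int, n: int):
        self.pid = pid
        self.clock = [0] * n   # clock[k]: messages delivered from node k (own sends for k == pid)
        self.pending = []      # received but not yet deliverable
        self.delivered = []    # payloads in delivery order

    def send(self, payload: str) -> Message:
        self.clock[self.pid] += 1
        return Message(self.pid, tuple(self.clock), payload)

    def _deliverable(self, m: Message) -> bool:
        j = m.sender
        # It must be the next message expected from the sender...
        if m.vclock[j] != self.clock[j] + 1:
            return False
        # ...and everything the sender had seen from others must already be delivered here.
        return all(m.vclock[k] <= self.clock[k]
                   for k in range(len(self.clock)) if k != j)

    def receive(self, m: Message) -> None:
        self.pending.append(m)
        progress = True
        while progress:  # one delivery may unblock buffered messages
            progress = False
            for msg in list(self.pending):
                if self._deliverable(msg):
                    self.pending.remove(msg)
                    self.clock[msg.sender] = msg.vclock[msg.sender]
                    self.delivered.append(msg.payload)
                    progress = True


def demo() -> list:
    c0, c1, c2 = CausalNode(0, 3), CausalNode(1, 3), CausalNode(2, 3)
    a = c0.send("a")
    c1.receive(a)      # cobot 1 sees "a" ...
    b = c1.send("b")   # ... so "b" causally follows "a"
    c2.receive(b)      # "b" reaches cobot 2 first and is buffered
    c2.receive(a)      # "a" arrives; both are delivered in causal order
    return c2.delivered


print(demo())  # ['a', 'b']
```

Without the buffering rule, cobot 2 would simply apply "b" before "a" in arrival order.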

Mobile phones are central to modern communication, yet for individuals with tremors, the precision required for touch-based interfaces is a significant hurdle. In pursuit of social equality and to empower those with tremors to interact more effectively with mobile technology, this study introduces an optical see-through augmented reality (AR) system equipped with a stabilized filter.

These datasets were collected from an array of six gas sensors for use in an odor recognition system. The sensors used to create the dataset are Df-NH3, MQ-136, MQ-135, MQ-8, MQ-4, and MQ-2.

Odors from 10 different samples were recorded with these six sensors:

1- Natural Air

2- Fresh Onion

3- Fresh Garlic

4- Fresh Lemon

5- Tomato

6- Petrol

7- Gasoline

8- Coffee 1,2

9- Orange

10- Colonia Perfume

Meeting the ultra-high data rate demands of V2X (Vehicle-to-Everything) communications with the millimeter wave (mmWave) spectrum requires accurate channel modeling to support the efficient development of next-generation network and device design strategies. To that end, this work describes the design of a novel, fully autonomous robotic beam-steering platform, equipped with a custom broadband sliding correlator channel sounder, for 28 GHz V2X propagation modeling on the NSF POWDER experimental testbed.

- Categories:

301 Views

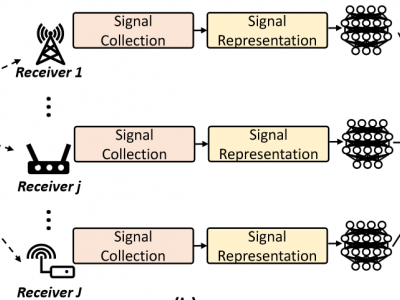

Radio frequency fingerprint identification (RFFI) is an emerging device authentication technique that uses the hardware characteristics of the RF front-end as device identifiers. However, receiver hardware impairments interfere with the extraction of transmitter-impairment features, and their effect and mitigation have not been comprehensively studied. In this paper, we propose a receiver-agnostic RFFI system that employs adversarial training to learn receiver-independent features.
