Climate Change/Environmental

Amid global climate change, rising atmospheric methane (CH4) concentrations significantly influence the climate system, contributing to temperature increases and atmospheric chemistry changes. Accurate monitoring of these concentrations is essential to support global methane emission reduction goals, such as those outlined in the Global Methane Pledge targeting a 30% reduction by 2030. Satellite remote sensing, offering high precision and extensive spatial coverage, has become a critical tool for measuring large-scale atmospheric methane concentrations.

- Categories:

23 Views

23 Views

This dataset comprises 30 CSV files featuring text-based narratives developed as part of the MOVING (MOuntain Valorisation through INterconnectedness and Green Growth) Horizon 2020 project, which explores 454 value chains across 23 rural regions in 16 European countries. Additionally, it includes 30 JSON files that annotate the keywords within these narratives, linking them to their corresponding Wikidata entries and QIDs.

- Categories:

54 Views

54 Views

This dataset comprises 30 CSV files featuring text-based narratives developed as part of the MOVING (MOuntain Valorisation through INterconnectedness and Green Growth) Horizon 2020 project, which explores 454 value chains across 23 rural regions in 16 European countries. Additionally, it includes 30 JSON files that annotate the keywords within these narratives, linking them to their corresponding Wikidata entries and QIDs.

- Categories:

12 Views

12 Views

Ecological carrying capacity (ECC) is central to assessing the sustainability of ecosystems, aiming to quantify the limits of natural systems to support human activities while maintaining biodiversity and resource regeneration. To assess ECC, earlier studies typically used the analytic hierarchy process (AHP) method for modeling.

- Categories:

44 Views

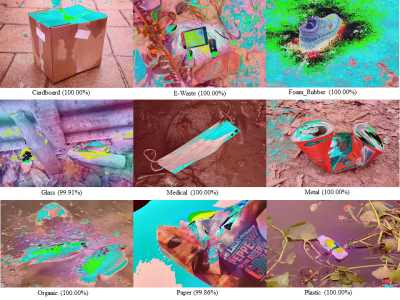

44 ViewsAn automatic waste classification system embedded with higher accuracy and precision of convolution neural network (CNN) model can significantly the reduce manual labor involved in recycling. The ConvNeXt architecture has gained remarkable improvements in image recognition. A larger dataset, called TrashNeXt, comprising 23,625 images across nine categories has been introduced in this study by combining and thoroughly analyzing various pre-existing datasets.

- Categories:

389 Views

389 Views

This dataset accompanies the IEEE IoT Journal paper titled "A Dual System IoT Strategy for Hyperlocal Spatial-Temporal Microclimate Monitoring in Urban Environments Using LoRa." It is intended for validating bespoke sensors against commercial sensors. The data were collected using two different types of sensors deployed at eight locations in East London, starting on August 1, 2023, and covering a period of one year.

- Categories:

56 Views

56 Views

CO2 Emissions Data Visualization Project – I Hug Trees

- Categories:

102 Views

102 Views

Monitoring glacier calving fronts is essential for understanding ice dynamics and their response to climate change. The present ice frontdatasets are limited to temporal resolution and precision, making it difficult to efficiently capture the rapid detailed changes in calving processes. In this study, we proposed a novel approach of calving front extraction derived from Sentinel-2 satellite images, integrated with samgeo and geemap modules based on Segment Anything Model (SAM) and Google Earth Engine (GEE) framework.

- Categories:

37 Views

37 Views