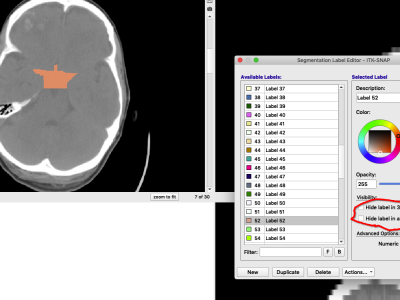

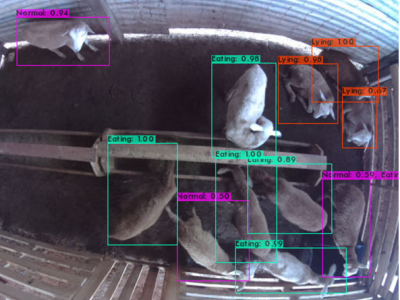

Semantic segmentation is an active research topic in deep learning. It has applications in many domains, including food recognition, where it separates the food portion of an image from the non-food background. However, no large public food dataset with segmentation annotations is available for training semantic segmentation models. We therefore prepared a dataset named 'SEG-FOOD' [44], containing images from the FOOD101, PFID, and Pakistani Food datasets, and open-sourced the annotated dataset for future research. The images were annotated using JS Segment Annotator.
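To illustrate how a per-pixel segmentation mask removes the non-food background, here is a minimal NumPy sketch. It assumes a hypothetical mask encoding in which label `0` marks background and any other value marks a food class. This encoding, and the names `remove_background` and `BACKGROUND_LABEL`, are illustrative assumptions, not the SEG-FOOD file format or the JS Segment Annotator output format.

```python
import numpy as np

# Assumption: label 0 marks non-food (background) pixels; any other label is food.
BACKGROUND_LABEL = 0


def remove_background(image: np.ndarray, mask: np.ndarray, fill: int = 0) -> np.ndarray:
    """Return a copy of `image` with every non-food pixel set to `fill`.

    image: H x W x C array (e.g. RGB).
    mask:  H x W array of per-pixel class labels (hypothetical encoding).
    """
    if image.shape[:2] != mask.shape:
        raise ValueError("image and mask must have the same height and width")
    food = mask != BACKGROUND_LABEL   # boolean H x W mask of food pixels
    out = np.full_like(image, fill)   # blank canvas with the same shape and dtype
    out[food] = image[food]           # copy only the food pixels
    return out


# Toy 2x2 RGB image whose right column is labelled as food.
image = np.array([[[10, 20, 30], [40, 50, 60]],
                  [[70, 80, 90], [100, 110, 120]]], dtype=np.uint8)
mask = np.array([[0, 1],
                 [0, 1]])
result = remove_background(image, mask)
print(result[:, 0].tolist())  # background column, now filled with zeros
print(result[:, 1].tolist())  # food column, unchanged
```

Using a boolean mask with `np.full_like` keeps the input image untouched and preserves its dtype. A model trained on a segmentation dataset would produce such a mask per image, and the same step then isolates the food region for downstream recognition.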

- Categories: