Sensors

Cables are essential load-bearing components, so their reliability directly influences the safety of engineering structures. Online monitoring of cable tension enhances structural reliability and enables engineers to regulate stresses, extending the operational lifespan of cable systems. This study proposes a half-type cable tension sensor (HCTS) based on triboelectric nanogenerators (TENGs). The HCTS features an internal sensing unit comprising conductive fabrics and silicone films.

52 Views

The SDUITC database is a multimodal resource developed at the Shandong Cooperative Vehicle-Infrastructure Test Base, which uses roadside cameras and LiDAR to monitor road targets and collect point cloud information. After ground segmentation (target point cloud extraction), target identification and tracking, and feature extraction, the target point cloud information is refined and summarized into three components: (1) a video snapshot of each captured target; (2) point cloud clustering information for each target; and (3) feature tables.
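
The ground-segmentation step can be illustrated with a common technique, RANSAC plane fitting: repeatedly fit a plane through three randomly chosen points, keep the plane supported by the most points within a distance threshold, and treat those inliers as ground. The listing does not say which method the SDUITC pipeline uses, so this is a minimal sketch under that assumption, not its implementation; the function names, the 0.1 threshold (in the cloud's units, e.g. metres), and the iteration count are hypothetical.

```python
import numpy as np


def ransac_ground_mask(points, dist_thresh=0.1, iters=200, seed=0):
    """Return a boolean mask of points within dist_thresh of the best-supported plane.

    points: (N, 3) array of x, y, z coordinates (hypothetical input layout).
    """
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(points), dtype=bool)
    for _ in range(iters):
        # Sample three distinct points and build the plane through them.
        p0, p1, p2 = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        length = np.linalg.norm(normal)
        if length < 1e-9:  # the three points are (nearly) collinear; skip
            continue
        normal /= length
        # Perpendicular distance from every point to the candidate plane.
        inliers = np.abs((points - p0) @ normal) < dist_thresh
        if inliers.sum() > best.sum():
            best = inliers
    return best


def extract_targets(points, **ransac_kwargs):
    """Drop ground points and keep the rest as candidate target points."""
    points = np.asarray(points, dtype=float)
    ground = ransac_ground_mask(points, **ransac_kwargs)
    return points[~ground]
```

A single plane assumes the road is locally flat; sloped or curved roads usually need per-region fits. The remaining points would then go to clustering and tracking.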

117 Views

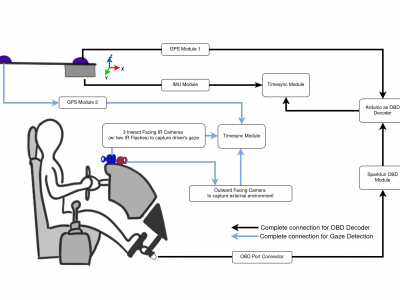

Repeated Route Naturalistic Driving Dataset (R2ND2) is a dual-perspective dataset for driver behavior analysis, consisting of (a) vehicular data collected through the OBD port with task-specific CAN-decoding sensors, together with external sensors, and (b) gaze measurements collected with an industry-standard multi-camera gaze calibration and collection system. Our experiment is designed to capture the variability in driving experience that depends on the time of day, and it provides insight into how these additional metrics correlate with driver behavior.

453 Views

In this study, millimeter-wave (MMW) data are collected using a commercial handheld scanner (Vayyar's ECS2000), focusing on localized scans of the human body. The collected data are complex-valued (CV), high-resolution local 3D pseudo-images over a volume of 13×13×10 cm, with spatial resolutions of 1.6 mm, 1.6 mm, and 4.3 mm in the x, y, and z directions, respectively. Vayyar's compact, portable ECS2000 MMW scanner is built around a single RF board operating from 60.4 to 69.9 GHz, housing transmitting and receiving antennas in a multiple-input multiple-output (MIMO) setup.
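
Because each scan is a complex-valued 3D volume, a common first step for viewing it is to take the magnitude and collapse it along depth with a maximum-intensity projection. The sketch below assumes a scan has already been loaded as a NumPy complex array ordered (x, y, z); the file format and the function name are assumptions, not part of the dataset description. Converting the returned depth index to millimetres by multiplying by 4.3 would hold only if the z samples are spaced at the stated resolution, which is also an assumption.

```python
import numpy as np


def max_intensity_projection(volume):
    """Collapse a complex-valued (x, y, z) volume into two 2D maps.

    Returns (mip, depth_index): the peak magnitude along z for each (x, y)
    column, and the z index at which that peak occurs.
    """
    volume = np.asarray(volume)
    if volume.ndim != 3:
        raise ValueError("expected a 3D (x, y, z) array")
    magnitude = np.abs(volume)  # complex samples -> reflectivity magnitude
    return magnitude.max(axis=2), magnitude.argmax(axis=2)


# Usage with a synthetic volume containing one bright reflector.
vol = np.zeros((4, 5, 6), dtype=complex)
vol[2, 3, 5] = 3 + 4j
mip, depth = max_intensity_projection(vol)
```

Discarding phase keeps the example simple; any analysis that relies on the complex nature of the data would work on `volume` directly instead.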

153 Views

The dataset used in the paper by Ke Ma et al., "A Dynamic Landslide Warning Model Based on Grey System Theory."
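
The listing does not describe the warning model itself. As background, the classic grey forecasting model in grey system theory is GM(1,1): accumulate the series, fit the whitened equation dx1/dt + a·x1 = b by least squares, then difference the fitted accumulation back to the original scale. This is a minimal sketch of textbook GM(1,1), assuming a short, uniformly sampled, positive monitoring series; it is not necessarily the dynamic model used by Ma et al., and the function names are hypothetical.

```python
import numpy as np


def gm11_fit(x0):
    """Estimate the GM(1,1) development coefficient a and grey input b."""
    x0 = np.asarray(x0, dtype=float)
    if x0.ndim != 1 or x0.size < 4:
        raise ValueError("GM(1,1) needs a 1D series with at least 4 samples")
    x1 = np.cumsum(x0)                    # accumulated generating operation (AGO)
    z1 = 0.5 * (x1[1:] + x1[:-1])         # background values (adjacent means)
    B = np.column_stack((-z1, np.ones_like(z1)))
    # Least-squares solution of x0(k) + a * z1(k) = b for k = 2..n.
    (a, b), *_ = np.linalg.lstsq(B, x0[1:], rcond=None)
    if abs(a) < 1e-12:
        raise ValueError("development coefficient a is ~0; GM(1,1) is degenerate")
    return a, b


def gm11_predict(x0, horizon):
    """Return fitted values for the observed samples plus `horizon` forecasts."""
    x0 = np.asarray(x0, dtype=float)
    a, b = gm11_fit(x0)
    k = np.arange(x0.size + horizon)
    # Time-response function of the whitened equation dx1/dt + a * x1 = b.
    x1_hat = (x0[0] - b / a) * np.exp(-a * k) + b / a
    # Inverse AGO: first differences recover the original-scale series.
    return np.concatenate(([x0[0]], np.diff(x1_hat)))
```

For example, `gm11_predict(series, 3)` returns one fitted value per observation followed by three forecasts; a warning rule would then compare forecasts against thresholds, which the source does not specify.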

78 Views

Six flexible piezoelectric sensors were evenly attached around the patella to capture patellar motion signals during knee flexion. This dataset includes data from three distinct participant groups categorized by knee joint health status. The healthy group consists of 27 participants with normal knee joints (10 of them female). The sub-healthy group includes 15 participants from Beihang University experiencing mild knee discomfort (8 of them female).

62 Views

This ZIP file contains two datasets covering the same 14-day period. The first consists of real-world smart home data, providing detailed logs from six devices: a Plug Fan, Plug PC, Humidity Sensor, Presence Sensor, Light Bulb, and Window Opening Sensor. These data include device interactions and environmental conditions such as temperature, humidity, and presence. The second dataset is generated by a smart home simulator for the same period and contains simulated device interactions and environmental variables.

245 Views

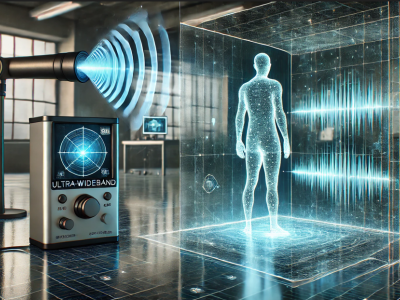

This dataset accompanies a human-detection model based on ultra-wideband (UWB) radar technology. Two distinct datasets were created with the UWB radar device, leveraging its dual features. Data collection involved two main scenarios, each containing multiple sub-scenarios that varied the position, distance, angle, and orientation of the human subject relative to the radar. Unlike conventional approaches that rely on signal processing such as noise or background removal, this study analyzes raw UWB radar data directly.

599 Views

The bottom anode in a Direct Current Electric Arc Furnace (DC EAF) completes the electrical circuit needed to sustain the arc within the furnace. For pin-type bottom anodes, the temperatures of selected pins instrumented with thermocouples are monitored to track bottom wear and to inform the operator when the furnace should be removed from service.

19 Views

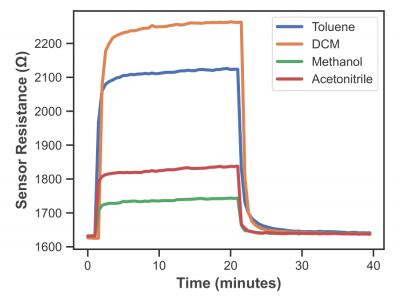

This dataset contains 2,016 sensor responses collected from an array of conductive carbon-black polymer composite sensors exposed to four target analytes (acetonitrile, dichloromethane (DCM), methanol, and toluene) at nine distinct concentration levels ranging from 0.5% to 20% P/P₀. Each sensor was exposed to the analyte for 20 minutes, followed by 20 minutes of nitrogen flushing to restore the baseline. Each response consists of 80 time points (one every 30 seconds), each recording the sensor's resistance.
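
The stated figures are consistent: 80 samples at 30-second intervals span 40 minutes, matching 20 minutes of exposure plus 20 minutes of flushing, and 2,016 responses over 4 analytes × 9 concentrations gives 56 responses per analyte-concentration pair if the design is balanced (an assumption). The sketch below uses that timing to split a response into its two phases and compute a simple fractional resistance change. It assumes the first sample is the pre-exposure baseline R0; the function names and the choice of end-of-exposure feature are hypothetical, not taken from the dataset.

```python
import numpy as np

SAMPLE_PERIOD_S = 30      # one time point every 30 s
EXPOSURE_S = 20 * 60      # 20 min analyte exposure, then 20 min N2 flush
N_POINTS = 80             # time points per response


def split_response(resistance):
    """Split a response (last axis = 80 time points) into exposure and recovery phases."""
    r = np.asarray(resistance, dtype=float)
    if r.shape[-1] != N_POINTS:
        raise ValueError(f"expected {N_POINTS} time points, got {r.shape[-1]}")
    n_exposure = EXPOSURE_S // SAMPLE_PERIOD_S   # 40 samples
    return r[..., :n_exposure], r[..., n_exposure:]


def end_of_exposure_response(resistance):
    """Fractional resistance change (R_end - R0) / R0 over the exposure phase.

    Assumes the first sample is the pre-exposure baseline R0.
    """
    exposure, _ = split_response(resistance)
    r0 = exposure[..., 0]
    return (exposure[..., -1] - r0) / r0
```

Because both functions operate on the last axis, a stacked array of shape (n_responses, 80) yields one feature per response.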

90 Views
