Sensors

This dataset contains results from laboratory trials related to the paper "Optimizing Congestion Management and Enhancing Resilience in Low-Voltage Grids Using OPF and MPC Control Algorithms Through Edge Computing and IEC 61850 Standards", currently being published in IEEE Access.

Jamming devices pose a significant threat by disrupting signals from the global navigation satellite system (GNSS), compromising the robustness of accurate positioning. Detecting anomalies within frequency snapshots is crucial to counteracting such interference effectively. A critical preliminary step is the reliable classification of interference, together with the characterization and localization of jamming devices.
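
Anomaly detection in frequency snapshots can be illustrated with a simple threshold detector. This is a minimal sketch, not the method used with this dataset: it assumes each snapshot is a list of per-bin power values in dB, and the function names, the per-bin median reference, and the 6 dB threshold are all hypothetical choices.

```python
import statistics
from typing import List, Sequence


def build_reference(clean_snapshots: Sequence[Sequence[float]]) -> List[float]:
    """Per-bin median power (dB) over snapshots recorded without interference."""
    return [statistics.median(bin_values) for bin_values in zip(*clean_snapshots)]


def anomaly_score(snapshot: Sequence[float], reference: Sequence[float]) -> float:
    """Largest per-bin excess (dB) of a snapshot over the reference spectrum."""
    return max(p - r for p, r in zip(snapshot, reference))


def is_interfered(snapshot: Sequence[float], reference: Sequence[float],
                  threshold_db: float = 6.0) -> bool:
    """Flag the snapshot if any bin rises more than threshold_db above reference.

    A narrowband jammer lifts a few bins and a wideband jammer lifts many;
    in both cases the maximum per-bin excess crosses the threshold.
    """
    return anomaly_score(snapshot, reference) > threshold_db
```

Typical use is `reference = build_reference(clean)` followed by `is_interfered(snapshot, reference)` for each incoming snapshot. The median keeps the reference robust to occasional outliers in the clean recordings. A detector like this only says *whether* interference is present; telling interference classes apart would still require a classifier.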

Jamming devices pose a significant threat by disrupting signals from the global navigation satellite system (GNSS), compromising the robustness of accurate positioning. Detecting anomalies in frequency snapshots is crucial to counteract these interferences effectively. The ability to adapt to diverse, unseen interference characteristics is essential for ensuring the reliability of GNSS in real-world applications. We recorded a dataset with our own sensor station at a German highway with two interference classes and one non-interference class.

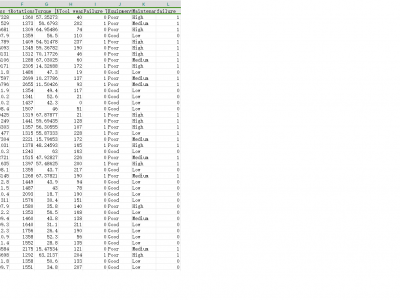

The Machine Failure Predictions Dataset (D_2) is a real-world dataset sourced from Kaggle, containing 10,000 records and 14 features pertinent to IIoT device performance and health status. The binary target feature, 'failure', indicates whether a device is functioning (0) or has failed (1). Predictor variables include telemetry readings and categorical features related to device operation and environment. Data preprocessing included aggregating features related to failure types and removing non-informative features such as Product ID.
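
The preprocessing described above can be sketched in plain Python. This is one possible interpretation, not the authors' pipeline: the failure-type column names (`TWF`, `HDF`, `PWF`, `OSF`, `RNF`) and the `UDI` identifier are assumptions for illustration, and "aggregating" is read here as collapsing the per-type flags into the single binary `failure` target.

```python
from typing import Dict, List

# Hypothetical column names: assumed for illustration, not confirmed by the
# dataset description.
FAILURE_TYPE_COLUMNS = ["TWF", "HDF", "PWF", "OSF", "RNF"]
ID_COLUMNS = ["UDI", "Product ID"]


def preprocess(rows: List[Dict[str, object]]) -> List[Dict[str, object]]:
    """Collapse per-type failure flags into one binary 'failure' target and
    drop non-informative identifier columns."""
    cleaned = []
    for row in rows:
        # Keep every column except the failure-type flags and identifiers.
        out = {k: v for k, v in row.items()
               if k not in FAILURE_TYPE_COLUMNS and k not in ID_COLUMNS}
        # A device counts as failed (1) if any failure-type flag is set.
        out["failure"] = int(any(int(row.get(c, 0)) for c in FAILURE_TYPE_COLUMNS))
        cleaned.append(out)
    return cleaned
```

Working on a list of dictionaries keeps the sketch dependency-free; with a tabular library the same steps map onto a row-wise "any" over the flag columns followed by a column drop.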

Since its discovery, giant magnetoresistance (GMR) has found many applications in sensors, one key area being biomedical sensing. Large-area, low-aspect-ratio GMR biosensors with ultra-high sensitivity were demonstrated experimentally, and a relatively simple theoretical explanation is provided.
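
For reference, the magnetoresistance ratio commonly used to quantify GMR is given below. This is the standard textbook definition, where $R_{\mathrm{AP}}$ and $R_{\mathrm{P}}$ are the resistances with antiparallel and parallel magnetization of the ferromagnetic layers. The field sensitivity $S$ shown second is one common convention (change in MR per unit applied field $H$), assumed here rather than taken from this dataset.

```latex
\mathrm{MR} = \frac{R_{\mathrm{AP}} - R_{\mathrm{P}}}{R_{\mathrm{P}}} \times 100\,\%,
\qquad
S = \frac{\Delta \mathrm{MR}}{\Delta H}
```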

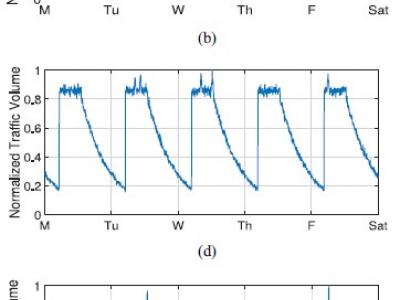

This dataset provides realistic Internet of Things (IoT) traffic time-series data generated using the novel Tiered Markov-Modulated Stochastic Process (TMMSP) framework. The dataset captures the unique temporal dynamics and stochastic characteristics of three distinct IoT applications: smart city, eHealth, and smart factory systems. Each application's traffic pattern reflects real-world behaviors including human-machine correlation (HMC), sudden data bursts, and application-specific seasonality patterns.
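
The core idea of Markov-modulated traffic can be illustrated with a plain two-state Markov-modulated Poisson process (MMPP). This is a deliberately simpler relative of the tiered process named above and does not reproduce TMMSP, HMC, or seasonality. The idle/burst states, transition matrix, and rates in the usage note are hypothetical. A small Poisson sampler (Knuth's multiplication method) is included so the sketch needs only the standard library.

```python
import math
import random
from typing import List, Sequence


def poisson(rng: random.Random, lam: float) -> int:
    """Sample a Poisson(lam) count with Knuth's method (fine for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def mmpp_trace(transition: Sequence[Sequence[float]], rates: Sequence[float],
               steps: int, seed: int = 0) -> List[int]:
    """Generate per-step packet counts from a discrete-time MMPP.

    transition[i][j] is the probability of moving from hidden state i to j;
    rates[i] is the mean number of packets per step while in state i.
    """
    rng = random.Random(seed)
    state = 0
    counts: List[int] = []
    for _ in range(steps):
        # Emit traffic according to the current hidden state's rate.
        counts.append(poisson(rng, rates[state]))
        # Move to the next hidden state using the current row of the matrix.
        state = rng.choices(range(len(rates)), weights=transition[state])[0]
    return counts
```

For example, `mmpp_trace([[0.95, 0.05], [0.30, 0.70]], [2.0, 20.0], 200, seed=42)` yields a trace that mostly idles at low counts with occasional short bursts. A tiered model would, roughly speaking, stack further modulating layers on top of this single hidden chain.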

With rapid global urbanization and rising energy demand, efficient gas leak detection is vital for public safety. This study proposes an efficient and sensitive gas leak detection method based on reinforcement learning to improve localization speed and robustness. The approach comprises critical area identification, reinforcement learning model training, and leak point localization. Noise and missing data are also introduced to test the robustness of the model.
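
The robustness test (injecting noise and missing data) can be sketched as a perturbation applied to sensor readings before they reach the model. This is a minimal sketch under stated assumptions: the function name, the zero-mean Gaussian noise model, and the use of `None` to mark a dropped reading are all hypothetical, and the reinforcement learning agent itself is not shown.

```python
import random
from typing import List, Optional, Sequence


def perturb_readings(readings: Sequence[float], noise_std: float,
                     missing_rate: float, seed: int = 0) -> List[Optional[float]]:
    """Add zero-mean Gaussian noise to each reading and drop a random fraction
    of readings (returned as None) to emulate missing sensor data."""
    if not 0.0 <= missing_rate <= 1.0:
        raise ValueError("missing_rate must be in [0, 1]")
    rng = random.Random(seed)
    out: List[Optional[float]] = []
    for value in readings:
        if rng.random() < missing_rate:
            out.append(None)  # simulated sensor dropout
        else:
            out.append(value + rng.gauss(0.0, noise_std))
    return out
```

Sweeping `noise_std` and `missing_rate` over a grid and recording localization error at each setting is one straightforward way to chart how gracefully a trained model degrades; a fixed `seed` keeps each run reproducible.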

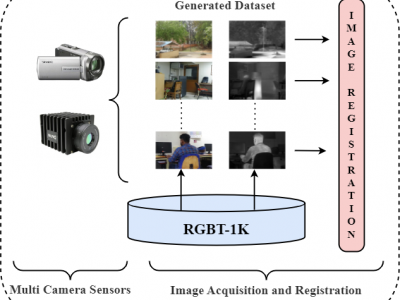

We present RGBT-1K, a novel paired RGB-thermal dataset comprising 1,000 image pairs curated to support research in multi-modality image processing. The dataset captures diverse indoor and outdoor scenes under varying lighting conditions, offering a robust benchmark for image enhancement, object detection, and scene analysis. Images were acquired with a FLIR A70 thermal camera and a Sony Handycam HDR-CX405, with the Handycam mounted on top of the thermal camera for precise alignment.

Interference signals degrade and disrupt Global Navigation Satellite System (GNSS) receivers, impairing their localization accuracy. Such signals therefore need to be detected, classified, and located to ensure reliable GNSS operation. State-of-the-art techniques use supervised deep learning to detect and classify potential interference signals. We fuse both modalities from only a single bandwidth-limited low-cost sensor, rather than from a fine-grained high-resolution sensor and a coarse-grained low-resolution low-cost sensor.

Jamming devices pose a significant threat by disrupting signals from the global navigation satellite system (GNSS), compromising the robustness of accurate positioning. Detecting anomalies in frequency snapshots is crucial to counteract these interferences effectively. The ability to adapt to diverse, unseen interference characteristics is essential for ensuring the reliability of GNSS in real-world applications. We recorded a dataset with our own sensor station at a German highway with eight interference classes and three non-interference classes.
