Machine Learning

The Human dataset provides a comprehensive collection of drug-target interactions specific to human proteins, aimed at facilitating research in drug discovery and bioinformatics. This dataset includes a diverse range of human proteins as drug targets, along with associated drug molecules and their respective interaction labels. The data consists of molecular descriptors of drugs, protein sequences, and experimentally validated interactions sourced from various biological databases.

69 Views

Create Graph: We collected asymmetric drug-drug interaction (DDI) entries from DrugBank version 5.1.12, released on March 14, 2024. After double-checking the entries, we removed drugs with incorrect SMILES strings and drugs that could not be represented by Morgan fingerprints. This filtering resulted in a dataset containing 1,752 drugs and 508,512 asymmetric interactions. We then organized the DDI entries into a directed interaction network, in which each directed edge represents an asymmetric interaction between two drugs.
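As a rough illustration of the last step, the sketch below builds a directed network from (source, target) pairs using plain Python adjacency sets. The function name `build_directed_network` and the drug identifiers are hypothetical placeholders, not part of the dataset or of DrugBank, and the actual construction used by the authors may differ.

```python
from collections import defaultdict


def build_directed_network(entries):
    """Build a directed network from (source_drug, target_drug) pairs.

    Returns the set of nodes and a dict mapping each source drug to the
    set of drugs it points to. An edge a -> b does not imply b -> a.
    """
    successors = defaultdict(set)
    nodes = set()
    for source, target in entries:
        successors[source].add(target)
        nodes.update((source, target))
    return nodes, dict(successors)


# Placeholder identifiers for illustration only (not real DrugBank IDs).
entries = [
    ("drug_A", "drug_B"),
    ("drug_B", "drug_C"),
    ("drug_A", "drug_C"),
]

nodes, successors = build_directed_network(entries)
edge_count = sum(len(targets) for targets in successors.values())
print(len(nodes), "drugs,", edge_count, "directed interactions")
```

Storing successors as sets keeps duplicate entries from inflating the edge count, and keeping only one direction per entry preserves the asymmetry of the interactions.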

68 Views

Training machine learning or deep learning models for cybersecurity applications and testing their accuracy requires gathering and analyzing data from various sources, including the Internet of Things (IoT) and especially the Industrial IoT (IIoT). Reducing high-dimensional feature spaces and choosing significant features and assessments from these sources remain major challenges when investigating them. This study introduces a new IIoT system dataset, UKMNCT_IIoT_FDIA, which gathers network, operating system, and telemetry data.

688 Views

Accurate and spatiotemporally seamless soil moisture (SM) products are important for hydrological drought monitoring and agricultural water management. Currently, physically based process models with data assimilation are widely used to generate global seamless SM products, such as Soil Moisture Active Passive Level 4 (SMAP L4), the land component of the fifth generation of European Reanalysis (ERA5-Land), and the Global Land Data Assimilation System Noah (GLDAS-Noah).

42 Views

The increasing number of wildfires damages nature and human life, making the early detection of wildfires in complex outdoor environments critical. With the advancement of drones and remote sensing technology, infrared cameras have become essential for wildfire detection. However, as the demand for higher accuracy in detection algorithms grows, the detection model's size and computational costs increase, making it challenging to deploy high-precision detection algorithms on edge computing devices onboard drones for real-time fire detection.

167 Views

The PermGuard dataset is a carefully crafted Android Malware dataset that maps Android permissions to exploitation techniques, providing valuable insights into how malware can exploit these permissions. It consists of 55,911 benign and 55,911 malware apps, creating a balanced dataset for analysis. APK files were sourced from AndroZoo, including applications scanned between January 1, 2019, and July 1, 2024. A novel construction method extracts Android permissions and links them to exploitation techniques, enabling a deeper understanding of permission misuse.

629 Views

In the captured image, a drone is seen in flight, displaying its advanced technological features and capabilities. The image highlights the drone's robust design and aerodynamic structure, which are essential for its diverse applications in research and development. Drones, also known as Unmanned Aerial Vehicles (UAVs), are increasingly being utilized in various fields due to their ability to collect data from hard-to-reach or hazardous areas.

298 Views

This dataset includes six variables: sea surface temperature (SST), sea surface height, sea surface salinity, sea surface density, and current velocity in two directions. These physical variables are obtained from high-resolution observations, which can offer important insight into the physical processes that affect SST variations. The study area spans from 5°N to 5°S in latitude and from 160°W to 170°W in longitude. The data have a spatial resolution of 0.05°.
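To get a feel for the grid size implied by these bounds, the sketch below counts grid points per axis. It assumes grid nodes fall on both boundaries of the study area, which the description does not state; a cell-centered grid would have one fewer point per axis. The function name `grid_points` is illustrative only.

```python
def grid_points(start_deg, end_deg, resolution_deg):
    """Number of grid nodes between two bounds, endpoints included.

    Assumes nodes sit exactly on both boundaries (an assumption, not
    something the dataset description confirms).
    """
    span = abs(end_deg - start_deg)
    # round() guards against floating-point error in the division.
    return round(span / resolution_deg) + 1


RESOLUTION_DEG = 0.05

# South and west are written as negative degrees.
lat_points = grid_points(-5.0, 5.0, RESOLUTION_DEG)
lon_points = grid_points(-170.0, -160.0, RESOLUTION_DEG)
total_points = lat_points * lon_points

print(lat_points, "x", lon_points, "=", total_points, "grid points per variable")
```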

- Categories:

40 Views

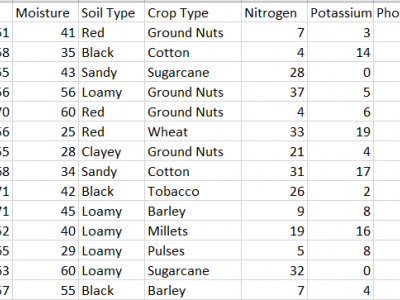

40 ViewsThis dataset provides comprehensive data for predicting the most suitable fertilizer for various crops based on environmental and soil conditions. It includes environmental factors like temperature, humidity, and moisture, along with soil and crop types, and nutrient composition (Nitrogen, Potassium, and Phosphorous). The target variable is the recommended fertilizer name.

The data is already preprocessed and contains no null values.
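A claim like this is easy to verify after download. The sketch below counts missing values per column using only the standard library. The column names, the sample rows, and the set of tokens treated as null are all assumptions made for illustration; the real file's headers and values may differ.

```python
import csv
import io

# Tokens treated as missing (an assumption; adjust to the real file).
NULL_TOKENS = {"", "na", "nan", "null", "none"}


def count_missing(rows):
    """Return {column: number_of_missing_values} for an iterable of dict rows."""
    missing = {}
    for row in rows:
        for column, value in row.items():
            is_null = value is None or (
                isinstance(value, str) and value.strip().lower() in NULL_TOKENS
            )
            if is_null:
                missing[column] = missing.get(column, 0) + 1
    return missing


# Made-up sample with hypothetical column names, standing in for the real CSV.
sample_csv = io.StringIO(
    "temperature,humidity,moisture,soil_type,crop_type,"
    "nitrogen,potassium,phosphorus,fertilizer\n"
    "26,52,38,sandy,maize,37,0,0,fertilizer_a\n"
    "29,52,45,loamy,sugarcane,12,0,36,fertilizer_b\n"
)

rows = list(csv.DictReader(sample_csv))
missing = count_missing(rows)
print("columns with missing values:", missing or "none")
```

With a real file, replace `sample_csv` with an open file handle; an empty result is consistent with the statement above.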

1741 Views