Computer Vision

This folder contains code, a dataset, and models.

18 Views

Perth-WA is a localization dataset that provides 6DoF annotations in 3D point cloud maps. The data comprises a LiDAR map of a 4 km square region of the Perth Central Business District (CBD) in Western Australia. The scenes contain commercial structures, residential areas, food streets, complex routes, a hospital building, and more.
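As a rough illustration of what a 6DoF annotation encodes, the sketch below stores a pose as a translation plus roll/pitch/yaw angles and uses it to map a sensor-frame point into the map frame. The `Pose6DoF` class, its field names, and the Z-Y-X Euler convention are assumptions made for this example; the dataset's actual annotation format is not described here and may differ (for instance, it may use quaternions).

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    """Hypothetical 6DoF pose: translation (x, y, z) and rotation (roll, pitch, yaw) in radians."""
    x: float
    y: float
    z: float
    roll: float
    pitch: float
    yaw: float

    def rotation_matrix(self):
        """Return the 3x3 rotation R = Rz(yaw) * Ry(pitch) * Rx(roll) as nested lists."""
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]

    def transform_point(self, p):
        """Map a point from the sensor frame into the map frame: R * p + t."""
        r = self.rotation_matrix()
        t = (self.x, self.y, self.z)
        return tuple(
            sum(r[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)
        )


# Example: sensor located at (10, 20, 0) in the map, rotated 90 degrees about the vertical axis.
pose = Pose6DoF(x=10.0, y=20.0, z=0.0, roll=0.0, pitch=0.0, yaw=math.pi / 2)
mapped = pose.transform_point((1.0, 0.0, 0.0))
```

Here a point one metre ahead of the sensor lands one metre along the map's y axis from the sensor position, which is the kind of check a localization pipeline might run against ground-truth poses.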

406 Views

The ability to perceive human facial emotions is an essential feature of various multi-modal applications, especially in intelligent human-computer interaction (HCI). In recent decades, considerable effort has gone into research on automatic facial emotion recognition (FER). However, most existing FER methods focus only on either basic emotions, such as the seven or eight standard categories (e.g., happiness, anger, and surprise), or abstract dimensions (valence, arousal, etc.), while neglecting the rich nature of emotion statements.

6507 Views

Image-coordinate data for the four edges of an AprilTag.

54 Views

Fabdepth HMI is designed for hand gesture detection in human-machine interaction. It contains a total of 8 gestures performed by 150 different individuals, ranging from toddlers to senior citizens, which adds diversity to the dataset. The gestures are available in 3 formats: resized, foreground-background separated, and depth-estimated images. Video recordings of 150 samples are also included. Researchers may choose the combination of data modalities that suits their application.

271 Views

Solar energy production has grown significantly in recent years in the European Union (EU), accounting for 12% of the total in 2022. The growth can be attributed to the increasing adoption of solar photovoltaic (PV) panels, which have become a cost-effective and efficient means of energy production, supported by government policies and incentives. The maturity of solar technologies has also led to a decrease in the cost of solar energy, making it more competitive with other energy sources.

886 Views

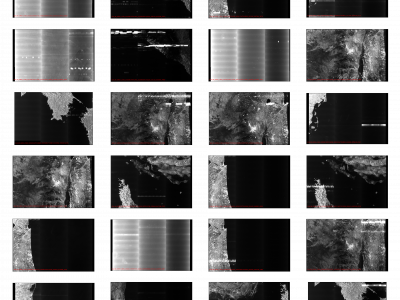

Synthetic Aperture Radar (SAR) satellite images are increasingly used for Earth observation. While SAR images are usable in most conditions, they occasionally suffer degradation from interfering signals emitted by external radars, known as Radio Frequency Interference (RFI). RFI-affected images are often either discarded from further analysis or pre-processed to remove the RFI.

240 Views

As in a typical change-detection dataset, the images fall into three parts: the image before the change, the image after the change, and the label image showing the changed area. This dataset is characterized by significant seasonal differences between bi-temporal image pairs, which makes up for some of the deficiencies in existing datasets. The labels include some irregular changes, such as the appearance and disappearance of cars, but do not include seasonal changes, such as changes in the ground surface caused by snowfall.
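To make the before/after/label structure concrete, here is a minimal sketch of how such a triplet might be scored. It uses naive per-pixel differencing as a stand-in detector and intersection-over-union (IoU) against the label; the function names, the threshold, and the tiny grayscale grids are all illustrative assumptions, not part of the dataset or of any method its authors describe.

```python
def change_mask(before, after, threshold):
    """Naive baseline: flag a pixel as changed when |after - before| exceeds the threshold."""
    return [
        [1 if abs(a - b) > threshold else 0 for b, a in zip(row_b, row_a)]
        for row_b, row_a in zip(before, after)
    ]


def iou(pred, label):
    """Intersection-over-union between two binary masks of equal shape."""
    inter = 0
    union = 0
    for row_p, row_l in zip(pred, label):
        for p, l in zip(row_p, row_l):
            inter += 1 if (p and l) else 0
            union += 1 if (p or l) else 0
    # Two empty masks agree perfectly, so report 1.0 in that case.
    return inter / union if union else 1.0


# Toy 2x3 grayscale pair (made-up values).
before = [[10, 10, 10],
          [10, 10, 10]]
after = [[10, 200, 10],   # large change: e.g. a car appears (labelled)
         [10, 10, 90]]    # moderate change: e.g. snow cover (not labelled)
label = [[0, 1, 0],
         [0, 0, 0]]

pred = change_mask(before, after, threshold=50)
score = iou(pred, label)
```

In this toy case the differencing baseline also flags the unlabelled seasonal pixel, so the IoU drops to 0.5. That is the sort of false positive that bi-temporal pairs with strong seasonal differences are likely to expose.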

910 Views

Handwriting is one of the most consequential inventions in human history. Through writing, we convey our thoughts, make business agreements, render the world understandable, and simplify tasks that were once harder. Determining gender from offline handwriting is an applied research problem in forensics, psychology, and security applications, and the need is growing as technology evolves. The general problem of gender detection from handwriting poses many difficulties arising from interpersonal and intrapersonal differences.

1137 Views
