Computer Vision

Existing datasets lack the diversity required to train a model that performs equally well in real fields under varying environmental conditions. To address this limitation, we propose collecting a small amount of in-field data and using a GAN to generate synthetic data for training the deep learning network. To demonstrate the proposed method, a maize dataset, 'IIITDMJ_Maize', was collected using a drone camera under different weather conditions, including both sunny and cloudy days. The recorded video was sampled into image frames, which were then resized to 224 × 224 pixels.
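The frame sampling and resizing step can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not give the sampling interval or the interpolation method, so the `step` value and the nearest-neighbour resize are hypothetical choices, and the synthetic `frame` stands in for a decoded video frame. A real pipeline would more likely rely on a library such as OpenCV for decoding and resizing.

```python
def sample_frame_indices(total_frames, step):
    """Return the indices kept when taking every `step`-th frame."""
    if step <= 0:
        raise ValueError("step must be positive")
    return list(range(0, total_frames, step))


def resize_nearest(image, out_h=224, out_w=224):
    """Nearest-neighbour resize of an image stored as a list of pixel rows."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


# Hypothetical stand-in for one decoded frame: a 448 x 672 grayscale gradient.
frame = [[(x + y) % 256 for x in range(672)] for y in range(448)]

# Assumed sampling interval of 30 frames (not stated in the abstract).
kept = sample_frame_indices(total_frames=100, step=30)
resized = resize_nearest(frame)  # 224 rows of 224 pixels each
```

Nearest-neighbour sampling keeps the sketch dependency-free; smoother interpolation is usually preferable when downscaling images for training.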

577 Views

Scene text detection and recognition have attracted much attention in recent years because of their potential applications. Detecting and recognizing texts in images may suffer from scene complexity and text variations. Some of these problematic cases are included in popular benchmark datasets, but only to a limited extent. In this work, we investigate the problem of scene text detection and recognition in a domain with extreme challenges.

120 Views

Scene text detection and recognition have attracted much attention in recent years because of their potential applications. Detecting and recognizing texts in images may suffer from scene complexity and text variations. Some of these problematic cases are included in popular benchmark datasets, but only to a limited extent. In this work, we investigate the problem of scene text detection and recognition in a domain with extreme challenges.

40 Views

The IAMCV Dataset was acquired as part of the Interaction of Autonomous and Manually-Controlled Vehicles project, funded by the Austrian Science Fund (FWF). It is primarily centred on inter-vehicle interactions and captures a wide range of road scenes in different locations across Germany, including roundabouts, intersections, and highways. These locations were carefully selected to encompass various traffic scenarios, representative of both urban and rural environments.

523 Views

This dataset, the Light Interference Event Dataset (LIED), is presented in the article 'Identifying Light Interference in Event-Based Vision'. LIED covers three categories of light interference: strobe light sources, non-strobe light sources, and scattered or reflected light. To capture more realistic scenarios, the dataset includes dynamic objects and recordings with both a static and a moving camera. LIED was recorded with a DAVIS346 sensor and provides both frames and events at a resolution of 346 × 260.

95 Views

The dataset comprises a diverse collection of images featuring windows alongside various artificial light sources, such as bulbs, LEDs, and tube lights. Each image captures the interplay of natural and artificial illumination, offering a rich visual spectrum that encompasses different lighting scenarios. This compilation is invaluable for applications ranging from architectural design and interior decor to computer vision and image processing.

117 Views

Investigating how people perceive virtual reality videos in the wild (i.e., those captured by everyday users) is a crucial and challenging task in VR-related applications due to complex authentic distortions localized in space and time. Existing panoramic video databases only consider synthetic distortions, assume fixed viewing conditions, and are limited in size. To overcome these shortcomings, we construct the VR Video Quality in the Wild (VRVQW) database, which is one of the first of its kind, and contains 502 user-generated videos with diverse content and distortion characteristics.

120 Views

The STP dataset targets Arabic text detection on traffic panels in the wild. It was collected in Sfax, Tunisia, the country's second-largest city after the capital. A total of 506 images were collected manually, one by one, each presenting Arabic text detection challenges found in natural scene images and reflecting the real complexity of 15 different routes as well as ring roads, roundabouts, intersections, an airport, and highways.

244 Views

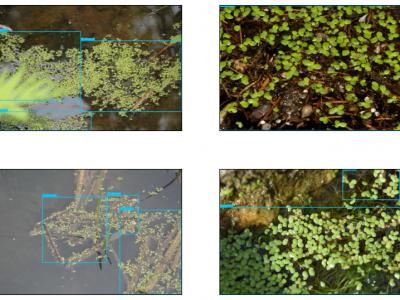

Deficient domestic wastewater management, industrial waste, and floating debris are among the leading contributors to inland water pollution. The surplus of minerals and nutrients in heavily contaminated zones can lead to infestation by invasive weeds. Lemnaceae, commonly known as duckweed, is a family of floating plants that have no leaves or stems and form dense, fast-growing colonies. If not controlled, duckweed forms a green layer on the water surface and deprives fish and other organisms of oxygen and sunlight.

188 Views

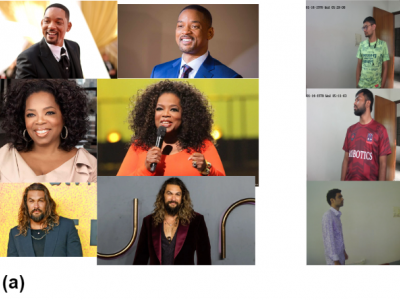

FaceEngine is a face recognition database for use in CCTV-based video surveillance systems. The dataset contains high-resolution face images of around 500 celebrities, along with images captured by a CCTV camera. Each person's folder holds more than 10 images of that person, from which face features can be extracted. The dataset also includes test videos that can be used to test a system. The high-resolution images under each unique ID may help in testing CCTV surveillance systems or training face detection models.

1053 Views
