.csv

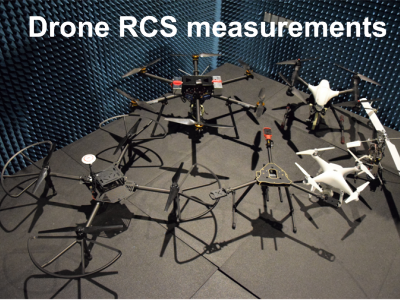

The dataset contains measurement results of Radar Cross Section of different Unmanned Aerial Vehicles at 26-40 GHz. The measurements have been performed fro quasi-monostatic case (when the transmitter and receiver are spatially co-located) in the anechoic chamber. The data shows how radio waves are scattered by different UAVs at the specified frequency range.

- Categories:

8195 Views

8195 Views

We introduce a dataset concerning electric-power consumption-related features registed in seven main municipalities of Nariño, Colombia, from 2010 to 2016. The dataset consists of 4423 socio-demographic characteristics, and 6 power-consumption-referred measured values. Data were fully collected by the company Centrales Eléctricas de Nariño (CEDNEAR) according to the client consumption records.

- Categories:

932 Views

932 Views

Traditional Static Timing Analysis (STA) assumes only single input switches at a time with the side input held at non-controlling value. This introduces unnecessary pessimism or optimism which may cause degradation of performance or chip failure. Modeling Multi-Input Switching (MIS) requires a good amount of simulations hence we provide a dataset comprising of SPICE simulations done on 2 input NAND and NOR gate.

- Categories:

294 Views

294 ViewsThe development of technology also influences changes in campaign patterns. Campaign activities are part of the process of Election of Regional Heads. The aim of the campaign is to mobilize public participation, which is carried out directly or through social media. Social media becomes a channel for interaction between candidates and their supporters. Interactions that occur during the campaign period can be one indicator of the success of the closeness between voters and candidates. This study aims to get the pattern of campaign interactions that occur on Twitter social media channels.

- Categories:

251 Views

251 ViewsThis dataset is part of my PhD research on malware detection and classification using Deep Learning. It contains static analysis data: Top-1000 imported functions extracted from the 'pe_imports' elements of Cuckoo Sandbox reports. PE malware examples were downloaded from virusshare.com. PE goodware examples were downloaded from portableapps.com and from Windows 7 x86 directories.

- Categories:

7592 Views

7592 ViewsThis dataset is part of my PhD research on malware detection and classification using Deep Learning. It contains static analysis data: Raw PE byte stream rescaled to a 32 x 32 greyscale image using the Nearest Neighbor Interpolation algorithm and then flattened to a 1024 bytes vector. PE malware examples were downloaded from virusshare.com. PE goodware examples were downloaded from portableapps.com and from Windows 7 x86 directories.

- Categories:

3359 Views

3359 ViewsThis dataset is part of my PhD research on malware detection and classification using Deep Learning. It contains static analysis data (PE Section Headers of the .text, .code and CODE sections) extracted from the 'pe_sections' elements of Cuckoo Sandbox reports. PE malware examples were downloaded from virusshare.com. PE goodware examples were downloaded from portableapps.com and from Windows 7 x86 directories.

- Categories:

3079 Views

3079 Views

Dataset consists of various open GIS data from the Netherlands as Population Cores, Neighbhourhoods, Land Use, Neighbourhoods, Energy Atlas, OpenStreetMaps, openchargemap and charging stations. The data was transformed for buffers with 350m around each charging stations. The response variable is binary popularity of a charging pool.

- Categories:

1437 Views

1437 Views

Age, fasting and postprandial glucose and insulin levels of 3218 venezuelan women.

The data was retrieved in the Clinical Research Laboratory of the Caracas University Hospital, Venezuela, between 2009 and 2013

- Categories:

1010 Views

1010 Views