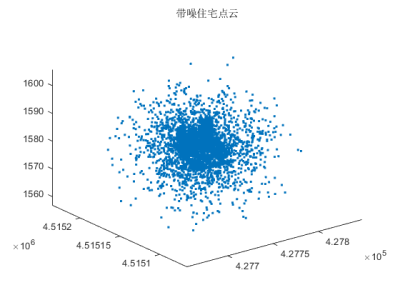

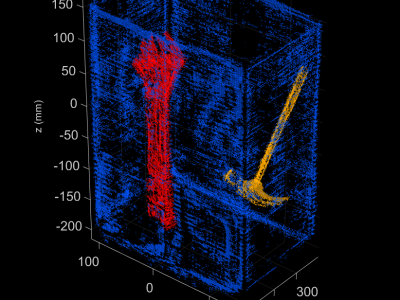

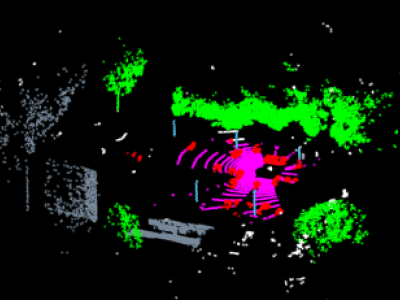

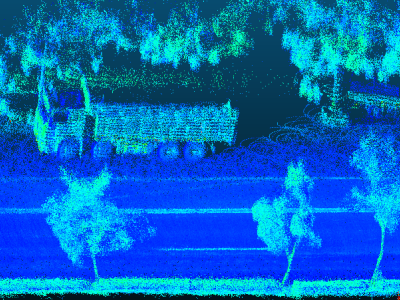

Pavement planar coefficients are critical for a wide range of civil engineering applications, including 3D city modeling, extraction of pavement design parameters, and assessment of pavement conditions. However, existing plane-fitting methods often struggle to maintain accuracy and stability in complex road environments, particularly when the point cloud is contaminated by non-pavement objects such as trees, curbstones, pedestrians, and vehicles. This paper presents REoPC, a robust two-stage method for estimating pavement planar coefficients from road point clouds acquired with hybrid solid-state LiDAR.
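To make "planar coefficients" concrete: a plane can be written as a·x + b·y + c·z + d = 0, and the task is to recover (a, b, c, d) for the pavement surface even when many points belong to other objects. The sketch below is a generic RANSAC plane fit in plain Python, shown only as an illustration of robust plane fitting; it is not the REoPC method, whose two stages are not described here. All function names, the synthetic data, and the threshold values are assumptions made for this example.

```python
import random


def plane_from_points(p, q, r):
    """Plane through three points as (a, b, c, d) with a unit normal.

    Returns None when the points are (nearly) collinear.
    """
    ux, uy, uz = q[0] - p[0], q[1] - p[1], q[2] - p[2]
    vx, vy, vz = r[0] - p[0], r[1] - p[1], r[2] - p[2]
    # Normal vector = cross product of the two edge vectors.
    a = uy * vz - uz * vy
    b = uz * vx - ux * vz
    c = ux * vy - uy * vx
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-12:
        return None
    a, b, c = a / norm, b / norm, c / norm
    d = -(a * p[0] + b * p[1] + c * p[2])
    return a, b, c, d


def ransac_plane(points, threshold=0.05, iterations=200, seed=0):
    """Return (plane, inlier_count) for the candidate plane with most inliers.

    threshold is the maximum point-to-plane distance (same unit as the
    points) for a point to count as an inlier. Values are illustrative.
    """
    rng = random.Random(seed)
    best, best_count = None, -1
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        count = sum(
            1 for x, y, z in points if abs(a * x + b * y + c * z + d) <= threshold
        )
        if count > best_count:
            best, best_count = plane, count
    return best, best_count


def make_demo_points(seed=1):
    """Synthetic scene (assumed): a gently sloped road plus raised clutter."""
    rng = random.Random(seed)
    road = [
        (x, y, 0.02 * x + rng.uniform(-0.01, 0.01))
        for x, y in ((rng.uniform(0, 10), rng.uniform(-2, 2)) for _ in range(300))
    ]
    clutter = [
        (rng.uniform(0, 10), rng.uniform(-2, 2), rng.uniform(0.3, 2.0))
        for _ in range(60)
    ]
    return road + clutter


if __name__ == "__main__":
    plane, inliers = ransac_plane(make_demo_points())
    print("plane (a, b, c, d):", plane)
    print("inliers:", inliers)
```

On this synthetic scene the recovered normal should be close to vertical, since the raised clutter points fall outside the distance threshold and do not vote for the winning plane. Real road scans are harder: curbstones and vehicle undersides lie close to the pavement, which is the kind of contamination the abstract says degrades existing methods.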
