Semantic Segmentation

This work presents a specialized dataset designed to advance autonomous navigation in hiking trail and off-road natural environments. The dataset comprises over 1,250 images (640x360 pixels) captured using a camera mounted on a tele-operated robot on hiking trails. Images are manually labeled into eight terrain classes: grass, rock, trail, root, structure, tree trunk, vegetation, and rough trail. The dataset is provided in its original form without augmentations or resizing, allowing end-users flexibility in preprocessing.


607 Views

This dataset, titled "Synthetic Sand Boil Dataset for Levee Monitoring: Generated Using DreamBooth Diffusion Models," provides a comprehensive collection of synthetic images designed to facilitate the study and development of semantic segmentation models for sand boil detection in levee systems. Sand boils, a critical factor in levee integrity, pose significant risks during floods, necessitating accurate and efficient monitoring solutions.


344 Views

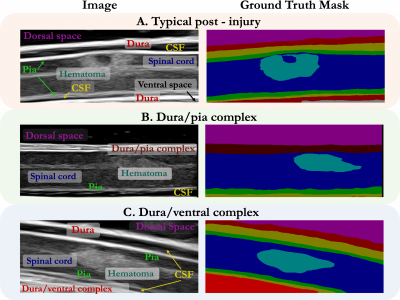

While deep learning has catalyzed breakthroughs across numerous domains, its broader adoption in clinical settings is inhibited by the costly and time-intensive nature of data acquisition and annotation. To further facilitate medical machine learning, we present an ultrasound dataset of 10,223 Brightness-mode (B-mode) images consisting of sagittal slices of porcine spinal cords (N=25) before and after a contusion injury.


171 Views


We generated an IV fluid-specific dataset to maximize measurement accuracy. We developed our system as a smartphone application that uses the phone's built-in camera and is intended for nurses or patients. Users should be able to capture the surface of the fluid in the container by adjusting the smartphone's position or angle until the front of the container is in view. We therefore collected front views of IV fluid containers when generating the training dataset.


20 Views


When fuel materials for high-temperature gas-cooled nuclear reactors are quantification tested, significant analysis is required to establish their stability under various proposed accident scenarios, as well as to assess degradation over time. Typically, samples are examined by lab assistants trained to capture micrograph images used to analyze the degradation of a material. Analysis of these micrographs still requires manual intervention, which is time-consuming and can introduce human error.


279 Views

The CAD-EdgeTune dataset was acquired using a Husarion ROSbot 2.0 and a ROSbot 2.0 Pro in a suburban university environment, with the collection speed set to 5 frames per second. The data can be split into noon, dusk, and dawn subgroups to represent the surroundings under different lighting conditions. We assembled 17 sequences totaling 8080 frames, of which 1619 were manually annotated using an open-source pixel annotation program. Because adjacent frames are highly similar to one another, we annotate every fifth image.
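The every-fifth-frame selection described above can be sketched as follows. This is a minimal illustration, not the authors' tooling: the function name `frames_to_annotate` and the sequence names and lengths are hypothetical. Sampling one frame in five from 8080 frames gives about 8080 / 5 = 1616 frames, close to the 1619 reported. Per-sequence rounding could account for the small difference, though the source does not say so.

```python
from typing import Dict, List


def frames_to_annotate(n_frames: int, step: int = 5) -> List[int]:
    """Return the zero-based indices of every `step`-th frame in a sequence."""
    return list(range(0, n_frames, step))


# Hypothetical sequence lengths, for illustration only.
sequences: Dict[str, int] = {"noon_01": 12, "dusk_01": 10}

# Sample each sequence independently, so each one starts at its own frame 0.
selected = {name: frames_to_annotate(n) for name, n in sequences.items()}
total_selected = sum(len(indices) for indices in selected.values())

print(selected)
print(total_selected)
```

Because each sequence is sampled independently, a sequence whose length is not a multiple of five contributes one extra frame, so the total can slightly exceed the overall frame count divided by five.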


178 Views

We release MarsData-V2, a rock segmentation dataset of real Martian scenes for the training of deep networks, extended from our previously published MarsData. The raw unlabeled RGB images of MarsData-V2 were collected by a Mastcam camera of the Curiosity rover on Mars between August 2012 and November 2018.


1696 Views

The dataset contains UAV imagery and fracture interpretation of rock outcrops acquired in Praia das Conchas, Cabo Frio, Rio de Janeiro, Brazil. Along with georeferenced .geotiff images, the dataset contains filtered 500 x 500 .png tiles containing only scenes with fracture data, along with .png binary masks for semantic segmentation and original georeferenced shapefile annotations. This data can be useful for segmentation and extraction of geological structures from UAV imagery, for evaluating computer vision methodologies or machine learning techniques.
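One way to picture the filtering of tiles down to "only scenes with fracture data" is the dependency-free sketch below. It assumes that a tile is kept when its binary mask contains at least one nonzero pixel and that incomplete edge tiles are dropped. Both are assumptions, since the source does not describe the actual procedure. The names `tile_boxes` and `tiles_with_fractures` are hypothetical.

```python
from typing import Iterator, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (row_start, col_start, row_end, col_end)


def tile_boxes(height: int, width: int, tile: int = 500) -> Iterator[Box]:
    """Yield non-overlapping square tiles; partial edge tiles are skipped (assumption)."""
    for r in range(0, height - tile + 1, tile):
        for c in range(0, width - tile + 1, tile):
            yield (r, c, r + tile, c + tile)


def tiles_with_fractures(mask: Sequence[Sequence[int]], tile: int = 500) -> List[Box]:
    """Return the boxes of tiles whose binary mask has at least one nonzero pixel."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    kept: List[Box] = []
    for r0, c0, r1, c1 in tile_boxes(height, width, tile):
        if any(any(row[c0:c1]) for row in mask[r0:r1]):
            kept.append((r0, c0, r1, c1))
    return kept


# Toy example: a 4 x 4 mask split into 2 x 2 tiles, with one fracture pixel.
toy_mask = [
    [0, 0, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
kept = tiles_with_fractures(toy_mask, tile=2)
print(kept)  # [(0, 2, 2, 4)]
```

In practice the mask would be loaded from a .png file with an image library, and the same boxes would be used to crop the matching image tile.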


589 Views
