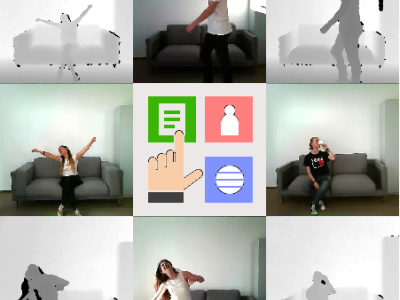

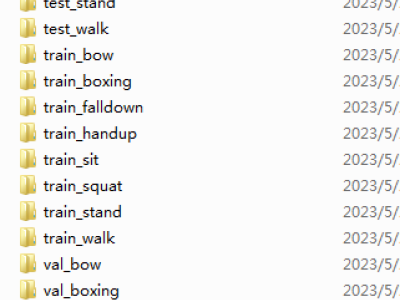

Our dataset covers 8 actions, 7 people (P1-P7), and 3 experimental environments (Room-A, Room-B, Room-C). Each environment has 3 directions, and each person performs each action 5 times in each direction. This gives 8 × 3 × 3 × 5 = 360 samples per person and 360 × 7 = 2520 samples in total.
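
As a quick sanity check on these counts, the sketch below enumerates every (person, room, direction, action, repetition) combination. The person and room labels come from the description above; the integer indices for directions, actions, and repetitions, along with all variable names, are placeholders chosen for illustration and do not reflect the dataset's actual file layout or labels.

```python
from itertools import product

# Labels taken from the dataset description.
people = [f"P{i}" for i in range(1, 8)]   # P1..P7
rooms = ["Room-A", "Room-B", "Room-C"]

# Placeholder indices (assumption): the real direction and action labels
# are not specified here, so plain integers stand in for them.
directions = range(3)    # 3 directions per room
actions = range(8)       # 8 actions
repetitions = range(5)   # 5 samples per action, person, and direction

# One tuple per recorded sample.
samples = list(product(people, rooms, directions, actions, repetitions))

samples_per_person = len(samples) // len(people)
total_samples = len(samples)

print(samples_per_person)  # 360
print(total_samples)       # 2520
```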

- Categories: