PRECIS HAR

- Citation Author(s): Irina Mocanu (University POLITEHNICA of Bucharest), Bogdan Cramariuc (IT Center for Science and Technology)

- Submitted by: Ana-Cosmina Popescu


- DOI: 10.21227/mene-ck48


Abstract

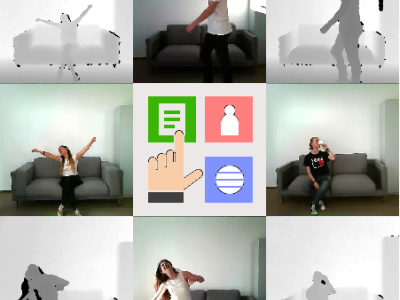

PRECIS HAR is an RGB-D dataset for human activity recognition, captured with an Orbbec Astra Pro 3D camera. It covers 16 different activities (stand up, sit down, sit still, read, write, cheer up, walk, throw paper, drink from a bottle, drink from a mug, move hands in front of the body, move hands close to the body, raise one hand up, raise one leg up, fall from bed, and faint), performed by 50 subjects. There are 800 videos in total (one per subject and activity), each with both RGB and depth recordings.

Apart from the subject, no other people appear in the videos as environmental noise, and the background does not change. However, the dataset presents the following difficulties:

- Small camera occlusions: from time to time, we added small occlusions to the RGB stream (by moving the mouse cursor from one part of the screen to another), which simulate the presence of external, uncontrolled environmental factors; for example, in a real scenario, a fly could pass in front of the camera.

- No stage directions: while filming the dataset, we did not instruct participants on how to perform each action; we only told them its name. As a result, each participant interpreted the actions slightly differently, in a way closer to how a person would naturally behave.

- Variable video length: because we did not constrain how participants performed each action, the videos have different lengths.

- Different lighting conditions: the light source varies; some scenes have dim light, while in others the sun shines directly on parts of the scene. These variations are visible only in the RGB stream, since the depth stream is not affected by lighting changes. This highlights the value of also examining the depth stream when classifying videos from RGB-D cameras.

When citing this dataset, please cite both the dataset and the article that introduces it: "Fusion Mechanisms for Human Activity Recognition using Automated Machine Learning". Full citation details will be added here once the article is published.

Instructions:

The dataset consists of RGB data (.mp4 files) and depth data (.oni files), provided in both cropped and raw versions. The cropped videos are shorter and contain only the seconds of interest, i.e., the part where the activity is performed. The raw videos are longer and contain all the footage captured while filming the dataset. We include both variants because each can be useful for different applications.

Video names follow the pattern `<subject_id>_<activity_id>.<extension>`, where:

- `<subject_id>` is an integer between 1 and 50;

- `<activity_id>` is an integer between 1 and 16, with the following mapping: 1 = stand up, 2 = sit down, 3 = sit still, 4 = read, 5 = write, 6 = cheer up, 7 = walk, 8 = throw paper, 9 = drink from a bottle, 10 = drink from a mug, 11 = move hands in front of the body, 12 = move hands close to the body, 13 = raise one hand up, 14 = raise one leg up, 15 = fall from bed, 16 = faint;

- `<extension>` is `mp4` or `oni`, depending on the type of data (RGB or depth).
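As an illustration of the naming scheme, the sketch below turns a file name into its subject, activity label, and modality, and lists the 800 names expected per modality (50 subjects × 16 activities). It is a minimal sketch, not an official loader. It assumes IDs are written without zero padding (the description does not say), and the helper names `parse_name` and `expected_names` are hypothetical.

```python
import re
from pathlib import Path

# Activity IDs and labels, as listed in the dataset instructions.
ACTIVITIES = {
    1: "stand up", 2: "sit down", 3: "sit still", 4: "read",
    5: "write", 6: "cheer up", 7: "walk", 8: "throw paper",
    9: "drink from a bottle", 10: "drink from a mug",
    11: "move hands in front of the body",
    12: "move hands close to the body",
    13: "raise one hand up", 14: "raise one leg up",
    15: "fall from bed", 16: "faint",
}

# <subject_id>_<activity_id>.<extension>; mp4 = RGB, oni = depth.
NAME_RE = re.compile(r"^(\d+)_(\d+)\.(mp4|oni)$")


def parse_name(filename: str) -> dict:
    """Hypothetical helper: split a PRECIS HAR file name into label fields."""
    match = NAME_RE.match(Path(filename).name)  # accepts full paths too
    if match is None:
        raise ValueError(f"unexpected file name: {filename!r}")
    subject_id, activity_id = int(match.group(1)), int(match.group(2))
    if not 1 <= subject_id <= 50 or activity_id not in ACTIVITIES:
        raise ValueError(f"ID out of range in: {filename!r}")
    return {
        "subject_id": subject_id,
        "activity_id": activity_id,
        "activity": ACTIVITIES[activity_id],
        "modality": "rgb" if match.group(3) == "mp4" else "depth",
    }


def expected_names(extension: str) -> set:
    """Hypothetical helper: all 50 x 16 = 800 names for one modality.

    Assumes IDs are not zero-padded in the file names.
    """
    return {f"{s}_{a}.{extension}" for s in range(1, 51) for a in ACTIVITIES}


if __name__ == "__main__":
    print(parse_name("12_9.mp4"))
    # {'subject_id': 12, 'activity_id': 9, 'activity': 'drink from a bottle', 'modality': 'rgb'}
```

Comparing `expected_names("mp4")` with the names actually present in a local copy of the data gives a quick completeness check; the folder layout of the download is not described here, so any directory path you use for that is an assumption.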

In order to manipulate .oni files, we recommend using pyoni.