Wearable Sensing

The Clarkson University Affective Data Set (CUADS) is a multi-modal affective dataset designed to support the development of machine learning models for automated emotion recognition. CUADS provides electrocardiogram (ECG), photoplethysmogram (PPG), and galvanic skin response (GSR) data from 38 participants, captured under controlled conditions using Shimmer3 ECG and GSR sensors. The signals were recorded while each participant viewed and rated 20 affective movie clips. CUADS also provides Big Five personality traits for each participant.

This dataset contains human motion data collected using inertial measurement units (IMUs), including accelerometer and gyroscope readings, from participants performing specific activities. The data was gathered under controlled conditions with verbal informed consent and includes diverse motion patterns that can be used for research in human activity recognition, wearable sensor applications, and machine learning algorithm development. Each sample is labeled and processed to ensure consistency, with raw and augmented data available for use.

With the continuous advancement of technology, small, portable physiological sensors worn on the body are gradually becoming part of daily life and are expected to greatly enhance quality of life. To further enrich the emotional physiological signals captured by portable wearable devices, we used the 14-channel portable EEG acquisition device Emotiv EPOC X, with emotional video clips as stimuli, to collect two emotional EEG datasets, EmoX1 and EmoX2, each from a separate group of 10 participants.

Public datasets based on peripheral physiological signals are currently limited, and there is a lack of emotion recognition (ER) datasets tailored to smart classroom scenarios. We therefore collected and constructed the I+ Lab Emotion (ILEmo) dataset, which is designed specifically for monitoring students' emotions in the classroom. The raw data of the ILEmo dataset were collected by the I+ Lab at Shandong University using custom multi-modal wristbands and computing suites.

- Categories:

232 Views

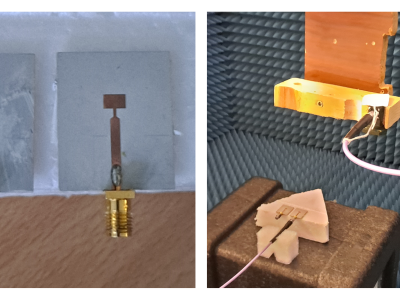

This dataset contains measurements of two microstrip patch antennas, collected using the facility described in [1]. The measurements allow a complete characterization of the field radiated by these antennas. These fields can be incorporated into enhanced microwave imaging algorithms that account for the fields radiated by the transmitting and receiving antennas of the microwave imaging system, such as the modified Delay and Sum algorithm [2] and the modified Phase Shift Migration imaging algorithm [3].

PPE Usage Dataset

This repository provides the Personal Protective Equipment (PPE) Usage Dataset, designed for training deep neural networks (DNNs). The dataset was collected using the EFR32MG24 microcontroller and the ICM-20689 inertial measurement unit, which features a 3-axis gyroscope and a 3-axis accelerometer.

The dataset includes data for four types of PPE: helmet, shirt, pants, and boots, categorized into three activity classes: carrying, still, and wearing.

Two publicly available datasets, the PASS and EmpaticaE4Stress databases, were utilised in this study. They were chosen because both used the same Empatica E4 device, which acquires a variety of signals, including PPG and EDA. The dataset consists of 1587 30-second PPG segments. Each segment was band-pass filtered (0.9–5 Hz) and then min-max normalized.
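
The preprocessing described above can be sketched as follows. This is a minimal, dependency-free illustration under stated assumptions, not the authors' pipeline. The description gives only the 0.9–5 Hz pass band. Here the band-pass is assumed to be a second-order high-pass at 0.9 Hz cascaded with a second-order low-pass at 5 Hz, using biquad coefficients from the RBJ Audio EQ Cookbook with Q = 1/sqrt(2). The 64 Hz sampling rate is also an assumption: it is the Empatica E4's nominal PPG (BVP) rate, but check it against the source databases. All function names are hypothetical.

```python
import math

# Assumptions (not stated in the dataset description): 64 Hz sampling rate,
# and a band-pass built from two cascaded second-order Butterworth-style biquads.
FS_HZ = 64.0
LOW_HZ, HIGH_HZ = 0.9, 5.0
BUTTERWORTH_Q = 1 / math.sqrt(2)


def biquad_coeffs(kind, f0, fs, q=BUTTERWORTH_Q):
    """Second-order low-/high-pass coefficients (RBJ Audio EQ Cookbook).

    Returns (b0, b1, b2, a1, a2), normalized so that a0 == 1.
    """
    w0 = 2 * math.pi * f0 / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    if kind == "lowpass":
        b0 = b2 = (1 - cos_w0) / 2
        b1 = 1 - cos_w0
    elif kind == "highpass":
        b0 = b2 = (1 + cos_w0) / 2
        b1 = -(1 + cos_w0)
    else:
        raise ValueError(f"unknown filter kind: {kind!r}")
    a0 = 1 + alpha
    return b0 / a0, b1 / a0, b2 / a0, (-2 * cos_w0) / a0, (1 - alpha) / a0


def apply_biquad(x, coeffs):
    """Filter a sequence with one biquad (direct form I, zero initial state)."""
    b0, b1, b2, a1, a2 = coeffs
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for xn in x:
        yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        out.append(yn)
    return out


def bandpass(x, low_hz, high_hz, fs):
    """High-pass at low_hz followed by low-pass at high_hz."""
    y = apply_biquad(x, biquad_coeffs("highpass", low_hz, fs))
    return apply_biquad(y, biquad_coeffs("lowpass", high_hz, fs))


def min_max(x):
    """Rescale to [0, 1]; a constant input maps to all zeros."""
    lo, hi = min(x), max(x)
    span = hi - lo
    if span == 0:
        return [0.0] * len(x)
    return [(v - lo) / span for v in x]


def preprocess_segment(ppg, fs=FS_HZ):
    """Band-pass filter, then min-max normalize, one PPG segment."""
    return min_max(bandpass(ppg, LOW_HZ, HIGH_HZ, fs))


if __name__ == "__main__":
    # Synthetic 30 s segment: DC offset + 1.2 Hz "pulse" + 20 Hz interference.
    n = int(30 * FS_HZ)
    raw = [2.0 + math.sin(2 * math.pi * 1.2 * i / FS_HZ)
           + 0.3 * math.sin(2 * math.pi * 20.0 * i / FS_HZ) for i in range(n)]
    seg = preprocess_segment(raw)
    print(len(seg), min(seg), max(seg))  # 1920 0.0 1.0
```

This filter is causal, so it slightly delays the waveform and has a short start-up transient at the beginning of each segment. A zero-phase (forward-backward) pass is a common alternative. The description does not state which variant was used.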

Prolonged sitting in a single position adversely affects the spine and leads to chronic problems that require extended therapy for recovery. The principal motivation of this study is to help maintain good posture, i.e., a position in which the body is correctly aligned and supported by an appropriate level of muscle tension, and to draw attention to the importance of good sitting posture for health. Commercially available wearable sensors offer several advantages, notably that they can be embedded in clothing.

This dataset contains inertial sensor and optical motion capture data from a trial in which 20 healthy adult participants performed various upper-limb movements. Each participant had an IMU and a cluster of reflective markers attached to the sternum, right upper arm, and right forearm (as shown in the attached image), and IMU and marker data were recorded simultaneously. The trial was carried out to investigate alternative sensor-to-segment calibration methods, but the data may also be useful for other areas of inertial sensor research. CAD files for the 3D-printed mounts are also included.
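
The description does not say which calibration methods were compared. As a generic illustration only, and not one of the trial's methods, the following sketch shows a simple way the two recorded streams can be related at a single static instant. It builds an orthonormal frame from three markers of one cluster, used here as a stand-in for the segment frame; in practice an anatomical frame usually needs additional landmarks. It then computes the fixed rotation between the IMU frame and that frame as R_SB = R_GS^T R_GB. The sketch assumes the IMU orientation and marker positions are time-synchronized and expressed in a common global frame. In practice the IMU's own reference frame must first be aligned with the motion-capture frame. The function names and marker layout are hypothetical.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (a[0] / n, a[1] / n, a[2] / n)


def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def frame_from_markers(p0, p1, p2):
    """Right-handed orthonormal frame from three non-collinear marker positions.

    x points from p0 to p1, z is normal to the plane of the three markers,
    and y = z cross x. Returns a 3x3 rotation matrix whose columns are the
    frame axes expressed in the motion-capture (global) frame.
    """
    x = normalize(sub(p1, p0))
    z = normalize(cross(x, sub(p2, p0)))
    y = cross(z, x)
    return [[x[i], y[i], z[i]] for i in range(3)]


def sensor_to_segment(r_global_sensor, r_global_segment):
    """Fixed offset R_SB = R_GS^T * R_GB (segment axes expressed in the sensor frame).

    Assumes both orientations are given in the same global frame.
    """
    return matmul(transpose(r_global_sensor), r_global_segment)


if __name__ == "__main__":
    # Hypothetical static-pose sample: three cluster markers (metres) and an
    # IMU orientation already expressed in the motion-capture frame.
    r_gb = frame_from_markers((0.0, 0.0, 0.0), (0.0, 0.1, 0.0), (-0.1, 0.0, 0.0))
    r_gs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # IMU aligned with global axes
    # With this layout the offset is a 90-degree rotation about z.
    print(sensor_to_segment(r_gs, r_gb))
```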

This dataset contains electrocardiography (ECG), electromyography (EMG), accelerometer, gyroscope, and magnetometer signals recorded with wearable equipment from 13 subjects in the following scenarios:

- Moving the weights horizontally at an angle of approximately 45°.

- Moving the weights vertically from the table to the floor and back.

- Moving the weights vertically from the table to the head and back.

- Rotating the wrist while holding the weights with the arm extended (see Figure 2).
