Computer Vision

Defect pattern recognition (DPR) of wafer maps is critical for determining the root causes of production defects and can provide insights for yield improvement in wafer foundries. During wafer fabrication, several types of defects can occur together on a single wafer; recognizing such combinations is called mixed-type defect DPR. Detecting mixed-type defects is much more complicated because the combinations can vary widely in defect type, position, angle, number of defects, and so on. Deep learning methods have proven to be a good choice for such complex pattern recognition problems.
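
Because several defect types can co-occur on one wafer, mixed-type DPR is naturally framed as multi-label classification: the target is a multi-hot vector with one slot per basic defect type, and a model emits one independent score per type instead of a single softmax class. The sketch below illustrates only that label encoding and decoding. It assumes a hypothetical set of eight basic defect types (the source does not list its label set) and a model that outputs one logit per type; the 0.5 threshold is likewise an assumption.

```python
import math
from typing import List, Sequence

# Hypothetical basic defect types; the source does not specify the label set.
DEFECT_TYPES: List[str] = [
    "Center", "Donut", "Edge-Loc", "Edge-Ring",
    "Loc", "Near-full", "Random", "Scratch",
]


def encode(defects: Sequence[str]) -> List[int]:
    """Multi-hot target: slot i is 1 if DEFECT_TYPES[i] is present on the wafer."""
    present = set(defects)
    unknown = present - set(DEFECT_TYPES)
    if unknown:
        raise ValueError(f"unknown defect types: {sorted(unknown)}")
    return [1 if t in present else 0 for t in DEFECT_TYPES]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def decode(logits: Sequence[float], threshold: float = 0.5) -> List[str]:
    """Score each type independently, so any subset of types can be predicted at once."""
    return [t for t, z in zip(DEFECT_TYPES, logits) if sigmoid(z) >= threshold]
```

For example, a wafer carrying both a center cluster and a scratch is encoded as `encode(["Center", "Scratch"])`, and at inference time every type whose score clears the threshold is reported, which is what lets a single prediction describe a mixed-type wafer.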

MapData is a globally diverse dataset spanning 233 geographic sampling points. It offers original high-resolution images ranging from 7,000×5,000 to 20,000×15,000 pixels. After rigorous cleaning, the dataset provides 121,781 aligned electronic map–visible image pairs (each standardized to 512×512 pixels) with hybrid manual-automated ground truth—addressing the scarcity of scalable multimodal benchmarks.
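
The source states that the pairs are standardized to 512×512 pixels but does not describe how they were cut from the original scenes. A minimal sketch, assuming non-overlapping tiling of an already co-registered map/visible pair held as row-major nested lists; all function names are hypothetical. The key point is that both images are cut with the same windows, which is what keeps each pair aligned.

```python
from typing import Iterator, List, Sequence, Tuple

TILE = 512  # MapData pairs are standardized to 512x512 pixels

Image = Sequence[Sequence[int]]  # row-major: image[y][x]


def tile_origins(width: int, height: int, tile: int = TILE,
                 stride: int = TILE) -> Iterator[Tuple[int, int]]:
    """Yield top-left (x, y) corners of full tile-sized windows.

    Windows that would cross the right or bottom border are skipped,
    so every crop is exactly tile x tile pixels.
    """
    for y in range(0, height - tile + 1, stride):
        for x in range(0, width - tile + 1, stride):
            yield x, y


def crop(image: Image, x: int, y: int, tile: int = TILE) -> List[List[int]]:
    """Return the tile x tile window whose top-left corner is (x, y)."""
    return [list(row[x:x + tile]) for row in image[y:y + tile]]


def paired_crops(map_img: Image, vis_img: Image,
                 tile: int = TILE) -> Iterator[Tuple[List[List[int]], List[List[int]]]]:
    """Cut the electronic map and the visible image with identical windows."""
    height, width = len(map_img), len(map_img[0])
    if (len(vis_img), len(vis_img[0])) != (height, width):
        raise ValueError("map and visible images must have the same size")
    for x, y in tile_origins(width, height, tile, tile):
        yield crop(map_img, x, y, tile), crop(vis_img, x, y, tile)
```

Under this non-overlapping scheme, a 7,000×5,000 scene yields 13 × 9 = 117 full tiles; border strips narrower than 512 pixels are simply dropped. An overlapping stride or padding would be an equally plausible choice.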

This dataset comprises 32-bit floating-point SAR images of coastal regions in TIFF format, together with ground truth masks that distinguish land from water. The covered regions include the Netherlands, London, Ireland, Spain, France, Lisbon, the USA, India, Africa, and Italy. The SAR images were acquired in Interferometric Wide (IW) mode with dual polarization at a spatial resolution of 10 m × 10 m.
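
Calm water usually appears dark in SAR imagery because it reflects the radar pulse away from the sensor, so a global threshold on the backscatter in decibels is a common baseline for land/water masks. The sketch below uses Otsu's method as that baseline; it is a swapped-in illustration, not the dataset authors' method. Assumptions: the pixels of one polarization band have already been read from the TIFF into a flat list, the values are linear backscatter (skip `to_db` if they are already in dB), and the ground truth mask uses 1 for water and 0 for land. The `iou` helper shows how a predicted mask could be scored against the provided masks.

```python
import math
from typing import List, Sequence


def to_db(values: Sequence[float], floor: float = 1e-6) -> List[float]:
    """Linear backscatter -> decibels; non-positive pixels are clamped to `floor`."""
    return [10.0 * math.log10(max(v, floor)) for v in values]


def otsu_threshold(values: Sequence[float], bins: int = 256) -> float:
    """Return the histogram cut that maximizes between-class variance (Otsu)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    sum_all = sum(h * c for h, c in zip(hist, centers))
    w0, sum0 = 0, 0.0
    best_var, best_t = -1.0, lo
    for i in range(bins - 1):
        # Class 0 = bins 0..i, class 1 = the remaining bins.
        w0 += hist[i]
        sum0 += hist[i] * centers[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (m0 - m1) ** 2
        if between > best_var:
            best_var, best_t = between, lo + (i + 1) * width
    return best_t


def water_mask(sigma0: Sequence[float]) -> List[int]:
    """1 = water (darker than the threshold), 0 = land; encoding is an assumption."""
    db = to_db(sigma0)
    t = otsu_threshold(db)
    return [1 if d < t else 0 for d in db]


def iou(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Intersection over union of the water class."""
    inter = sum(1 for p, g in zip(pred, truth) if p and g)
    union = sum(1 for p, g in zip(pred, truth) if p or g)
    return inter / union if union else 1.0
```

A single global threshold is easily confused by wind-roughened water or dark land surfaces, which is one reason a learned segmentation model trained on the ground truth masks would be expected to do better.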

TUROS-TS encompasses 5,357 Google Street View images with 8,775 traffic sign instances covering 9 categories and 28 classes. The dataset was divided into three subsets: test (10%, 579 images), validation (20%, 1,050 images), and training (70%, 3,728 images). It is available upon request; to obtain it for training and testing, please send an email to afef.zwidi@regim.usf.tn
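
The three subset sizes add up to the full dataset (3,728 + 1,050 + 579 = 5,357 images), i.e. roughly 69.6%, 19.6%, and 10.8%, which the stated 70/20/10 percentages round. The source does not say how images were assigned to subsets, so the sketch below assumes a seeded random split at the image level; the image IDs and function name are hypothetical. Splitting by image rather than by sign instance keeps all signs from one photo in the same subset, avoiding leakage between training and test data.

```python
import random
from typing import Dict, List, Sequence

# Subset sizes reported for TUROS-TS (5,357 images in total).
SPLIT_SIZES: Dict[str, int] = {"train": 3728, "val": 1050, "test": 579}


def split_images(image_ids: Sequence[str], seed: int = 0) -> Dict[str, List[str]]:
    """Shuffle once with a fixed seed, then cut consecutive slices of the stated sizes."""
    expected = sum(SPLIT_SIZES.values())
    if len(image_ids) != expected:
        raise ValueError(f"expected exactly {expected} image ids, got {len(image_ids)}")
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)  # local generator: reproducible, no global state
    splits: Dict[str, List[str]] = {}
    start = 0
    for name, size in SPLIT_SIZES.items():
        splits[name] = ids[start:start + size]
        start += size
    return splits
```

A class-stratified split would be a reasonable alternative if some of the 28 classes are rare, since a purely random split can leave a rare class absent from the test set.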

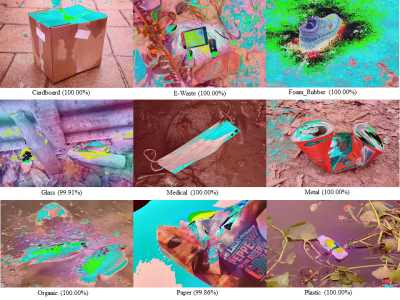

An automatic waste classification system built on an accurate and precise convolutional neural network (CNN) model can significantly reduce the manual labor involved in recycling. The ConvNeXt architecture has achieved remarkable improvements in image recognition. This study introduces a larger dataset, TrashNeXt, comprising 23,625 images across nine categories, created by combining and thoroughly analyzing various pre-existing datasets.
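
The source does not describe how the pre-existing datasets were combined. Pooling datasets typically involves at least two steps: mapping each source's labels onto a shared taxonomy and dropping duplicate images that appear in more than one source. The sketch below shows both under loud assumptions: the dataset names, the label strings, and `LABEL_MAP` are hypothetical, and the actual nine TrashNeXt categories are not listed in the source.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Sample:
    source: str  # name of the pre-existing dataset (hypothetical)
    label: str   # label as written in that dataset
    data: bytes  # raw image file contents


# Hypothetical mapping from (source, source label) to a unified category.
LABEL_MAP: Dict[Tuple[str, str], str] = {
    ("dataset_a", "cardboard"): "cardboard",
    ("dataset_b", "carton"): "cardboard",
    ("dataset_a", "glass"): "glass",
    ("dataset_b", "bottle_glass"): "glass",
}


def merge(samples: Iterable[Sample],
          label_map: Dict[Tuple[str, str], str] = LABEL_MAP) -> List[Tuple[str, bytes]]:
    """Relabel samples into the unified taxonomy and drop byte-identical duplicates."""
    seen = set()
    merged: List[Tuple[str, bytes]] = []
    for s in samples:
        unified = label_map.get((s.source, s.label))
        if unified is None:
            continue  # label has no counterpart in the unified taxonomy
        digest = hashlib.sha256(s.data).hexdigest()
        if digest in seen:
            continue  # identical file already contributed by another source
        seen.add(digest)
        merged.append((unified, s.data))
    return merged
```

Exact hashing only catches byte-identical copies; resized or re-encoded duplicates would need a perceptual-hash or embedding-similarity check instead.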

The IARPA Space-Based Machine Automated Recognition Technique (SMART) program was one of the first large-scale research programs to advance the state of the art in automatically detecting, characterizing, and monitoring large-scale anthropogenic activity in global-scale, multi-source, heterogeneous satellite imagery. The program leveraged and advanced the latest techniques in artificial intelligence (AI), computer vision (CV), and machine learning (ML) for geospatial applications.

The Dash Cam Video Dataset is a comprehensive collection of real-world road footage captured across various Indian roads, focusing on lane conditions and traffic dynamics. Indian roads are often characterized by inconsistent lane markings, unstructured traffic flow, and frequent obstructions, making lane detection and traffic identification a challenging task for autonomous vehicle systems.

OpenGL is a library for computer graphics. With it, we can create interactive applications that render high-quality color images composed of 3D geometric objects and images. OpenGL is independent of the window system and the operating system; as such, the part of our application that does the rendering is platform independent. However, for OpenGL to be able to render, it needs a window to draw into. Generally, the Project OpenGL Ludo-Board Game is a computer graphics project. The computer graphics project used
