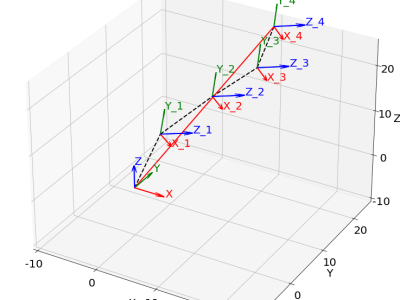

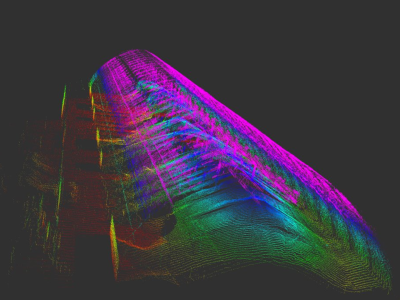

This dataset contains three-degree-of-freedom (3-DOF) data for a Kinova robotic arm from two sources: sensor measurements recorded on the physical arm and output from a simulation. The sensory data consists of real-time measurements of the arm's joint positions, velocities, and torques, which together give a detailed account of its dynamic behavior. The simulated data was generated with a high-fidelity physics engine that models the Kinova arm's kinematics and dynamics under various operating scenarios.
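The file format and field names of the dataset are not described here. The following is therefore only a minimal sketch. It assumes each record is one time step holding the position, velocity, and torque of all three joints, and that the real and simulated streams share a common time base. `JointSample`, `per_joint_rmse`, and the units in the comments are hypothetical. The sketch shows one way to compare the two sources: compute the per-joint root-mean-square error (RMSE) between them.

```python
import math
from dataclasses import dataclass

NUM_JOINTS = 3  # the dataset covers three degrees of freedom


@dataclass
class JointSample:
    """One time step of 3-DOF joint state (hypothetical record layout)."""
    t: float                     # timestamp in seconds (assumed)
    position: tuple[float, ...]  # joint angles, rad (assumed units)
    velocity: tuple[float, ...]  # joint velocities, rad/s (assumed units)
    torque: tuple[float, ...]    # joint torques, N*m (assumed units)

    def __post_init__(self):
        # Reject records that do not carry exactly one value per joint.
        for name in ("position", "velocity", "torque"):
            if len(getattr(self, name)) != NUM_JOINTS:
                raise ValueError(f"{name} must have {NUM_JOINTS} entries")


def per_joint_rmse(real, sim, field="position"):
    """Per-joint RMSE between time-aligned real and simulated samples.

    Assumes real[i] and sim[i] refer to the same instant; streams sampled
    at different rates would need resampling first.
    """
    if not real or len(real) != len(sim):
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [0.0] * NUM_JOINTS
    for r, s in zip(real, sim):
        for j, (a, b) in enumerate(zip(getattr(r, field), getattr(s, field))):
            squared[j] += (a - b) ** 2
    return [math.sqrt(total / len(real)) for total in squared]


if __name__ == "__main__":
    zeros = (0.0, 0.0, 0.0)
    real = [JointSample(0.00, zeros, zeros, zeros),
            JointSample(0.01, zeros, zeros, zeros)]
    sim = [JointSample(0.00, (1.0, 2.0, 0.0), zeros, zeros),
           JointSample(0.01, (1.0, 2.0, 0.0), zeros, zeros)]
    print(per_joint_rmse(real, sim))  # [1.0, 2.0, 0.0]
```

Reporting the error per joint rather than as a single pooled number makes it visible when the simulation tracks some joints closely and drifts on others. The same function works on velocities or torques if you pass `field="velocity"` or `field="torque"`.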
