Robotics

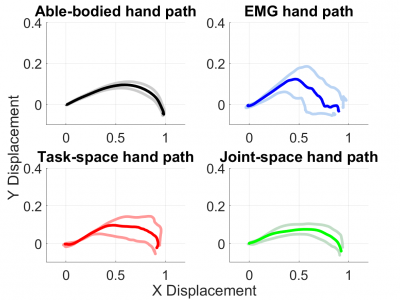

Synergistic prostheses enable the coordinated movement of the human-prosthetic arm required by activities of daily living. This is achieved by coupling the motion of the prosthesis to a human command, such as residual limb movement in motion-based interfaces. Previous studies have shown that human-prosthetic synergies defined in joint space must account for individual motor behaviour and the intended task, which requires personalisation and task-specific calibration.
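As an illustration of coupling prosthesis motion to residual limb movement, the sketch below assumes the simplest possible joint-space synergy: a linear map from shoulder angle to elbow angle, fitted per user by least squares on calibration data recorded while that user performs the target task. The linear form, the function names and the 0 to 150 degree elbow limits are hypothetical choices for illustration, not the method of the dataset authors.

```python
from typing import Sequence, Tuple


def fit_linear_synergy(shoulder: Sequence[float],
                       elbow: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of elbow = gain * shoulder + offset (angles in degrees).

    Hypothetical per-user, per-task calibration step.
    """
    n = len(shoulder)
    if n != len(elbow) or n < 2:
        raise ValueError("need at least two paired calibration samples")
    mean_s = sum(shoulder) / n
    mean_e = sum(elbow) / n
    var_s = sum((s - mean_s) ** 2 for s in shoulder)
    if var_s == 0:
        raise ValueError("shoulder angle must vary during calibration")
    cov = sum((s - mean_s) * (e - mean_e) for s, e in zip(shoulder, elbow))
    gain = cov / var_s
    offset = mean_e - gain * mean_s
    return gain, offset


def prosthetic_elbow_command(shoulder_angle: float, gain: float, offset: float,
                             limits: Tuple[float, float] = (0.0, 150.0)) -> float:
    """Map the residual-limb shoulder angle to an elbow set-point.

    The result is clamped to assumed (hypothetical) elbow joint limits.
    """
    lo, hi = limits
    return min(hi, max(lo, gain * shoulder_angle + offset))


if __name__ == "__main__":
    # Hypothetical calibration recording for one user and one task.
    gain, offset = fit_linear_synergy([0, 10, 20, 30], [20, 40, 60, 80])
    print(gain, offset)                                     # 2.0 20.0
    print(prosthetic_elbow_command(25.0, gain, offset))     # 70.0
```

Refitting `gain` and `offset` for each user and task is one concrete reading of the "personalisation and task calibration" requirement; richer synergies would replace the scalar fit with a multi-joint regression.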

448 Views

Egocentric vision is important for environment-adaptive control and navigation of humans and robots. Here we present ExoNet, the largest open-source dataset of wearable camera images of real-world walking environments. The dataset contains over 5.6 million RGB images of indoor and outdoor environments, collected during summer, fall, and winter. Of these, 923,000 images were human-annotated using a 12-class hierarchical labelling architecture.

6168 Views

We introduce ONERA.ROOM, a new robotic RGB-D dataset with difficult luminosity conditions. It comprises RGB-D data (as pairs of images) and corresponding annotations in PASCAL VOC format (XML files).

It targets people detection in indoor and, to a lesser extent, outdoor environments. People in the field of view may be standing, but also lying on the ground, as after a fall.
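Because the annotations use the PASCAL VOC XML layout, they can be read with the Python standard library alone. The sketch below assumes the usual VOC structure (`<object>` elements holding a `<name>` and a `<bndbox>` with `xmin`, `ymin`, `xmax`, `ymax`); the class name `person` and the file name in the sample are hypothetical and should be checked against the actual ONERA.ROOM files.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

# (class name, xmin, ymin, xmax, ymax) in pixel coordinates
Box = Tuple[str, int, int, int, int]


def parse_voc(xml_text: str, keep_class: Optional[str] = None) -> List[Box]:
    """Extract bounding boxes from one PASCAL VOC annotation string.

    If keep_class is given, only objects with that <name> are returned.
    """
    root = ET.fromstring(xml_text)
    boxes: List[Box] = []
    for obj in root.iter("object"):
        name = (obj.findtext("name") or "").strip()
        if keep_class is not None and name != keep_class:
            continue
        bb = obj.find("bndbox")
        if bb is None:
            continue  # skip objects without a box
        # VOC coordinates are sometimes written as floats, so parse via float.
        xmin, ymin, xmax, ymax = (int(float(bb.findtext(tag, "0")))
                                  for tag in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((name, xmin, ymin, xmax, ymax))
    return boxes


# Hypothetical annotation, written in the standard VOC layout.
SAMPLE_XML = """<annotation>
  <filename>rgb_0001.png</filename>
  <object>
    <name>person</name>
    <bndbox><xmin>48</xmin><ymin>120</ymin><xmax>210</xmax><ymax>300</ymax></bndbox>
  </object>
</annotation>"""

if __name__ == "__main__":
    print(parse_voc(SAMPLE_XML, keep_class="person"))
```

Running the script prints `[('person', 48, 120, 210, 300)]`. In practice one would call `ET.parse(path).getroot()` per XML file and pair each result with the matching RGB and depth images.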

459 Views

These datasets contain recordings from the proprioceptive sensors of the hydraulically actuated robot HyQ: absolute and relative encoders, force and torque sensors, and MEMS-based and fibre-optic-based inertial measurement units (IMUs). Additionally, a motion capture system recorded ground-truth data with millimetre accuracy. In the datasets, HyQ was manually controlled to trot in place or move around the laboratory. The sequences include forward and backward motion, side-to-side motion, zig-zags, yaw motion, and a mix of linear and yaw motion.
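A typical use of the motion capture ground truth is to score a state estimator built from the proprioceptive sensors. The sketch below computes the position root-mean-square error (RMSE), assuming the estimate and the ground truth have already been time-synchronised, resampled to the same instants and expressed in the same frame; those preprocessing steps and the function name are assumptions, not part of the dataset description.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def position_rmse(estimate: Sequence[Point], ground_truth: Sequence[Point]) -> float:
    """RMSE of 3-D position error between two time-aligned trajectories.

    Assumes sample i of both sequences refers to the same instant and frame.
    """
    if len(estimate) != len(ground_truth) or not estimate:
        raise ValueError("trajectories must be non-empty and of equal length")
    sq_sum = 0.0
    for (ex, ey, ez), (gx, gy, gz) in zip(estimate, ground_truth):
        sq_sum += (ex - gx) ** 2 + (ey - gy) ** 2 + (ez - gz) ** 2
    return math.sqrt(sq_sum / len(estimate))


if __name__ == "__main__":
    est = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
    gt = [(3.0, 4.0, 0.0), (0.0, 0.0, 0.0)]
    print(position_rmse(est, gt))  # sqrt(25 / 2), about 3.536
```

The same loop extends to orientation error (for example, comparing IMU-derived yaw with motion capture yaw), provided angle wrap-around is handled.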

1730 Views

In recent years, researchers have explored using human body posture and motion to control robots in more natural ways. These interfaces require tracking the user's body movements in three dimensions. Motion capture systems tend to be costly and intrusive and require a clear line of sight, which makes them ill-suited to applications that need fast deployment. In this article, we use consumer-grade armbands, which capture orientation information and muscle activity, to interact with a robotic system through a state machine controlled by a body motion classifier.
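To make the "state machine controlled by a body motion classifier" concrete, the sketch below feeds one classifier label per frame into a transition table. Everything here is hypothetical: the states (`IDLE`, `FOLLOW`, `HOLD`), the motion labels, and the rule that a label must persist for `confirm_frames` consecutive frames before a transition fires (a common way to suppress spurious classifications, not necessarily the one used in the article).

```python
from enum import Enum, auto
from typing import Dict, Optional, Tuple


class State(Enum):
    IDLE = auto()    # robot ignores arm motion
    FOLLOW = auto()  # robot tracks the user's arm orientation
    HOLD = auto()    # robot freezes its current pose


# Hypothetical transition table: (current state, classified motion) -> next state
TRANSITIONS: Dict[Tuple[State, str], State] = {
    (State.IDLE, "raise_arm"): State.FOLLOW,
    (State.FOLLOW, "fist"): State.HOLD,
    (State.HOLD, "open_hand"): State.FOLLOW,
    (State.FOLLOW, "lower_arm"): State.IDLE,
    (State.HOLD, "lower_arm"): State.IDLE,
}


class MotionStateMachine:
    def __init__(self, confirm_frames: int = 3) -> None:
        self.state = State.IDLE
        self.confirm_frames = confirm_frames
        self._candidate: Optional[str] = None  # label currently being confirmed
        self._count = 0                        # consecutive frames with that label

    def update(self, label: str) -> State:
        """Consume one classifier output and return the (possibly new) state."""
        if label == self._candidate:
            self._count += 1
        else:
            self._candidate, self._count = label, 1
        nxt = TRANSITIONS.get((self.state, label))
        # Only switch once the same label has been seen confirm_frames times in a row.
        if nxt is not None and self._count >= self.confirm_frames:
            self.state = nxt
            self._candidate, self._count = None, 0
        return self.state


if __name__ == "__main__":
    sm = MotionStateMachine(confirm_frames=3)
    for lbl in ["raise_arm"] * 3 + ["fist"] * 3:
        print(lbl, sm.update(lbl).name)
```

Keeping the transitions in a table rather than in nested conditionals makes it easy to add gestures or states without touching the confirmation logic.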

361 Views