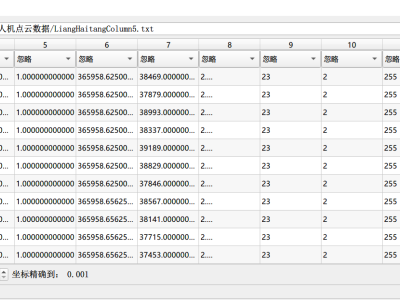

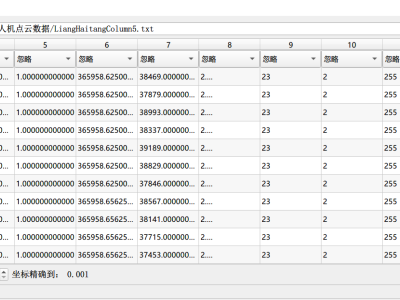

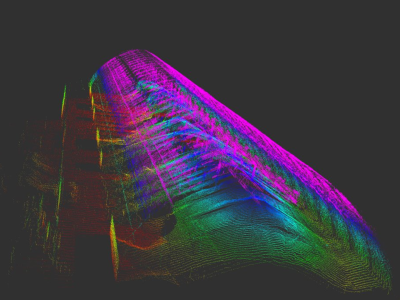

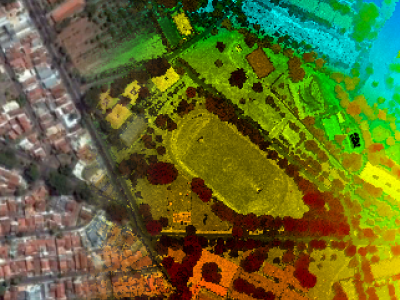

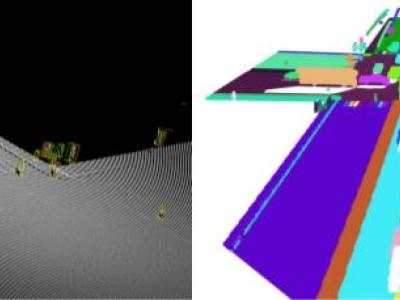

This dataset is released with our paper titled “Annotated 3D Point Cloud Dataset for Traffic Management in Simulated Urban Intersections”. The paper proposes a 3D simulation-based approach, implemented in Blender, for generating a 3D point cloud dataset from an elevated LiDAR that simulates traffic at road intersections. We generated randomized and controlled traffic scenarios covering vehicle and pedestrian movement around and within the intersection area. The dataset is annotated to support 3D object detection and instance segmentation tasks.

- Categories: