computer vision

The advancement of machine and deep learning methods in traffic sign detection is critical for improving road safety and developing intelligent transportation systems. However, the scarcity of a comprehensive and publicly available dataset on Indian traffic has been a significant challenge for researchers in this field. To reduce this gap, we introduced the Indian Road Traffic Sign Detection dataset (IRTSD-Datasetv1), which captures real-world images across diverse conditions.

- Categories:

1718 Views

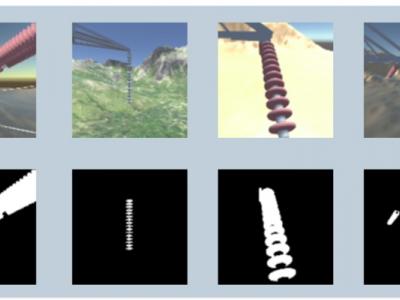

1718 ViewsThis database contains Synthetic High-Voltage Power Line Insulator Images.

There are two sets of images: one for image segmentation and another for image classification.

The first set contains images with different types of materials and landscapes, including the following landscape types: Mountains, Forest, Desert, City, Stream, Plantation. Each of the above-mentioned landscape types consists of 2,627 images per insulator type, which can be Ceramic, Polymeric or made of Glass, with a total of 47,286 distinct images.

- Categories:

604 Views

604 Views

Evaluation of human gait through smartphone-based pose estimation algorithms provides an attractive alternative to costly lab-bound instrumented assessment and offers a paradigm shift with real time gait capture for clinical assessment. Systems based on smart phones, such as OpenPose and BlazePose have demonstrated potential for virtual motion assessment but still lack the accuracy and repeatability standards required for clinical viability. Seq2seq architecture offers an alternative solution to conventional deep learning techniques for predicting joint kinematics during gait.

- Categories:

149 Views

149 Views

This is the relevant data in "Monocular Homography Estimation and Positioning Method for the Spatial-Temporal Distribution of Vehicle Loads Identification".

- Categories:

151 Views

151 ViewsThis study presents an automated approach for the generation of graphs from hand-drawn electrical circuit diagrams, aiming to streamline the digitization process and enhance the efficiency of traditional circuit design methods. Leveraging image processing, computer vision algorithms, and machine learning techniques, the system accurately identifies and extracts circuit components, capturing spatial relationships and diverse drawing styles.

- Categories:

738 Views

738 ViewsAn understanding of local walking context plays an important role in the analysis of gait in humans and in the high level control systems of robotic prostheses. Laboratory analysis on its own can constrain the ability of researchers to properly assess clinical gait in patients and robotic prostheses to function well in many contexts, therefore study in diverse walking environments is warranted. A ground-truth understanding of the walking terrain is traditionally identified from simple visual data.

- Categories:

306 Views

306 ViewsThe temporal variability in calving front positions of marine-terminating glaciers permits inference on the frontal ablation. Frontal ablation, the sum of the calving rate and the melt rate at the terminus, significantly contributes to the mass balance of glaciers. Therefore, the glacier area has been declared as an Essential Climate Variable product by the World Meteorological Organization. The presented dataset provides the necessary information for training deep learning techniques to automate the process of calving front delineation.

- Categories:

223 Views

223 ViewsRecognizing and categorizing banknotes is a crucial task, especially for individuals with visual impairments. It plays a vital role in assisting them with everyday financial transactions, such as making purchases or accessing their workplaces or educational institutions. The primary objectives for creating this dataset were as follows:

- Categories:

337 Views

337 Views

We generated an IV fluid-specific dataset to maximize the accuracy of the measurement. We developed our system as a smartphone application, utilizing the internal camera for the nurses or patients. Thus, users should be able to capture the surface of the fluid in the container by adjusting the smartphone's position or angle to reveal the front view of the container. Thus, we collected the front view of the IV fluid containers when generating the training dataset.

- Categories:

18 Views

18 Views