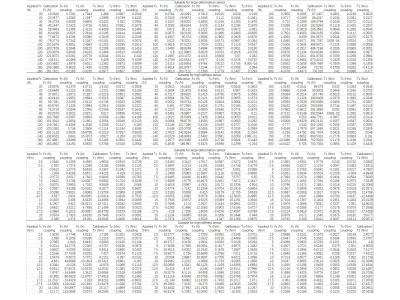

This dataset contains calibration comparison data for a single large-deformation six-dimensional force sensor, calibrated in two ways: with the proposed calibration device, which is designed for large-deformation six-dimensional force sensors, and with a calibration device designed for high-rigidity six-dimensional force sensors.
