Image Processing

This dataset is a collection of images and their respective labels, covering multiple Indian coins of different denominations and their variations. The dataset contains images of only one side of each coin (the tails side), which shows the denomination value.

The samples were collected with the help of a mobile phone while the coins were placed on top of a white sheet of A4-sized paper.

3366 Views

A crowdsourcing-based subjective evaluation was conducted on viewport images rendered with the Pannini projection from ten omnidirectional images in equirectangular format (ERI). The aim was to assess the perceptual impact of the object shape deformation introduced by sphere-to-plane projections. More details on the Pannini projection, the rendered viewports, and the subjective test procedure can be found in [1].

90 Views

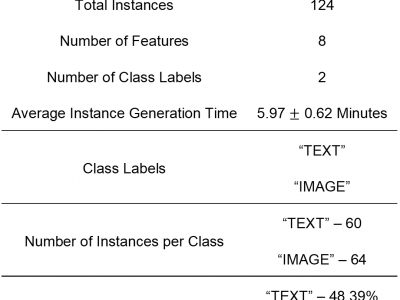

Human intention is an internal, mental characterization for acquiring desired information. From interactive interfaces, containing either textual or graphical information, intention to perceive desired information is subjective and strongly connected with eye gaze. In this work, we determine such intention by analyzing real-time eye gaze data with a low-cost regular webcam. We extracted unique features (e.g., Fixation Count, Eye Movement Ratio) from the eye gaze data of 31 participants to generate the dataset

634 Views

This dataset is composed of both real and synthetic images of power transmission lines, which can be used to train deep neural networks for line inspection tasks. The images are divided into three distinct classes, representing power lines with different geometric properties. The real-world images are labeled "circuito_real" (real circuit), while the synthetic ones are labeled "circuito_simples" (simple circuit) or "circuito_duplo" (double circuit). There are 348 images in each class: 232 intended for training and 116 for validation/testing.
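The per-class split described above (232 training and 116 validation/testing images out of 348) can be sketched as follows. This is only an illustration: the class labels and counts come from the description, but the file names, the seeded shuffle, and the `split_class` helper are assumptions, and the dataset may already ship with its own fixed split.

```python
import random
from typing import List, Tuple

# Class labels and per-class counts as given in the dataset description.
CLASSES = ["circuito_real", "circuito_simples", "circuito_duplo"]
N_TRAIN, N_EVAL = 232, 116  # 232 + 116 = 348 images per class


def split_class(files: List[str], seed: int = 0) -> Tuple[List[str], List[str]]:
    """Split one class into training and validation/testing lists.

    Hypothetical helper: the shuffle and seed are assumptions, not the
    authors' documented procedure.
    """
    if len(files) != N_TRAIN + N_EVAL:
        raise ValueError(f"expected {N_TRAIN + N_EVAL} files, got {len(files)}")
    shuffled = sorted(files)               # start from a deterministic order
    random.Random(seed).shuffle(shuffled)  # reproducible shuffle
    return shuffled[:N_TRAIN], shuffled[N_TRAIN:]
```

Calling `split_class` once per entry in `CLASSES` gives 696 training and 348 validation/testing images in total.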

3426 Views

this is a test

324 Views

Researchers are becoming increasingly interested in dorsal hand vein biometrics because of their characteristics, which can be summarized as follows: they do not require contact with the capture device, cannot be forged, do not change over time, and provide high accuracy. The performance of dorsal hand vein recognition systems depends on the quality of the images captured by the device. Near-infrared (NIR) light is used to distinguish the veins on the back of the hand.

1283 Views

The FLoRI21 dataset provides ultra-widefield fluorescein angiography (UWF FA) images for the development and evaluation of retinal image registration algorithms. Images are included across five subjects. For each subject, there is one montage FA image that serves as the common reference image for registration and a set of two or more individual ("raw") FA images (taken over multiple clinic visits) that are target images for registration. Overall, these constitute 15 reference-target image pairs for image registration.

1482 Views

These simulated live cell microscopy sequences were generated by the CytoPacq web service https://cbia.fi.muni.cz/simulator [R1]. The dataset is composed of 51 2D sequences and 41 3D sequences. The 2D sequences are split into 44 training and 7 test sequences. The 3D sequences are split into 34 training and 7 test sequences. Each sequence contains up to 200 frames.

308 Views

The dataset contains UAV imagery and fracture interpretations of rock outcrops acquired in Praia das Conchas, Cabo Frio, Rio de Janeiro, Brazil. Along with georeferenced .geotiff images, the dataset contains filtered 500 x 500 .png tiles that include only scenes with fracture data, together with .png binary masks for semantic segmentation and the original georeferenced shapefile annotations. This data can be useful for segmenting and extracting geological structures from UAV imagery, and for evaluating computer vision methodologies or machine learning techniques.
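One way such filtered tiles could be produced is sketched below: cut the scene into 500 x 500 tiles and keep only those whose binary mask contains at least one fracture pixel. The description does not say how the authors tiled or filtered the imagery, so the non-overlapping grid, the dropping of partial edge tiles, and all function names here are assumptions. The mask is a plain nested list to keep the sketch dependency-free.

```python
from typing import Iterator, List, Sequence, Tuple

TILE = 500  # tile edge in pixels, as stated in the dataset description


def tile_origins(width: int, height: int, tile: int = TILE) -> Iterator[Tuple[int, int]]:
    """Yield (x, y) top-left corners of full, non-overlapping tiles.

    Assumption: partial tiles at the right and bottom edges are dropped.
    """
    for y in range(0, height - tile + 1, tile):
        for x in range(0, width - tile + 1, tile):
            yield x, y


def has_fracture(mask: Sequence[Sequence[int]], x: int, y: int, tile: int = TILE) -> bool:
    """Return True if any mask pixel inside the tile is nonzero."""
    return any(any(row[x:x + tile]) for row in mask[y:y + tile])


def fracture_tiles(mask: Sequence[Sequence[int]], tile: int = TILE) -> List[Tuple[int, int]]:
    """List the origins of tiles that contain fracture annotations."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    return [(x, y) for x, y in tile_origins(width, height, tile)
            if has_fracture(mask, x, y, tile)]
```

In practice the mask would be rasterized from the shapefile annotations and read with an imaging or GIS library; that step is outside this sketch.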

543 Views

Datasets for image and video aesthetics

1. Video dataset: 107 videos. The videos can be split into frames (images). Attributes that affect aesthetics, such as color contrast, depth of field (DoF), and rule of thirds (RoT), can be extracted from the video dataset.

2. Slow and fast videos can be assessed for how motion affects aesthetics.
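As a rough illustration of the attributes mentioned above, the sketch below computes a simple contrast measure for one grayscale frame and the four rule-of-thirds intersection points for a given frame size. The description does not define how these attributes are measured, so RMS contrast and both function names are assumptions chosen for illustration; frame extraction from video is left out.

```python
from statistics import pstdev
from typing import List, Sequence, Tuple


def rms_contrast(gray: Sequence[Sequence[int]]) -> float:
    """RMS contrast: population standard deviation of 8-bit intensities
    scaled to [0, 1]. One common contrast measure among several."""
    pixels = [value / 255.0 for row in gray for value in row]
    return pstdev(pixels)


def thirds_points(width: int, height: int) -> List[Tuple[float, float]]:
    """Return the four intersections of the rule-of-thirds grid lines."""
    xs = (width / 3, 2 * width / 3)
    ys = (height / 3, 2 * height / 3)
    return [(x, y) for y in ys for x in xs]
```

A rule-of-thirds score could then be derived from the distance between a detected subject and the nearest of these points, though how the subject is located is a separate design choice.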

274 Views