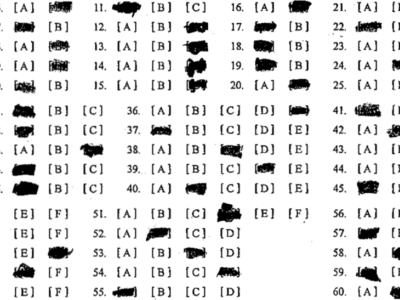

This dataset contains 2,052 .jpeg images from 74 students and documents student life across its academic, extracurricular, and social dimensions. The images span classrooms, sports fields, cultural events, and social gatherings, covering a range of learning environments, activities, and interactions. Researchers can use them to study educational dynamics, analyze social behavior, and develop algorithms for image recognition and analysis.
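As a quick sanity check after downloading, the image count can be compared against the stated total. The sketch below is only an illustration: it assumes the images sit under a single local root folder, optionally grouped into subfolders, which this description does not confirm. The function name `count_jpegs` and any folder names are hypothetical.

```python
from collections import Counter
from pathlib import Path


def count_jpegs(root):
    """Count .jpeg/.jpg files under root, grouped by top-level subfolder.

    Assumption: the dataset is unpacked into one local directory.
    Files directly in root are grouped under the key ".".
    """
    root = Path(root)
    counts = Counter()
    for path in root.rglob("*"):
        # Match the extension case-insensitively (.jpeg, .JPEG, .jpg, ...).
        if path.is_file() and path.suffix.lower() in {".jpeg", ".jpg"}:
            rel = path.relative_to(root)
            group = rel.parts[0] if len(rel.parts) > 1 else "."
            counts[group] += 1
    return counts
```

Calling `sum(count_jpegs("path/to/dataset").values())` on a complete copy should give 2,052 if the layout matches this assumption; the per-folder counts show how the images are distributed.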

- Categories: