Signal Processing

With the accelerating pace of population aging, the urgency and necessity for elderly individuals to control smart home systems have become increasingly evident. Smart homes not only enhance the independence of older adults, enabling them to complete daily activities more conveniently, but also ensure safety through health monitoring and emergency alert systems, thereby reducing the caregiving burden on families and society.

- Categories:

161 Views

161 Views

A novel ultra-low-voltage (ULV) Dual-EdgeTriggered (DET) flip-flop based on the True-Single-PhaseClocking (TSPC) scheme is presented in this paper. Unlike Single-Edge-Triggering (SET), Dual-Edge-Triggering has the advantage of operating at the half-clock rate of the SET clock. We exploit the TSPC principle to achieve the best energy-efficient figures by reducing the overall clock load (only to 8 transistors) and register power while providing fully static, contention-free functionality to satisfy ULV operation.

- Categories:

32 Views

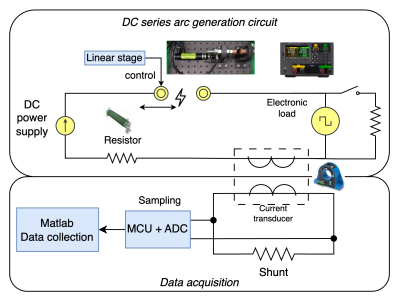

32 ViewsThis dataset is collected to investigate detection algorithms for DC series arc faults on resource constrained devices. The data is current signal collected from test-bench build to simulate arc fault under various conditions according to the standard UL1699B. Different condition includes the power mode regulated by the elctronic load in the system for the simulation of different system dynamics under topology series and parallel.

- Categories:

80 Views

80 ViewsJamming devices present a significant threat by disrupting signals from the global navigation satellite system (GNSS), compromising the robustness of accurate positioning. The detection of anomalies within frequency snapshots is crucial to counteract these interferences effectively. A critical preliminary measure involves the reliable classification of interferences and characterization and localization of jamming devices.

- Categories:

254 Views

254 ViewsJamming devices pose a significant threat by disrupting signals from the global navigation satellite system (GNSS), compromising the robustness of accurate positioning. Detecting anomalies in frequency snapshots is crucial to counteract these interferences effectively. The ability to adapt to diverse, unseen interference characteristics is essential for ensuring the reliability of GNSS in real-world applications. We recorded a dataset with our own sensor station at a German highway with two interference classes and one non-interference class.

- Categories:

292 Views

292 Views

This rolling bearing dataset was collected using the QPZZ-II rotating machinery fault simulation test rig developed by Jiangsu Qianpeng Diagnostic Engineering Co., Ltd. The bearing used in the experiment was model NU205EM. An electromyographic-type acceleration sensor was used in this experiment, which was placed in the horizontal X and vertical Y directions of the bearing housing on the test rig.

- Categories:

152 Views

152 Views

A group of 10 healthy subjects without any upper limb pathologies participated in the data collection process. A total of 8 activities are performed by each subject. The measurement setup consists of a 5-channel Noraxon Ultium wireless sEMG sensor system. Representative muscle sites of the forearm are identified and self-adhesive Ag/AgCl dual electrodes are placed. The signal (sEMG) recorded during an ADL activity is segmented into functional phases: 1) rest 2) action and 3) release. During the rest phase, the subject is instructed to rest the muscles in a natural way.

- Categories:

159 Views

159 Views

The data utilized the IWR1642 FMCW radar and the DCA1000EVM data acquisition board from Texas Instruments. Three different environments—bedroom, shared office, and unoccupied conference room—were selected as potential scenarios for non-contact vital sign monitoring. Continuous monitoring was conducted at distances of 0.5, 1, 1.5, and 2m for 120s. The results were calculated using a 30-second data window, with 91 calculations performed during each monitoring session, starting from the 30th second and continuing until the 120th second.

- Categories:

105 Views

105 Views

This code accompanies the paper titled "An End-to-End Modular Framework for Radar Signal Processing: A Simulation-Based Tutorial," published in IEEE Aerospace and Electronics Systems Magazine (DOI:10.1109/MAES.2023.3334689). The simulation of a complete radar has been performed, and the results have been shown after each stage/module of a radar, enabling the reader to appreciate the impact of the specific process on the radar signal.

- Categories:

221 Views

221 ViewsInterference signals degrade and disrupt Global Navigation Satellite System (GNSS) receivers, impacting their localization accuracy. Therefore, they need to be detected, classified, and located to ensure GNSS operation. State-of-the-art techniques employ supervised deep learning to detect and classify potential interference signals. We fuse both modalities only from a single bandwidth-limited low-cost sensor, instead of a fine-grained high-resolution sensor and coarse-grained low-resolution low-cost sensor.

- Categories:

449 Views

449 Views