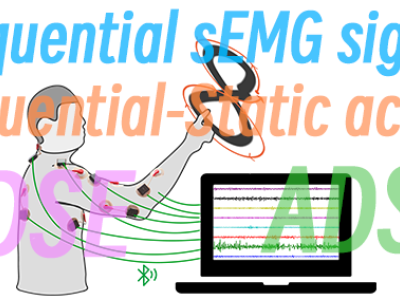

The Arabic Digit Sequential Electromyography (ADSE) dataset contains eight-lead surface EMG (sEMG) recordings targeting sequential signals.

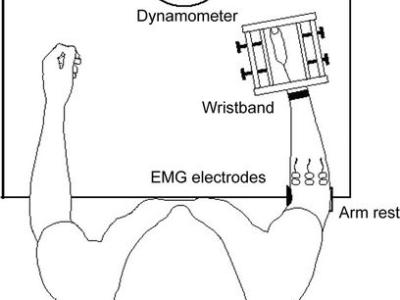

These data were recorded for EMG-based force/torque estimation. EMG and torque signals were collected during simultaneous, isometric, continuously varying contractions involving two wrist degrees of freedom (DoF). The experiment was carried out in two trials with a 5-min rest in between. Each trial included six combinations of tasks, separated by 2 min of rest to minimize the effect of fatigue. The tasks were divided into individual-DoF and combined (simultaneous) DoF tasks, to test the ability to estimate torque both for an isolated DoF and for two DoF acting simultaneously.
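The description gives the purpose (EMG-based torque estimation) but not the estimator. As a hedged illustration only, one common baseline computes a moving root-mean-square (RMS) envelope per EMG channel and fits a linear least-squares map from those envelopes to the torque of each DoF. The channel count, window length, sampling rate, and every function name below are hypothetical, and the data are synthetic; this is not presented as the method used with this dataset.

```python
import numpy as np

def rms_envelope(emg: np.ndarray, window: int) -> np.ndarray:
    """Moving RMS of each channel (hypothetical helper).

    emg has shape (n_samples, n_channels); the result has shape
    (n_samples - window + 1, n_channels) because only full windows are kept.
    """
    kernel = np.ones(window) / window
    squared = emg.astype(float) ** 2
    mean_sq = np.stack(
        [np.convolve(squared[:, c], kernel, mode="valid") for c in range(emg.shape[1])],
        axis=1,
    )
    return np.sqrt(mean_sq)

def add_bias(features: np.ndarray) -> np.ndarray:
    """Append a constant column so the linear model can learn an offset."""
    return np.hstack([features, np.ones((features.shape[0], 1))])

def fit_torque_model(features: np.ndarray, torque: np.ndarray) -> np.ndarray:
    """Least-squares weights mapping features (n, f) to torque (n, n_dof)."""
    weights, *_ = np.linalg.lstsq(add_bias(features), torque, rcond=None)
    return weights  # shape (f + 1, n_dof)

def predict_torque(features: np.ndarray, weights: np.ndarray) -> np.ndarray:
    return add_bias(features) @ weights

# Usage on synthetic data (hypothetical: 8 channels at 1 kHz, 2 wrist DoF).
rng = np.random.default_rng(0)
t = np.arange(2000) / 1000.0
# Slowly varying amplitude per channel mimics continuously varying contractions.
amplitude = 1.0 + 0.5 * np.sin(2 * np.pi * np.outer(t, rng.uniform(0.2, 1.0, 8)))
emg = rng.normal(size=(2000, 8)) * amplitude
features = rms_envelope(emg, window=100)
true_weights = rng.normal(size=(9, 2))
torque = predict_torque(features, true_weights)  # noise-free synthetic targets
weights = fit_torque_model(features, torque)
```

On real recordings the torque would come from the measurement channel rather than from `true_weights`, and the fit would be evaluated on held-out trials; nonlinear regressors are a frequent alternative to this linear baseline.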

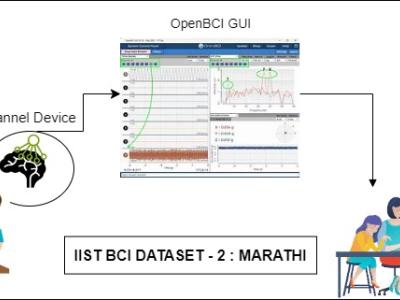

Problems faced by patients with neurodegenerative disorders can be addressed by developing Brain-Computer Interface (BCI)-based solutions. Such solutions require datasets in the languages spoken by the patients. For example, Marathi, a prominent language spoken by over 83 million people in India, lacks language-specific BCI datasets for research purposes. To fill this gap, we have created a dataset of electroencephalography (EEG) signal samples corresponding to selected common Marathi words.

This data set consists of 16 half-hour EEG recordings, obtained from 10 volunteers, as described below.

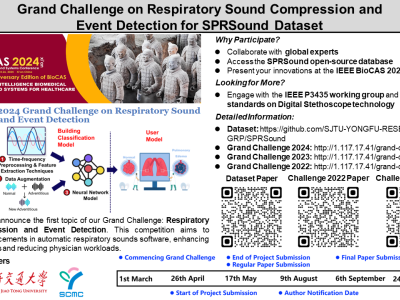

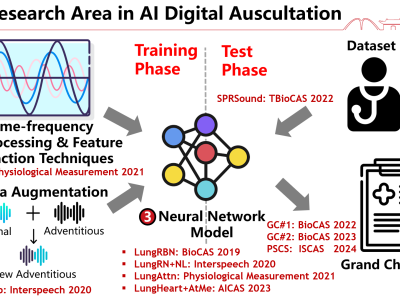

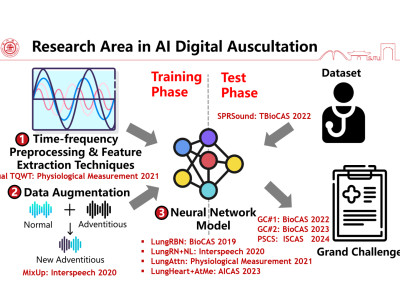

Respiratory diseases are among the leading causes of death worldwide and demand early diagnosis for effective management. Digital stethoscopes are promising but face storage and transmission limitations. A compression algorithm based on compressive sensing (CS) is needed to address these constraints, together with fast CS reconstruction algorithms that balance speed and fidelity. Sound event detection algorithms are also crucial for identifying abnormal lung sounds and improving diagnostic accuracy. Integrating these technologies could transform respiratory disease management and improve patient outcomes.
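The paragraph calls for compressive sensing (CS) compression and fast CS reconstruction without naming a specific algorithm. A minimal sketch, assuming the signal is already sparse (real lung sounds would first need a sparsifying transform such as a DCT or wavelet basis, omitted here): a random Gaussian matrix compresses a length-n signal to m < n measurements, and Orthogonal Matching Pursuit (OMP), one common greedy reconstruction method, recovers it. The sizes, seed, and function name are illustrative assumptions, not the method proposed in the source.

```python
import numpy as np

def omp(A: np.ndarray, y: np.ndarray, k: int) -> np.ndarray:
    """Orthogonal Matching Pursuit: estimate a k-sparse x from y = A @ x."""
    n = A.shape[1]
    residual = y.astype(float).copy()
    support: list[int] = []
    coef = np.zeros(0)
    for _ in range(k):
        # Greedy step: pick the column most correlated with the residual.
        j = int(np.argmax(np.abs(A.T @ residual)))
        if j not in support:
            support.append(j)
        # Least-squares fit on the chosen columns, then refresh the residual.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x_hat = np.zeros(n)
    x_hat[support] = coef
    return x_hat

# Usage: a synthetic 4-sparse signal of length 128 compressed to 64 numbers.
rng = np.random.default_rng(1)
n, m, k = 128, 64, 4
x = np.zeros(n)
idx = rng.choice(n, size=k, replace=False)
x[idx] = rng.choice([-1.0, 1.0], size=k) * (1.0 + rng.random(k))
A = rng.normal(size=(m, n)) / np.sqrt(m)  # random Gaussian sensing matrix
y = A @ x                                 # compressed measurements (m < n)
x_hat = omp(A, y, k)
```

Only `y` (m values) would need to be stored or transmitted; the decoder needs the same sensing matrix, which in practice is usually regenerated from a shared seed. OMP is fast for small k, which is one reason greedy methods are often considered when reconstruction speed matters.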

Finger Scanning Experiments: Participants performed the experiments while seated and wearing an eye mask to eliminate visual information. They were instructed to scan the surface horizontally back and forth eight times with one finger to assess its roughness. The finger's motion was captured optically at 60 fps. Scanning speed was determined by measuring the finger position in each frame and dividing the displacement between consecutive frames by the frame interval (1/60 s). Image analysis was performed with OpenCV to extract the finger outline from the video.
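A minimal sketch of the scanning-speed computation, assuming speed is the displacement between consecutive frames divided by the frame interval (1/60 s at 60 fps). The positions are assumed to be already extracted from the video and converted from pixels to millimetres; that calibration, the function names, and the sample track are hypothetical.

```python
import math

FPS = 60.0  # frame rate given in the description

def scanning_speeds(positions, fps=FPS):
    """Speed between consecutive frames from tracked finger positions.

    positions: list of (x, y) per frame in calibrated units such as mm
    (pixel-to-mm calibration is assumed, not described in the source).
    Returns len(positions) - 1 speeds in units per second.
    """
    speeds = []
    for (x0, y0), (x1, y1) in zip(positions, positions[1:]):
        displacement = math.hypot(x1 - x0, y1 - y0)
        # Dividing by the frame interval (1 / fps) equals multiplying by fps.
        speeds.append(displacement * fps)
    return speeds

def mean_speed(positions, fps=FPS):
    """Average speed over a scan, ignoring direction (back-and-forth motion)."""
    speeds = scanning_speeds(positions, fps)
    return sum(speeds) / len(speeds)

# Hypothetical positions (mm) over four frames: +1 mm, +2 mm, then -1 mm.
track = [(0.0, 0.0), (1.0, 0.0), (3.0, 0.0), (2.0, 0.0)]
print(scanning_speeds(track))  # [60.0, 120.0, 60.0]
print(mean_speed(track))       # 80.0
```

Using the magnitude of the displacement keeps speeds positive when the finger reverses direction; a signed horizontal velocity (`(x1 - x0) * fps`) would be the alternative if direction matters.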

Globally, respiratory diseases are the leading cause of death, making it essential to develop automatic respiratory sound analysis software that speeds up diagnosis and reduces physician workload. Several recent approaches have been proposed for accurate prediction, but they have not yet achieved satisfactory generalization performance. In this contest, we invited the community to develop more accurate and better-generalizing respiratory sound algorithms. Starter code is provided to standardize submissions and lower the barrier to entry.

Auscultation of respiratory sounds has been shown to be advantageous for early respiratory diagnosis. Various methods have been proposed for automatic respiratory sound analysis to reduce subjective diagnosis and physicians' workload. However, these methods rely heavily on the quality of the respiratory sound database. In this work, we have developed the first open-access paediatric respiratory sound database, SPRSound. The database consists of 2,683 records and 9,089 respiratory sound events from 292 participants.

Abstract— Objective: Pulse oximetry is widely used to measure photoplethysmographic (PPG) signals and blood oxygen saturation but is susceptible to motion artifacts. This is a particular challenge for the growing field of wearable health devices. Independent component analysis (ICA) offers a solution for artifact removal without additional sensors. As there are different approaches for performing an ICA and because artifacts can take many forms, the optimal configuration is unknown.
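The abstract notes that ICA can remove artifacts without additional sensors but that the best configuration is unknown. As a hedged illustration of one configuration only (deflationary FastICA with a tanh nonlinearity, not necessarily any variant evaluated in the study), the sketch below separates two synthetic signals mixed into two channels. It assumes at least as many recorded channels as sources, and it does not decide which component is the artifact; that selection step is outside this sketch. The function name, mixing matrix, and test signals are hypothetical.

```python
import numpy as np

def fast_ica(X: np.ndarray, n_components: int, max_iter: int = 200,
             tol: float = 1e-6, seed: int = 0) -> np.ndarray:
    """Deflationary FastICA with a tanh (log-cosh) contrast.

    X: mixed signals, shape (n_channels, n_samples).
    Returns estimated sources, shape (n_components, n_samples), up to
    sign, scale, and ordering (ICA cannot resolve these).
    """
    rng = np.random.default_rng(seed)
    Xc = X - X.mean(axis=1, keepdims=True)
    # Whitening: project onto the top principal axes and scale to unit variance.
    d, E = np.linalg.eigh(np.cov(Xc))
    order = np.argsort(d)[::-1][:n_components]
    K = (E[:, order] / np.sqrt(d[order])).T
    Z = K @ Xc
    W = np.zeros((n_components, n_components))
    for i in range(n_components):
        w = rng.normal(size=n_components)
        w /= np.linalg.norm(w)
        for _ in range(max_iter):
            g = np.tanh(w @ Z)
            # Fixed-point update: E[z g(w^T z)] - E[g'(w^T z)] w
            w_new = (Z * g).mean(axis=1) - (1.0 - g ** 2).mean() * w
            # Deflation: remove projections onto components already found.
            w_new -= W[:i].T @ (W[:i] @ w_new)
            w_new /= np.linalg.norm(w_new)
            done = abs(abs(w_new @ w) - 1.0) < tol
            w = w_new
            if done:
                break
        W[i] = w
    return W @ Z

# Usage: a smooth sine (stand-in for a pulse) and a square wave (stand-in for an artifact).
t = np.linspace(0.0, 1.0, 2000)
S = np.vstack([np.sin(2 * np.pi * 5 * t), np.sign(np.sin(2 * np.pi * 3 * t))])
A = np.array([[1.0, 0.5], [0.6, 1.0]])  # hypothetical mixing into two channels
X = A @ S
S_hat = fast_ica(X, n_components=2)
# |correlation| between each true source and its best-matching estimate
C = np.abs(np.corrcoef(np.vstack([S, S_hat]))[:2, 2:])
print(C.max(axis=1))
```

Choices such as the nonlinearity (tanh, cube, Gaussian), deflation versus symmetric orthogonalization, and the number of channels are exactly the kind of configuration options the abstract says must be compared.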