Artificial Intelligence

The WPT dataset was created for "Web Page Tampering Detection Based on Dynamic Temporal Graph Pre-training". It contains over 200,000 regular web pages from 75 websites across the finance, healthcare, and education sectors, plus 1,541 tampered examples sourced from zone-h.org. The dataset organizes web pages as nodes and their links as edges in a discrete dynamic graph, captured as snapshots at successive points in time. Each node combines structural, textual, and statistical features into a 148-dimensional feature vector.
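As a rough illustration of the structure described above, a discrete dynamic graph can be held as a sequence of snapshots, each mapping pages to feature vectors and recording links as directed edges. The sketch below is not the dataset's actual file format or loader: the `Snapshot` class, the `changed_pages` helper, the timestamps, and the `example.org` URLs are all hypothetical, and only the 148-dimensional vector length comes from the description.

```python
from dataclasses import dataclass, field

FEATURE_DIM = 148  # per-page feature vector length stated in the description


@dataclass
class Snapshot:
    """One time step of a discrete dynamic graph (hypothetical layout)."""
    timestamp: str
    features: dict = field(default_factory=dict)  # page URL -> feature vector
    edges: set = field(default_factory=set)       # (source URL, target URL)

    def add_page(self, url, vector):
        # Reject vectors that do not match the stated dimensionality.
        if len(vector) != FEATURE_DIM:
            raise ValueError(f"expected {FEATURE_DIM} features, got {len(vector)}")
        self.features[url] = list(vector)

    def add_link(self, src, dst):
        # Both endpoints must already exist as nodes in this snapshot.
        if src not in self.features or dst not in self.features:
            raise KeyError("both pages must be added before linking them")
        self.edges.add((src, dst))


def changed_pages(earlier, later):
    """URLs present in both snapshots whose feature vectors differ."""
    shared = earlier.features.keys() & later.features.keys()
    return sorted(u for u in shared if earlier.features[u] != later.features[u])


# Two made-up snapshots of a two-page site.
t0 = Snapshot("2024-01-01")
t0.add_page("https://example.org/", [0.0] * FEATURE_DIM)
t0.add_page("https://example.org/about", [0.0] * FEATURE_DIM)
t0.add_link("https://example.org/", "https://example.org/about")

t1 = Snapshot("2024-01-02")
t1.add_page("https://example.org/", [1.0] + [0.0] * (FEATURE_DIM - 1))
t1.add_page("https://example.org/about", [0.0] * FEATURE_DIM)
t1.add_link("https://example.org/", "https://example.org/about")

print(changed_pages(t0, t1))
```

Comparing feature vectors between snapshots only shows which pages changed; it is not the tampering detection method the dataset was built for.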

70 Views

Weibo and Twitter

1) The Weibo dataset is derived from the Weibo social platform. True information in the dataset comes from authoritative Chinese sources, while fake information is obtained through the official Weibo rumor suppression system. Each data instance comprises a news text and a corresponding news image.

604 Views

The JKU-ITS AVDM contains data from 17 participants performing different tasks with various levels of distraction.

The data collection was carried out in accordance with the relevant guidelines and regulations and informed consent was obtained from all participants.

The dataset was collected using the JKU-ITS research vehicle with automated capabilities under different illumination and weather conditions along a secure test route within the

950 Views

This dataset concerns minimizing maritime passenger transfers in ship routing. It consists of data on the distances between ports, the number of passengers traveling from each port of origin to each port of destination, ship speed, and the duration of berthing at ports.

745 Views

This is a compressed package containing nine multi-label text classification data sets, including AAPD, CitySearch, Heritage, Laptop, Ohsumed, RCV1, Restaurant, Reuters, and Sentihood.

188 Views

This data set was collected from a custom-built battery prognostics testbed at the NASA Ames Prognostics Center of Excellence (PCoE). Li-ion batteries were run through three operational profiles (charge, discharge, and Electrochemical Impedance Spectroscopy) at different temperatures. Discharges were carried out at different current load levels until the battery voltage fell to preset voltage thresholds. Some of these thresholds were lower than the one recommended by the OEM (2.7 V) in order to induce deep discharge aging effects.

3627 Views

With recent progress in speaker-adaptive TTS, advanced approaches have shown a remarkable capacity to reproduce a speaker's voice on commonly used TTS datasets. However, mimicking voices with substantial accents, such as those of non-native English speakers, remains challenging. The absence of a dedicated TTS dataset for speakers with substantial accents hinders the research and evaluation of speaker-adaptive TTS models under such conditions. To address this gap, we developed a corpus of non-native speakers' English utterances.

331 Views

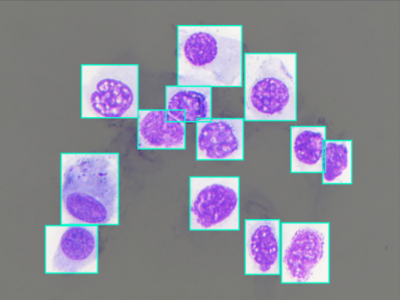

Nasal Cytology, or Rhinology, is the subfield of otolaryngology focused on the microscopic observation of samples of the nasal mucosa, with the aim of recognizing cells of different types in order to spot and diagnose ongoing pathologies. The methodology offers good accuracy in diagnosing rhinitis and infections, while being very cheap and accessible: it requires no instrument more complex than a microscope, even an optical one.

838 Views

Visual saliency prediction has been extensively studied in the context of standard dynamic range (SDR) displays. Recently, high dynamic range (HDR) displays have become popular, since HDR videos can provide viewers with a more realistic visual experience than SDR ones. However, studies on the visual saliency of HDR videos, also called HDR saliency, remain very few. We therefore establish an SDR-HDR Video pair Saliency Dataset (SDR-HDR-VSD) for saliency prediction on both SDR and HDR videos.

400 Views

QiandaoEar22 is a high-quality noise dataset designed for identifying specific ships among multiple underwater acoustic targets using ship-radiated noise. This dataset includes 9 hours and 28 minutes of real-world ship-radiated noise data and 21 hours and 58 minutes of background noise data.

1049 Views
