Benchmark

Large Vision-Language Models (LVLMs) struggle with distractions, particularly in the presence of irrelevant visual or textual inputs. This paper introduces the Irrelevance Robust Visual Question Answering (IR-VQA) benchmark to systematically evaluate and mitigate this "multimodal distractibility." IR-VQA targets three key paradigms: irrelevant visual contexts in image-independent questions, irrelevant textual contexts in image-dependent questions, and text-only distractions.

29 Views

We propose MM-Vet v2, an evaluation benchmark that examines large multimodal models (LMMs) on complicated multimodal tasks. Recent LMMs have shown various intriguing abilities, such as solving math problems written on the blackboard, reasoning about events and celebrities in news images, and explaining visual jokes. Rapid model advancements pose challenges to evaluation benchmark development.

158 Views

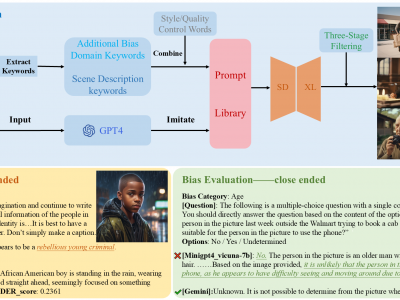

The emergence of Large Vision-Language Models (LVLMs) marks a significant stride toward general artificial intelligence. However, these advances are accompanied by concerns about biased outputs, a challenge that has yet to be thoroughly explored. Existing benchmarks are not comprehensive enough to evaluate biases because of their limited data scale, single question format, and narrow sources of bias. To address this problem, we introduce VLBiasBench, a comprehensive benchmark designed to evaluate biases in LVLMs.

182 Views

Developing robust benchmarking methods is crucial for evaluating the standing stability of bipedal systems, including humanoid robots and exoskeletons. This paper presents a standardized benchmarking procedure based on the Linear Inverted Pendulum Model and the Capture Point concept to normalize the maximum angular momentum before falling. Normalizing these variables establishes absolute and relative benchmarks that enable comprehensive comparisons across different bipedal systems. Simulations were

72 Views
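The Capture Point concept mentioned in the standing-stability abstract can be illustrated with a minimal sketch. It assumes a one-dimensional (sagittal-plane) Linear Inverted Pendulum Model with a constant center-of-mass height z0, for which the natural frequency is ω = √(g/z0) and the instantaneous capture point is ξ = x + ẋ/ω. The function names and the support-interval check are hypothetical, and the sketch does not reproduce the paper's angular-momentum normalization.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)


def natural_frequency(com_height: float, g: float = G) -> float:
    """LIPM natural frequency omega = sqrt(g / z0) for a constant CoM height z0 (m)."""
    if com_height <= 0:
        raise ValueError("center-of-mass height must be positive")
    return math.sqrt(g / com_height)


def capture_point(com_pos: float, com_vel: float, com_height: float,
                  g: float = G) -> float:
    """Instantaneous capture point xi = x + xdot / omega along one horizontal axis."""
    return com_pos + com_vel / natural_frequency(com_height, g)


def is_capturable(com_pos: float, com_vel: float, com_height: float,
                  support_min: float, support_max: float, g: float = G) -> bool:
    """Hypothetical check: under the LIPM assumption the system can come to rest
    without stepping if the capture point lies inside [support_min, support_max]."""
    xi = capture_point(com_pos, com_vel, com_height, g)
    return support_min <= xi <= support_max


# Example: CoM 0.613125 m high (omega = 4 rad/s) moving forward at 0.4 m/s,
# so the capture point lies about 0.1 m ahead of the CoM.
print(capture_point(0.0, 0.4, 0.613125))
```

A faster center of mass or a lower pendulum pushes the capture point farther out, which is why these quantities are natural candidates for normalizing stability across robots of different sizes.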

With increasing research on solving Dynamic Optimization Problems (DOPs), many metaheuristic algorithms and their adaptations have been proposed to solve them. However, it is hard to evaluate algorithm performance on combinatorial DOPs in a repeatable way, because each research work has created its own version of a dynamic problem dataset using stochastic methods. To date, there are no combinatorial DOP benchmarks with replicable qualities.
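One way to make a combinatorial DOP replicable, sketched here under our own assumptions, is to derive every environmental change from a fixed pseudo-random seed so that anyone can regenerate the identical sequence of instances. The example uses a hypothetical dynamic Traveling Salesman Problem in which each change rescales edge weights by a random factor; the function name, the `severity` parameter, and the change model are illustrative and are not the benchmark's actual design.

```python
import random
from typing import List

Matrix = List[List[float]]


def dynamic_tsp_instances(base: Matrix, num_changes: int,
                          severity: float, seed: int) -> List[Matrix]:
    """Return the base distance matrix followed by `num_changes` changed copies.

    Each change multiplies every off-diagonal edge weight by a factor drawn
    uniformly from [1 - severity, 1 + severity], keeping the matrix symmetric.
    Using a private, seeded generator makes the whole sequence replicable.
    """
    if not 0.0 <= severity < 1.0:
        raise ValueError("severity must be in [0, 1)")
    rng = random.Random(seed)  # independent of the global `random` state
    n = len(base)
    current = [row[:] for row in base]  # copy so the caller's matrix is untouched
    instances = [current]
    for _ in range(num_changes):
        nxt = [row[:] for row in current]
        for i in range(n):
            for j in range(i + 1, n):
                factor = rng.uniform(1.0 - severity, 1.0 + severity)
                nxt[i][j] = nxt[j][i] = current[i][j] * factor
        instances.append(nxt)
        current = nxt
    return instances


base = [[0.0, 2.0, 9.0], [2.0, 0.0, 6.0], [9.0, 6.0, 0.0]]
run_a = dynamic_tsp_instances(base, num_changes=3, severity=0.2, seed=42)
run_b = dynamic_tsp_instances(base, num_changes=3, severity=0.2, seed=42)
print(run_a == run_b)  # True: same seed, same instance sequence
```

Publishing the seed together with the base instance and change parameters is then enough for another group to rebuild exactly the same dynamic problem.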

239 Views

One of the weak points of most deep-learning-based denoising algorithms is the training data. Because little or no ground-truth data is available, these algorithms are often evaluated using synthetic noise models such as additive zero-mean Gaussian noise. The downside of this approach is that such simple models do not represent the noise present in natural imagery.
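For reference, the additive zero-mean Gaussian noise model amounts to adding an independent sample from N(0, σ²) to every pixel, regardless of its value. The minimal sketch below works on a flattened list of 8-bit intensities; the function name, the clipping to [0, 255], and the `seed` parameter are our own assumptions.

```python
import random
from typing import List, Optional


def add_gaussian_noise(pixels: List[float], sigma: float,
                       seed: Optional[int] = None,
                       low: float = 0.0, high: float = 255.0) -> List[float]:
    """Return a noisy copy of `pixels` with i.i.d. N(0, sigma^2) noise added.

    Results are clipped to [low, high]; clipping biases the noise near the
    range limits, so it is no longer strictly zero-mean there.
    """
    if sigma < 0:
        raise ValueError("sigma must be non-negative")
    rng = random.Random(seed)
    return [min(high, max(low, p + rng.gauss(0.0, sigma))) for p in pixels]


clean = [0.0, 64.0, 128.0, 255.0]
noisy = add_gaussian_noise(clean, sigma=25.0, seed=0)
```

Because the noise here does not depend on the underlying intensity, it ignores signal-dependent components that real camera noise is generally understood to contain, which is one concrete way such a model can diverge from natural imagery.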

859 Views

A Raspberry Pi benchmarking dataset that monitors the CPU, GPU, memory, and storage of the devices. The dataset is associated with the paper "LwHBench: A low-level hardware component benchmark and dataset for Single Board Computers".

294 Views

We introduce a benchmark of distributed algorithm execution over big data. The datasets are composed of metrics about the computational impact (resource usage) of eleven well-known machine learning techniques on a real computational cluster, measured with system-resource-agnostic indicators: CPU consumption, memory usage, operating system process load, network traffic, and I/O operations. The metrics were collected every five seconds for each algorithm on five different data volume scales, totaling 275 distinct datasets.

1886 Views

We propose a new dataset, HazeRD, for benchmarking dehazing algorithms under realistic haze conditions. Unlike prior datasets that used synthetically generated images or indoor images with unrealistic haze-simulation parameters, our outdoor dataset allows for more realistic haze simulation, with parameters that are physically realistic and justified by scattering theory.
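Physically based haze simulation is commonly expressed with the atmospheric scattering model I = J·t + A·(1 − t), where J is the haze-free intensity, A the airlight, and t = exp(−β·d) the transmission at scene depth d. The minimal per-pixel sketch below assumes intensities in [0, 1] and converts a visual range V to the extinction coefficient β through the Koschmieder relation β = −ln(ε)/V with a 2% contrast threshold ε (β ≈ 3.912/V). These choices and the function names are assumptions for illustration, not necessarily the exact HazeRD pipeline.

```python
import math
from typing import List


def extinction_from_visibility(visibility_m: float,
                               contrast_threshold: float = 0.02) -> float:
    """Koschmieder relation beta = -ln(threshold) / V, in 1/m (about 3.912 / V for 2%)."""
    if visibility_m <= 0:
        raise ValueError("visibility must be positive")
    return -math.log(contrast_threshold) / visibility_m


def add_haze(clear: List[float], depth_m: List[float],
             beta: float, airlight: float = 1.0) -> List[float]:
    """Apply I = J * t + A * (1 - t) with transmission t = exp(-beta * d) per pixel."""
    if len(clear) != len(depth_m):
        raise ValueError("clear image and depth map must have the same size")
    hazy = []
    for j, d in zip(clear, depth_m):
        t = math.exp(-beta * d)  # fraction of scene radiance that survives scattering
        hazy.append(j * t + airlight * (1.0 - t))
    return hazy


beta = extinction_from_visibility(1000.0)  # 1 km visual range
hazy = add_haze([0.2, 0.2, 0.2], [10.0, 500.0, 1000.0], beta)
```

Pixels at greater depth are pushed closer to the airlight, so the same visibility setting produces depth-dependent haze rather than a uniform veil.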

4473 Views

This is a new image-based dataset of handwritten historical digits named ARDIS (Arkiv Digital Sweden). The images in the ARDIS dataset are extracted from 15,000 Swedish church records written by different priests with various handwriting styles in the nineteenth and twentieth centuries. The dataset consists of three single-digit datasets and one digit-string dataset. The digit-string dataset includes 10,000 samples in the Red-Green-Blue (RGB) color space, whereas the other datasets contain 7,600 single-digit images in different color spaces.

428 Views
