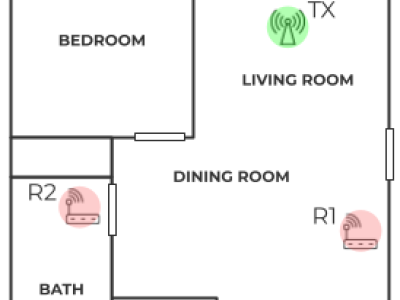

Assisting individuals in sports activities is an emerging area of wearable applications. Among the various kinds of sports, tennis poses unique challenges for stroke detection. We propose an approach that detects three tennis strokes (backhand, forehand, and serve) using a smartwatch. In our method, the smartwatch is part of a wireless network over which an inertial data file is transferred to a laptop, where data preprocessing and classification are performed. The data file contains acceleration and angular velocity data from the 3D accelerometer and gyroscope.
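
As a rough illustration of the preprocessing step, the sketch below splits six-axis inertial samples into overlapping windows and computes simple per-window statistics. This is not the method used in this work. The axis order (`ax, ay, az, gx, gy, gz`), the window size and step, the choice of features, and all function names are assumptions made for the example.

```python
import math
from statistics import mean, pstdev

# Hypothetical axis order: 3D acceleration followed by 3D angular velocity.
AXES = ("ax", "ay", "az", "gx", "gy", "gz")


def sliding_windows(samples, size, step):
    """Yield consecutive windows of `size` samples, advancing by `step`."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]


def window_features(window):
    """Return mean and standard deviation per axis, plus peak acceleration magnitude."""
    features = []
    for i in range(len(AXES)):
        column = [row[i] for row in window]
        features.append(mean(column))
        features.append(pstdev(column))
    # Peak magnitude of the acceleration vector within the window.
    peak = max(math.sqrt(r[0] ** 2 + r[1] ** 2 + r[2] ** 2) for r in window)
    features.append(peak)
    return features


def extract_features(samples, size=50, step=25):
    """Turn a list of six-axis samples into one feature vector per window."""
    return [window_features(w) for w in sliding_windows(samples, size, step)]
```

Each resulting feature vector could then be passed to a classifier of choice to label the window as backhand, forehand, or serve.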
