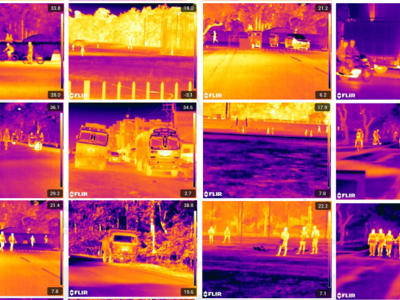

ITDAV-25 (Indian Thermal Dataset for Autonomous Vehicles), a thermal image dataset specifically curated to advance research in Advanced Driver Assistance Systems (ADAS), particularly for environments characterized by low visibility, night-time conditions, and inclement weather. The dataset comprises of 13,688 raw thermal images, collected without any synthetic augmentation techniques.

- Categories: