Gait

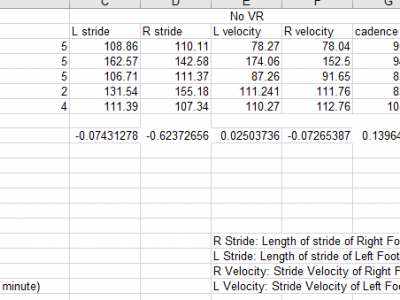

The dataset provides gait metrics, including stride length and stride velocity for the left and right foot and cadence, collected from human subjects in a controlled environment while they were presented with positive, negative, and neutral VR (virtual reality) scenes. The PHQ-9 score of each subject is collected and correlated with the gait score. Descriptive statistics such as the median are also reported for the difference in gait values across specific VR environments.


214 Views

This dataset consists of inertial, force, color, and LiDAR data collected from a novel sensor system. The system comprises three Inertial Measurement Units (IMUs) positioned on the waist and atop each foot, a color sensor on each outer foot, a LiDAR on the back of each shank, and a custom Force-Sensing Resistor (FSR) insole featuring 13 FSRs in each shoe. 20 participants wore this sensor system whilst performing 38 combinations of 11 activities on 9 different terrains, totaling over 7.8 hours of data.


413 Views


Background

Effective retraining of foot elevation and forward propulsion is essential in stroke survivors’ gait rehabilitation. However, home-based training often lacks valuable feedback. eHealth solutions based on inertial measurement units (IMUs) could offer real-time feedback on fundamental gait characteristics. This study aimed to investigate the effect of providing real-time feedback through an eHealth solution on foot strike angle (FSA) and forward propulsion in people with stroke.


31 Views

An understanding of local walking context plays an important role in the analysis of human gait and in the high-level control systems of robotic prostheses. Laboratory analysis on its own can limit researchers' ability to properly assess clinical gait in patients, and the ability of robotic prostheses to function well in many contexts; study in diverse walking environments is therefore warranted. A ground-truth understanding of the walking terrain is traditionally identified from simple visual data.


314 Views

We provide a large benchmark dataset consisting of about 3.5 million keystroke events, 57.1 million data points each for accelerometer and gyroscope, and 1.7 million data points for swipes. Data was collected between April 2017 and June 2017 after the required IRB approval. We share data from 117 participants, each in a session lasting between 2 and 2.5 hours, performing multiple activities such as typing (free and fixed text), gait (walking, upstairs, and downstairs), and swiping while using a desktop, phone, and tablet.


11057 Views
