Computer Vision

This dataset extends the Urban Semantic 3D (US3D) dataset developed and first released for the 2019 IEEE GRSS Data Fusion Contest (DFC19). We provide additional geographic tiles to supplement the DFC19 training data, along with new data for each tile, to enable training and validation of models that predict geocentric pose, defined as an object's height above ground and orientation with respect to gravity. We also supplement the DFC19 data from Jacksonville, Florida and Omaha, Nebraska with new geographic tiles from Atlanta, Georgia.

10914 Views

Cautionary traffic signs are of immense significance to traffic safety. In this study, a robust real-time approach to recognizing Indian Cautionary Traffic Signs (ICTS) is proposed. ICTS are all triangles with a white backdrop, a red border, and a black pattern. A dataset of 34,000 real-time images has been acquired under various environmental conditions and categorized into 40 distinct classes. Pre-processing techniques are used to transform RGB images to grayscale images and to enhance image contrast for better performance.
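The pre-processing described above can be sketched as follows. This is only an illustration: the abstract does not say which grayscale conversion or contrast-enhancement method the authors used, so the BT.601 luma weights and global histogram equalization below are assumptions, and the function names are hypothetical.

```python
import numpy as np


def rgb_to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 uint8 RGB image to an H x W uint8 grayscale image."""
    # Assumption: ITU-R BT.601 luma weights; the source does not name a formula.
    weights = np.array([0.299, 0.587, 0.114])
    gray = rgb.astype(np.float64) @ weights
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def equalize_histogram(gray: np.ndarray) -> np.ndarray:
    """Stretch contrast with global histogram equalization (an assumed method)."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # cumulative count at the darkest level present
    total = gray.size
    if total == cdf_min:
        # Constant image: there is no contrast to enhance.
        return gray.copy()
    # Map each intensity level through the normalized cumulative distribution.
    lut = np.rint((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]
```

Calling `equalize_histogram(rgb_to_gray(image))` on a low-contrast sign image spreads its intensities over the full 0 to 255 range.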

10330 Views

Solving the external perception problem for autonomous vehicles and driver-assistance systems requires accurate and robust driving scene perception in both regularly occurring driving scenarios (termed “common cases”) and rare outlier driving scenarios (termed “edge cases”). To develop and evaluate driving scene perception models at scale and, more importantly, to cover potential edge cases from the real world, we take advantage of the MIT-AVT Clustered Driving Scene Dataset and build a subset for the semantic scene segmentation task.

4772 Views

Semantic scene segmentation has primarily been addressed by forming representations of single images, with both supervised and unsupervised methods. The problem of semantic segmentation in dynamic scenes has recently begun to receive attention through video object segmentation approaches. What is not known is how much extra information the temporal dynamics of the visual scene carry that is complementary to the information available in the individual frames of the video.

8651 Views

Synthetic Aperture Radar (SAR) images can be extensively informative owing to their resolution and availability. However, removing speckle noise from these images requires several pre-processing steps. In recent years, deep learning-based techniques have brought significant improvements in denoising and image restoration. However, further research has been hampered by the lack of data suitable for training deep neural network-based systems. With this paper, we propose a standard synthetic dataset for training speckle reduction algorithms.

3910 Views

This is the data for the paper "Environmental Context Prediction for Lower Limb Prostheses with Uncertainty Quantification", published in IEEE Transactions on Automation Science and Engineering, 2020. DOI: 10.1109/TASE.2020.2993399. For more details, please refer to https://research.ece.ncsu.edu/aros/paper-tase2020-lowerlimb.

1184 Views

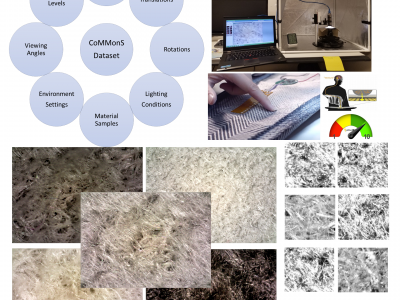

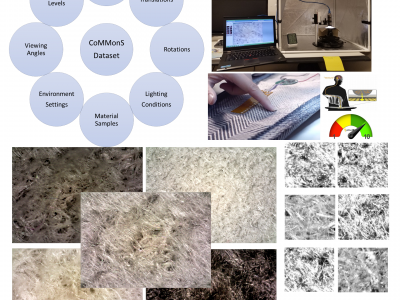

As one of the research directions at OLIVES Lab @ Georgia Tech, we focus on recognizing textures and materials in real-world images, which plays an important role in object recognition and scene understanding. Aiming to describe objects or scenes in more detail, we explore how to computationally characterize apparent or latent properties (e.g., surface smoothness) of materials, i.e., computational material characterization, which goes a step beyond material recognition.

962 Views

As one of the research directions at OLIVES Lab @ Georgia Tech, we focus on recognizing textures and materials in real-world images, which plays an important role in object recognition and scene understanding. Aiming to describe objects or scenes in more detail, we explore how to computationally characterize apparent or latent properties (e.g., surface smoothness) of materials, i.e., computational material characterization, which goes a step beyond material recognition.

278 Views

This aerial image dataset consists of more than 22,000 independent buildings extracted from aerial images with 0.0075 m spatial resolution, covering 450 km^2 in Christchurch, New Zealand. Most of the aerial images are down-sampled to 0.3 m ground resolution and cropped into 8,189 non-overlapping tiles of 512 × 512 pixels. These tiles make up the whole dataset. They are split into three parts: 4,736 tiles for training, 1,036 tiles for validation and 2,416 tiles for testing.
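Cropping a large image into non-overlapping 512 × 512 tiles, as described above, might look like the sketch below. It is an illustration only, not the dataset authors' tooling: the function name `crop_tiles` is hypothetical, and dropping partial tiles at the right and bottom edges is an assumption.

```python
from typing import List

import numpy as np


def crop_tiles(image: np.ndarray, tile: int = 512) -> List[np.ndarray]:
    """Split an H x W (x C) image into non-overlapping tile x tile crops.

    Assumption: edge regions smaller than a full tile are discarded.
    """
    rows = image.shape[0] // tile
    cols = image.shape[1] // tile
    return [
        image[r * tile:(r + 1) * tile, c * tile:(c + 1) * tile]
        for r in range(rows)
        for c in range(cols)
    ]
```

For a 1100 × 1600 image this produces a 2 × 3 grid of full tiles, and the leftover strip along each edge is ignored.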

383 Views

This dataset contains "Pristine" and "Distorted" videos recorded in different places. The videos were recorded with three distortions: "Focus", "Exposure" and "Focus + Exposure". Each distortion was applied at low (1), medium (2) and high (3) levels, which together with the Pristine videos gives a total of 10 conditions. In addition, the distorted videos were exported at three quality levels of the H.264 compression format used in the DIGIFORT software: High Quality (HQ, H.264 at 100%), Medium Quality (MQ, H.264 at 75%) and Low Quality

1186 Views
