MIT DriveSeg (Semi-auto) Dataset

- Citation Author(s):

- Li Ding (MIT), Michael Glazer (MIT), Jack Terwilliger (MIT), Bryan Reimer (MIT), Lex Fridman (MIT)

- Submitted by:

- Meng Wang

- Last updated:

- DOI:

- 10.21227/nb3n-kk46

- Data Format:

4927 views


- Categories:

- Keywords:

Abstract

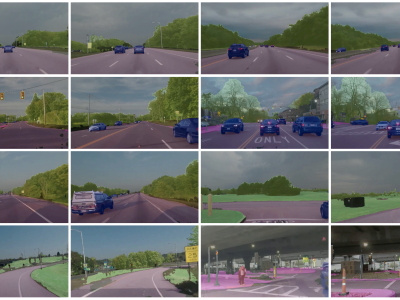

Solving the external perception problem for autonomous vehicles and driver-assistance systems requires accurate and robust driving scene perception in both regularly occurring driving scenarios (termed “common cases”) and rare outlier driving scenarios (termed “edge cases”). To develop and evaluate driving scene perception models at scale and, more importantly, to cover potential edge cases from the real world, we take advantage of the MIT-AVT Clustered Driving Scene Dataset and build a subset for the semantic scene segmentation task. We hereby present the MIT DriveSeg (Semi-auto) Dataset: a large-scale video driving scene dataset that contains 20,100 video frames with pixel-wise semantic annotation. We propose semi-automatic annotation approaches that combine manual and computational effort to annotate the data more efficiently and at lower cost than manual annotation.

Instructions:

The MIT DriveSeg (Semi-auto) Dataset is a forward-facing, frame-by-frame, pixel-level semantically labeled dataset (coarsely annotated through a novel semi-automatic annotation approach), captured from moving vehicles driving in a range of real-world scenarios drawn from MIT Advanced Vehicle Technology (AVT) Consortium data.

Technical Summary

Video data - Sixty-seven 10-second 720p (1280x720) 30 fps videos (20,100 frames)

Class definitions (12) - vehicle, pedestrian, road, sidewalk, bicycle, motorcycle, building, terrain (horizontal vegetation), vegetation (vertical vegetation), pole, traffic light, and traffic sign
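The figures in the summary above can be checked and put to use with a short Python sketch. It reproduces the frame count (67 videos x 10 seconds x 30 fps) and lists the 12 class names. The integer IDs assigned here simply follow the listing order and are an assumption made for illustration; this page does not state how the released annotation files encode classes, and the helper names `total_frames` and `class_pixel_counts` are hypothetical.

```python
# Values stated in the Technical Summary.
NUM_VIDEOS = 67
SECONDS_PER_VIDEO = 10
FPS = 30
FRAME_WIDTH, FRAME_HEIGHT = 1280, 720

# The 12 class names, in the order the summary lists them.
CLASS_NAMES = [
    "vehicle", "pedestrian", "road", "sidewalk", "bicycle", "motorcycle",
    "building", "terrain", "vegetation", "pole", "traffic light", "traffic sign",
]

# ASSUMPTION: IDs follow listing order. The dataset's real label encoding
# is not given on this page and may differ.
CLASS_TO_ID = {name: i for i, name in enumerate(CLASS_NAMES)}


def total_frames(num_videos=NUM_VIDEOS, seconds=SECONDS_PER_VIDEO, fps=FPS):
    """Number of frames implied by the video count, duration, and frame rate."""
    return num_videos * seconds * fps


def class_pixel_counts(mask, num_classes=len(CLASS_NAMES)):
    """Count pixels per class in a 2-D mask given as nested lists of int IDs.

    IDs outside [0, num_classes) are ignored.
    """
    counts = [0] * num_classes
    for row in mask:
        for label in row:
            if 0 <= label < num_classes:
                counts[label] += 1
    return counts


if __name__ == "__main__":
    print("frames:", total_frames())
    print("classes:", len(CLASS_NAMES))
```

A per-class pixel count like this is a common first step when inspecting class balance in a segmentation dataset; in practice the mask would be loaded from an annotation image rather than built by hand.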

Technical Specifications, Open Source Licensing and Citation Information

Ding, L., Glazer, M., Terwilliger, J., Reimer, B. & Fridman, L. (2020). MIT DriveSeg (Semi-auto) Dataset: Large-scale Semi-automated Annotation of Semantic Driving Scenes. Massachusetts Institute of Technology AgeLab Technical Report 2020-2, Cambridge, MA. (pdf)

Ding, L., Terwilliger, J., Sherony, R., Reimer, B. & Fridman, L. (2020). MIT DriveSeg (Manual) Dataset. IEEE Dataport. DOI: 10.21227/nb3n-kk46.

Attribution and Contact Information

This work was done in collaboration with the Toyota Collaborative Safety Research Center (CSRC). For more information, click here.

For any questions related to this dataset, or for requests to remove identifying information, please contact driveseg@mit.edu.