Open Access

VibTac-12: Texture Dataset Collected by Tactile Sensors

- Submitted by: Ahmad Patooghy

- Last updated: Tue, 05/17/2022 - 22:21

- DOI: 10.21227/kwsy-x398


Abstract

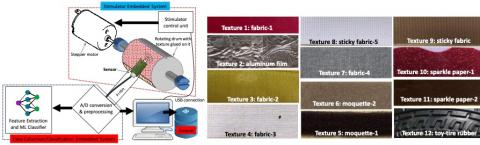

Tactile perception of material properties in real time using tiny embedded systems is a challenging task, and it is of great importance for dexterous object manipulation in fields such as robotics, prosthetics, and augmented reality [1-4]. As the psychophysical dimensions of material properties cover a wide range of percepts, embedded tactile perception systems require efficient signal feature extraction and classification techniques to process the signals collected by tactile sensors in real time. We have limited our study to the machine perception and discrimination of textures that can be sensed by sensors attached to a probe (stick) touching the material surface at a single point of contact.

For this purpose, we developed two embedded systems: one that serves as a vibrotactile stimulator and one that records and classifies the vibrotactile signals collected by its sensors. As the probe rubs against the surface of the textured material on the stimulator, the sensors attached to the probe capture the vibrotactile signals for real-time classification. The probe is 3D printed with high printing density so that it transmits the vibrations at its tip without distortion.

Our study has been submitted under the title “An Embedded System for Collection and Real-time Classification of a Tactile Dataset”, in which the data are further elaborated and analyzed using the proposed signal feature extraction method and the Fourier transform as inputs to machine learning classifiers. We performed experiments both offline and in real time on the proposed embedded platform. Given the limited memory and performance budget of the embedded system used in this study, we chose a 3-axis accelerometer (MMA-7660 from NXP [5]) and an electret condenser microphone (CMA-4544PF-W from CUI [6]) as the sources of the recordings in our tactile dataset.

We used commercial off-the-shelf embedded boards and electrical components (AVR-based embedded boards, stepper motors, etc.) as well as mechanical components that we designed and 3D printed ourselves (including the rotating drum to which the texture strips are glued). The collected tactile dataset (VibTac-12) has 12 texture classes, including sandpapers of various grits, Velcro strips of various thicknesses, aluminum foil, and rubber bands of various stickiness. For each texture, 20 seconds of recordings were collected (corresponding to nearly five rotations of the drum). We used a sampling rate of 200 Hz for the accelerometer to collect the vibration data and 8 kHz for the microphone to collect the sound data. We used the dataset to show that low-cost, highly accurate, real-time tactile texture classification can be achieved on embedded systems using an ensemble of sensors, efficient feature extraction methods [7], and simple machine learning classifiers [8].
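The abstract names the Fourier transform as one of the inputs to the classifiers. The following is a minimal sketch of that idea only, not the authors' proposed feature extraction method: it computes a one-sided magnitude spectrum with a naive discrete Fourier transform so that it runs on the standard library alone (an FFT routine would normally be used instead). The function names, the one-second frame length, and the synthetic 25 Hz test signal are all assumptions made for illustration.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Magnitudes of the one-sided DFT (bins 0..N/2) of a real-valued frame."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(frame))) / n
        for k in range(n // 2 + 1)
    ]


def bin_frequency_hz(k, n, rate_hz):
    """Centre frequency of DFT bin k for an n-sample frame sampled at rate_hz."""
    return k * rate_hz / n


RATE_HZ = 200  # accelerometer sampling rate stated in the abstract
N = 200        # one-second frame; an illustrative choice, not from the dataset

# Synthetic 25 Hz vibration standing in for one accelerometer frame.
frame = [math.sin(2 * math.pi * 25 * t / RATE_HZ) for t in range(N)]

spectrum = magnitude_spectrum(frame)
peak_bin = max(range(len(spectrum)), key=spectrum.__getitem__)
peak_hz = bin_frequency_hz(peak_bin, N, RATE_HZ)
```

At 200 Hz the spectrum of an accelerometer frame spans 0 to 100 Hz, so a frame of N samples yields N/2 + 1 magnitude values that could serve as a feature vector for a classifier.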

1- J. C. Gwilliam, Z. Pezzementi, E. Jantho, A. M. Okamura, and S. Hsiao, “Human vs. robotic tactile sensing: Detecting lumps in soft tissue,” in 2010 IEEE Haptics Symposium, March 2010, pp. 21–28.

2- S. Okamoto, H. Nagano, and H.-N. Ho, “Psychophysical dimensions of material perception and methods to specify textural space,” in Pervasive Haptics, H. Kajimoto, S. Saga, and M. Konyo, Eds. Tokyo: Springer Japan, 2016.

3- W. Duchaine, “Why tactile intelligence is the future of robotic grasping,” in IEEE Spectrum Automaton. IEEE, 2016.

4- A. Schmitz, Y. Bansho, K. Noda, H. Iwata, T. Ogata, and S. Sugano, “Tactile object recognition using deep learning and dropout,” in 2014 IEEE-RAS International Conference on Humanoid Robots. IEEE, 2014, pp. 1044–1050.

5- “3-axis orientation/motion detection sensor,” NXP Semiconductor, Document Number: MMA7660FC, 2012. [Online]. Available: https://www.nxp.com/docs/en/data-sheet/MMA7660FC.pdf

6- “Electret condenser microphone sensor,” CUI Devices, Document Number: CMA-4544PF-W, 2013. [Online]. Available: https://www.mouser.com/datasheet/2/670/cma-4544pf-w-1309465.pdf

7- E. Alpaydin, Introduction to Machine Learning, 3rd ed. Cambridge, MA: The MIT Press, 2014.

8- M. Fernandez-Delgado, E. Cernadas, S. Barro, and D. Amorim, “Do we need hundreds of classifiers to solve real world classification problems?” Journal of Machine Learning Research, vol. 15, pp. 3133–3181, 2014. [Online]. Available: http://jmlr.org/papers/v15/delgado14a.html

The dataset contains four CSV files (X, Y, Z, and S), one per sensor channel. X, Y, and Z hold the recordings from the three axes of the accelerometer, and S holds the sound recordings from the electret condenser microphone. Each file has one line per texture class, 12 lines in total. Because 20 seconds of recordings were collected for each texture, each line in the X, Y, and Z files has 4,000 samples (20 s × 200 Hz) and each line in the S file has 160,000 samples (20 s × 8 kHz).

Training and test sets for machine learning classifiers can be created by cutting short frames out of these recordings and applying signal feature extraction. For example, the first 400 columns of the 12th row of X.csv and the first 16,000 columns of the 12th row of S.csv both correspond to the first 2 seconds of the recordings for texture class 12. The Python programs we have developed will be made available upon request.
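The frame-cutting step described above can be sketched as follows. This is not the authors' code: the helper names `read_rows` and `cut_frames` are hypothetical, and the sketch assumes the files are comma-separated with no header row, which the description does not state. A synthetic stand-in for X.csv is used so the example runs without the download.

```python
import csv
import io


def read_rows(csv_file):
    """Parse one recording file: one row per texture class, one column per sample."""
    return [[float(v) for v in row if v.strip()]
            for row in csv.reader(csv_file) if row]


def cut_frames(samples, frame_len, hop=None):
    """Split one recording into fixed-length frames; hop defaults to frame_len (no overlap)."""
    hop = hop or frame_len
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


ACC_RATE_HZ = 200    # accelerometer sampling rate stated above
MIC_RATE_HZ = 8000   # microphone sampling rate stated above
FRAME_SECONDS = 2    # illustrative frame length, not prescribed by the dataset

# Synthetic stand-in for X.csv: 12 rows (classes) of 4,000 samples each.
fake_x = io.StringIO(
    "\n".join(",".join(str(c) for _ in range(4000)) for c in range(12))
)
x_rows = read_rows(fake_x)

# Row index 11 is the 12th row, i.e. texture class 12.
frames = cut_frames(x_rows[11], ACC_RATE_HZ * FRAME_SECONDS)
labels = [12] * len(frames)
```

With the real files, `read_rows` would be called on an opened file instead, for example `with open("X.csv", newline="") as f: x_rows = read_rows(f)`. Using the same `FRAME_SECONDS` with `MIC_RATE_HZ` on a row of S.csv gives 16,000-sample frames that cover the same time spans as the 400-sample accelerometer frames.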

Please cite the dataset and accompanying paper if you use this dataset:

- Kursun, O. and Patooghy, A. (2020) "An Embedded System for Collection and Real-time Classification of a Tactile Dataset", IEEE Access (accepted for publication).

- Kursun, O. and Patooghy, A. (2020) "Texture Dataset Collected by Tactile Sensors", IEEE Dataport, 2020.

Dataset Files

dataset.zip (9.63 MB)
