Image Processing

The STP dataset is a dataset for Arabic text detection on traffic panels in the wild. It was collected in Sfax, Tunisia, the country's second-largest city after the capital. A total of 506 images were collected manually, one by one, each presenting challenges for Arabic text detection in natural scene images that reflect the real complexity of 15 different routes as well as ring roads, roundabouts, intersections, an airport, and highways.

230 Views

This synthetic dataset (phantom) consists of three databases of JPG images in the two-dimensional (2-D) domain, identified as follows:

DB1: Ground Truth

DB2: Speckle noise with zero mean and 0.005 standard deviation

DB3: Speckle noise with zero mean and 0.05 standard deviation
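The relationship between the ground truth (DB1) and the noisy databases (DB2, DB3) can be sketched as follows. This is only an illustration under stated assumptions: it uses the common multiplicative speckle model, noisy = image + image * n with n drawn from a zero-mean Gaussian, and a flat placeholder image in place of the real phantom. The function name `add_speckle` and the placeholder array are hypothetical, and the listing does not say which tool or exact model produced the dataset.

```python
import numpy as np

def add_speckle(image, std, rng=None):
    """Apply multiplicative speckle: noisy = image + image * n, n ~ N(0, std^2).

    Assumes `image` holds intensities scaled to [0, 1].
    """
    rng = np.random.default_rng() if rng is None else rng
    img = np.asarray(image, dtype=np.float64)
    noise = rng.normal(loc=0.0, scale=std, size=img.shape)
    # Clip so the result stays a valid intensity image.
    return np.clip(img + img * noise, 0.0, 1.0)

rng = np.random.default_rng(0)
# Hypothetical stand-in for a DB1 ground-truth image (flat mid-gray).
ground_truth = np.full((64, 64), 0.5)
db2_like = add_speckle(ground_truth, 0.005, rng)  # zero mean, std 0.005
db3_like = add_speckle(ground_truth, 0.05, rng)   # zero mean, std 0.05
```

Because the noise is multiplied by the image, brighter regions receive larger perturbations than darker ones, which is the defining property of speckle as opposed to additive Gaussian noise.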

110 Views

The paper by Samar Mahmoud, Yasmine Arafaf, et al. presents a novel dataset called the "Abnormal High-Density Crowd Dataset," which addresses the challenge of anomaly detection in crowded environments. It focuses in particular on high-density crowds, an area that has received limited exploration in computer vision and crowd behaviour understanding. The dataset is introduced with considerations for privacy, annotation accuracy, and preprocessing.

265 Views

This work presents a large-scale, three-fold annotated, low-cost microscopy image dataset of potato tubers for plant cell analysis within a deep learning (DL) framework, which has great potential to advance plant cell biology research. Low-cost microscopes coupled with new-generation smartphones could open new avenues in DL-based microscopy image analysis, offering benefits that include portability, ease of use, and ease of maintenance.

235 Views

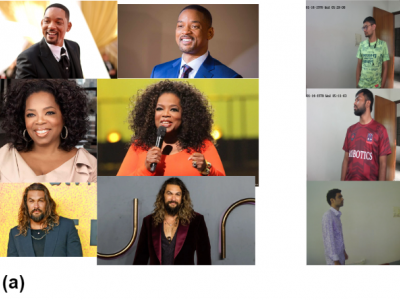

FaceEngine is a face recognition database for use in CCTV-based video surveillance systems. The dataset contains high-resolution face images of around 500 celebrities, as well as images captured by a CCTV camera. Each person's folder holds more than 10 images of that person, from which face features can be extracted. The dataset also includes test videos that can be used to test a system. Each unique ID contains high-resolution images that may help in testing a CCTV surveillance system or training a face detection model.

817 Views

Low-light images and video footage often exhibit artifacts caused by the interplay of camera parameters such as aperture, shutter speed, and ISO. These interactions can lead to distortions, especially in extreme lighting conditions. The distortion arises mainly because photon noise grows relative to the signal as light intensity decreases, and it is amplified further by higher sensor gain. Secondary characteristics such as white balance and color rendering can also be adversely affected and may require correction in post-processing.
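The effect of light level and gain on noise can be illustrated with a small simulation. The sketch below assumes a simplified sensor model in which photon arrivals follow a Poisson distribution and gain is a plain multiplier; read noise, quantization, and color processing are ignored. The function names and photon counts are hypothetical and are not taken from the dataset.

```python
import numpy as np

def simulate_capture(mean_photons, gain, rng, shape=(256, 256)):
    """Simulate a flat scene: Poisson photon counts scaled by sensor gain."""
    photons = rng.poisson(lam=mean_photons, size=shape)
    return gain * photons.astype(np.float64)

def snr(signal):
    """Signal-to-noise ratio of a flat patch: mean divided by std."""
    return signal.mean() / signal.std()

rng = np.random.default_rng(0)
# Bright scene: many photons per pixel, unity gain.
bright = simulate_capture(mean_photons=1000.0, gain=1.0, rng=rng)
# Dim scene: 100x fewer photons, with 100x gain to reach the same brightness.
dim = simulate_capture(mean_photons=10.0, gain=100.0, rng=rng)

print(f"bright SNR: {snr(bright):.1f}, dim SNR: {snr(dim):.1f}")
```

Under this model the signal-to-noise ratio is approximately the square root of the mean photon count, so raising the gain restores brightness but amplifies the noise along with the signal rather than recovering the lost ratio.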

2073 Views

The dataset consists of six .mat files containing three surveillance video test sequences, Hall_qcif_330 (Hall, 330 frames), PETS2009_S1L1-View_001 (PETS, 100 frames), and Crosswalk (CW, 270 frames), together with the corresponding background image for each of the three videos (only the gray channel component of each video is included). Hall is shot indoors and disturbed by noise, PETS is shot outdoors with less noise, and CW is shot outdoors with heavy noise interference. Hall and PETS are foreground-sparse videos with small objects. CW is a foreground-dense video with dramatic changes in sparsity. All the video

308 Views

The following videos were restored using a video streaming completion model that combines static and dynamic information for real-time processing. The proposed model is solved using the alternating direction method of multipliers (ADMM), with the solution recovered in MATLAB.

Gray video suzie: restored with missing rates of 70%, 80%, and 90%, respectively

Color video Hall: restored with missing rates of 70%, 80%, and 90%, respectively
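To make the missing rates concrete, the sketch below builds an observation mask that hides a given fraction of a video's entries. It assumes entries are dropped uniformly at random, which the listing does not state, and it uses a random array as a stand-in for a real video. The function name `random_missing_mask` and the array dimensions are hypothetical, and the completion model itself is not reproduced here.

```python
import numpy as np

def random_missing_mask(shape, missing_rate, rng):
    """Return a boolean mask: True marks observed entries, False missing ones.

    Exactly round(missing_rate * size) entries are marked as missing.
    """
    total = int(np.prod(shape))
    n_missing = int(round(missing_rate * total))
    mask = np.ones(total, dtype=bool)
    mask[rng.choice(total, size=n_missing, replace=False)] = False
    return mask.reshape(shape)

rng = np.random.default_rng(0)
# Hypothetical stand-in for a gray video: height x width x frames.
video = rng.random((36, 44, 10))

for rate in (0.7, 0.8, 0.9):
    mask = random_missing_mask(video.shape, rate, rng)
    observed = np.where(mask, video, 0.0)  # missing entries set to zero
    print(f"missing rate {rate:.0%}: {mask.mean():.0%} of entries observed")
```

A completion method would then receive only `observed` and `mask` and attempt to estimate the hidden entries. At a 90% missing rate just one entry in ten is available as input.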

86 Views

Multi-modality image fusion aims to combine diverse images into a single image that captures important targets and intricate texture details and that supports advanced visual tasks. Existing fusion methods often overlook the complementarity of different modalities by treating source images uniformly and extracting similar features. This study introduces a distributed optimization model that leverages a collection of images and their significant features stored in distributed storage. To solve this model, we employ the distributed Alternating Direction Method of Multipliers (ADMM) algorithm.

29 Views

The "Multi-modal Sentiment Analysis Dataset for Urdu Language Opinion Videos" is a valuable resource aimed at advancing research in sentiment analysis, natural language processing, and multimedia content understanding. This dataset is specifically curated to cater to the unique context of Urdu language opinion videos, a dynamic and influential content category in the digital landscape.

Dataset Description:

283 Views
