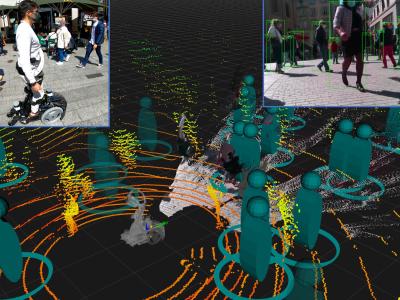

3D point cloud and RGBD of pedestrians in robot crowd navigation: detection and tracking

- Citation Author(s): Yujie He (EPFL), David Gonon (EPFL), Lukas Huber (EPFL)

- Submitted by: Diego Felipe Paez Granados

- DOI: 10.21227/ak77-d722

2611 views

Abstract

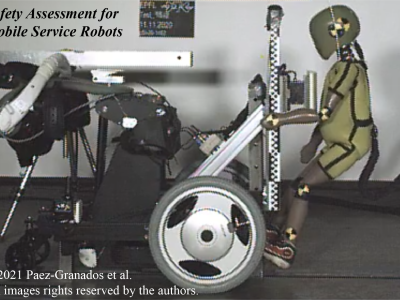

The dataset, crowdbot, presents outdoor pedestrian tracking from onboard sensors on a personal mobility robot navigating in crowds. The robot Qolo, a personal mobility vehicle for people with lower-body impairments, was equipped with a reactive navigation controller operating in shared-control or autonomous mode while navigating three different streets of the city of Lausanne, Switzerland, during farmer's market days and a Christmas market. Full dataset: DOI:10.21227/ak77-d722

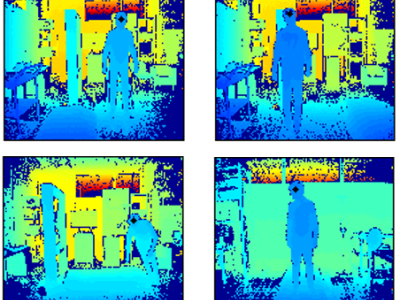

The dataset includes point clouds from a front and a rear 3D LIDAR (Velodyne VLP-16) at 20 Hz, and a front-facing RGBD camera (RealSense D435). The data comprise over 250k frames recorded in crowds ranging from light densities of 0.1 ppsm (people per square meter) to dense crowds of over 1.0 ppsm. We also provide the robot state (pose and velocity) and contact sensing from a force/torque sensor (Botasys Rokubi 2.0).
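To make the ppsm unit concrete, the following is a minimal sketch of one way to estimate crowd density around the robot: count the detected people inside a circle centred on the robot and divide by the circle's area. The function name `crowd_density`, the 2D position layout, and the evaluation radius are assumptions made for illustration; the dataset's own density estimate may be computed differently.

```python
import numpy as np


def crowd_density(people_xy, robot_xy, radius=5.0):
    """Estimate people per square meter (ppsm) within `radius` meters of the robot.

    people_xy: iterable of (x, y) positions in meters (assumed layout).
    robot_xy:  (x, y) position of the robot in the same frame.
    radius:    evaluation radius in meters (hypothetical default).
    """
    people_xy = np.asarray(people_xy, dtype=float).reshape(-1, 2)
    dists = np.linalg.norm(people_xy - np.asarray(robot_xy, dtype=float), axis=1)
    count = int(np.count_nonzero(dists <= radius))
    return count / (np.pi * radius ** 2)


# Hypothetical frame: four detections, three of them within 2 m of the robot.
people = [[1.0, 0.0], [0.0, 1.5], [-1.0, -1.0], [4.0, 4.0]]
density = crowd_density(people, robot_xy=[0.0, 0.0], radius=2.0)
print(f"{density:.3f} ppsm")
```

The result depends strongly on the chosen radius, so densities are only comparable when computed over the same area.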

We provide metadata for people detection and tracking from onboard real-time sensing (DrSPAAM detector), 3D point clouds labelled with the people class (AB3DMOT), estimated crowd density, proximity to the robot, and path efficiency of the robot controller (time to goal, path length, and virtual collisions).
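As an illustration of the path-efficiency and proximity quantities listed above, here is a hedged sketch that computes path length, time to goal, and the closest pedestrian distance from plain arrays. The function names and the array layouts are hypothetical and are not taken from the released evaluation tools. Virtual collisions are left out because their exact definition is not stated here.

```python
import numpy as np


def path_length(xy):
    """Sum of Euclidean distances between consecutive (x, y) robot positions."""
    xy = np.asarray(xy, dtype=float)
    return float(np.sum(np.linalg.norm(np.diff(xy, axis=0), axis=1)))


def time_to_goal(timestamps):
    """Elapsed time in seconds between the first and last pose timestamp."""
    t = np.asarray(timestamps, dtype=float)
    return float(t[-1] - t[0])


def min_proximity(robot_xy, people_xy):
    """Distance in meters from the robot to the closest person (inf if none)."""
    people_xy = np.asarray(people_xy, dtype=float).reshape(-1, 2)
    if people_xy.shape[0] == 0:
        return float("inf")
    robot_xy = np.asarray(robot_xy, dtype=float)
    return float(np.min(np.linalg.norm(people_xy - robot_xy, axis=1)))


# Hypothetical trajectory with three poses and matching timestamps in seconds.
trajectory = [[0.0, 0.0], [3.0, 4.0], [3.0, 10.0]]
stamps = [0.0, 5.0, 11.0]

length = path_length(trajectory)
duration = time_to_goal(stamps)
closest = min_proximity([0.0, 0.0], [[3.0, 4.0], [6.0, 8.0]])
print(length, duration, closest)
```

Dividing the straight-line distance between start and goal by `length` would give a simple relative efficiency measure, again as an assumption rather than the dataset's definition.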

Each recording in the dataset contains approximately 120 s of data in rosbag format for Qolo's sensors, as well as data in npy format for easy reading and access. All code for metadata processing and extraction of the raw files is provided open access: epfl-lasa/crowdbot-evaluation-tools
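The npy files can be read with NumPy alone. The sketch below writes a small stand-in file and loads it back, since the actual file names and array layouts are not described here; the name `00000.npy` and the `(N, 3)` point layout are assumptions. It also converts a frame count to a duration using the stated 20 Hz LIDAR rate.

```python
import tempfile
from pathlib import Path

import numpy as np

LIDAR_RATE_HZ = 20  # frame rate stated in the dataset description


def load_frame(path):
    """Load one .npy array; allow_pickle=False refuses pickled Python objects."""
    return np.load(path, allow_pickle=False)


def recording_duration_s(num_frames, rate_hz=LIDAR_RATE_HZ):
    """Duration in seconds of `num_frames` frames captured at `rate_hz`."""
    return num_frames / rate_hz


with tempfile.TemporaryDirectory() as tmp:
    # Stand-in for a dataset file: hypothetical name and (N, 3) xyz layout.
    frame_path = Path(tmp) / "00000.npy"
    np.save(frame_path, np.zeros((100, 3), dtype=np.float32))
    points = load_frame(frame_path)

print(points.shape, points.dtype)
print(recording_duration_s(2400))
```

Files that store Python objects such as dictionaries would need `allow_pickle=True`, which should only be used on files from a trusted source. With these assumptions, 2400 frames correspond to the approximately 120 s of one recording, and 250k frames to roughly 208 minutes if the frame count refers to 20 Hz LIDAR frames.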

- 250k frames of data (over 200 minutes of recordings)

- Qolo robot state: localization, pose, velocity, controller state

- 2x 3D point cloud (VLP-16)

- 1x RGBD (image and depth) camera (RealSense D435)

- 3x people detectors (DrSPAAM, Yolo)

- 1x tracker

- Contact forces (Botasys Rokubi 2.0)

Note: the current data do not contain the RGBD images, as they are being processed for face blurring. Nonetheless, the Yolo people detections are included.

The RGBD images with bounding boxes will be uploaded as soon as the process is completed.

Cite as: Paez-Granados D., He Y., Gonon D., Huber L., & Billard A. (2021). “3D point cloud and RGBD of pedestrians in robot crowd navigation: detection and tracking.” IEEE Dataport, Dec. 2021. doi: https://dx.doi.org/10.21227/ak77-d722

Instructions:

The file structure is as follows:

- docs/: documentation

- qolo/: codespace for crowdbot evaluation

- notebook/: example notebooks for demo

- sh_scripts/: shell scripts to execute pipelines for extracting source data, applying algorithms, and evaluating with different metrics

Dataset Files

- NPY - Full dataset with all LIDAR (Size: 58.3 GB)

- 1203_shared_control_processed.zip (Size: 173.29 MB)

- 1203_shared_control_lidars.zip (Size: 7.16 GB)

- Example-1: Metadata from 13 recordings of shared control navigation in a dense crowd (1 ppsm) (Size: 1.33 GB)

- Example-1: Raw 3D LIDAR (x2) data (Size: 31.97 MB)

- Example-2: Raw 3D LIDAR (x2) data (Size: 14.14 MB)

- Example-2: Metadata from 2 recordings during manual driving in a dense crowd (Size: 10.5 GB)

- Onboard_RGBD_video (x2) (Size: 7.08 GB)

- rosbags_25_03_rds no_rbgd (Size: 28.74 GB)

- rosbags_25_03_sc no_rbgd (Size: 13.52 GB)

- rosbags_27_03_sc no_rbgd (Size: 18.84 GB)

- rosbags_10_04_mds no_rbgd (Size: 11.84 GB)

- rosbags_10_04_rds no_rbgd (Size: 7.99 GB)

- rosbags_10_04_sc no_rbgd (Size: 6.79 GB)

- rosbags_24_04_mds no_rbgd (Size: 7.99 GB)

- rosbags_24_04_rds no_rbgd (Size: 1.61 GB)

- rosbags_24_04_sc no_rbgd (Size: 14.04 GB)

- rosbags_03_12_manual no_rbgd (Size: 8.98 GB)

- rosbags_03_12_sc no_rbgd (Size: 27.42 GB)

- rosbags_03_12_manual-rgbd_defaced (Size: 36.83 GB)

- rosbags_10_04_sc-rgbd_defaced (Size: 15.29 GB)

- rosbags_10_04_mds-rgbd_defaced (Size: 22.85 GB)

- rosbags_25_03_sc-rgbd_defaced (Size: 17.44 GB)