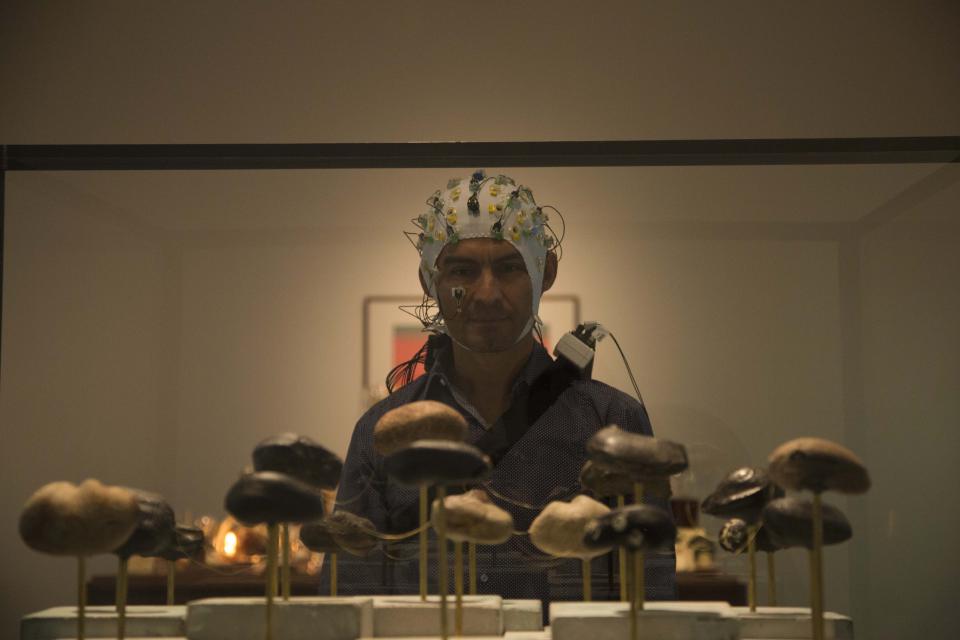

Mobile EEG Recordings in an Art Museum Setting

- Citation Author(s): Jesus G. Cruz-Garza, Justin A Brantley, Sho Nakagome, Kim Kontson, Dario Robleto, Jose L. Contreras-Vidal

- Submitted by: Justin Brantley

- Last updated: Fri, 11/22/2024 - 10:23

- DOI: 10.21227/H2TM00

- Views: 4058

Abstract

Recent advances in scalp electroencephalography (EEG) as a neuroimaging tool have allowed researchers to overcome the technical challenges and movement restrictions typical of traditional neuroimaging studies. Mobile EEG devices have enabled studies of cognition and motor control in natural environments that require mobility, such as art perception and production in a museum setting and locomotion tasks. Nevertheless, datasets that include brain activity (EEG) acquired ‘in action and in context’ in complex real settings are not readily available to the scientific community. To address this need, we acquired data from 431 participants (208 males/223 females; age range 6-81 years) using five mobile EEG systems (four dry and one gel-based) while the volunteers behaved freely as they viewed the exhibit ‘The Boundary of Life is Quietly Crossed’ by Dario Robleto (Cruz-Garza et al., 2017; Kontson et al., 2015). Indoor positioning data, demographic data, and questionnaire data about art preference and other variables were also acquired. A total of 207 subjects completed the questionnaire. The experiment took place at the Menil Collection in Houston, Texas, USA over 22 weeks. Due to technical issues resulting from the unconstrained and unsupervised nature of the study (e.g., poor wireless communication, file corruption, sensor disconnection, low system battery), the de-identified multimodal dataset contains EEG data from only 134 individuals (77 males/53 females; age range 15-77 years) who participated in the art viewing task (Cruz-Garza et al., 2017; Kontson et al., 2015). Using these datasets, we evaluated the signal quality and usability of each of the devices in a natural environment (Cruz-Garza et al., 2017), and identified neural signatures within each of the datasets related to the aesthetic experience (Kontson et al., 2015). This unique dataset will give researchers the opportunity to further investigate the brain responses of free-behaving individuals in complex natural settings.

Corresponding author: Contreras-Vidal, Jose L. (jlcontreras-vidal@uh.edu)

Relevant Literature

The available data sets are accompanied by the following publications:

- Cruz-Garza JG, Brantley JA, Nakagome S, Kontson K, Megjhani M, Robleto D, Contreras-Vidal JL. Deployment of Mobile EEG Technology in an Art Museum Setting: Evaluation of Signal Quality and Usability. Front Hum Neurosci. 2017 Nov 10;11:527. doi: 10.3389/fnhum.2017.00527. PubMed PMID: 29176943; PubMed Central PMCID: PMC5686057.

- Kontson KL, Megjhani M, Brantley JA, Cruz-Garza JG, Nakagome S, Robleto D, White M, Civillico E, Contreras-Vidal JL. Your Brain on Art: Emergent Cortical Dynamics During Aesthetic Experiences. Front Hum Neurosci. 2015 Nov 18;9:626. doi: 10.3389/fnhum.2015.00626. Erratum in: Front Hum Neurosci. 2015;9:684. PubMed PMID: 26635579; PubMed Central PMCID: PMC4649259.

These publications are the primary resource for information on the methods of data curation, the participants, the experimental setup and tasks, and each of the devices used in the study. They describe the devices and the types of artifacts characteristic of each, discuss the usability and limitations of each system in a mobile setting, and evaluate the distinct patterns in the spectral content recorded by each device.

Experiment Information

The experiments and the anonymous informed consent procedure were approved by the University of Houston’s Institutional Review Board. Adults provided verbal informed consent; for children, both the child and a parent or guardian were required to provide verbal informed consent. The data were collected during weekly experiments held at The Menil Collection in Houston, TX over the course of 22 weeks. Museum visitors were invited to participate by donning an EEG cap and exploring Dario Robleto’s installation, The Boundary of Life is Quietly Crossed. A total of 431 museum visitors participated in the experiments (208 males/223 females; age range 6-81 years). Among these, 134 (77 males/53 females; age range 15-77 years) had reliable EEG with appropriate tracking information for segmenting the data by art piece. The methods for tracking and annotating the subjects’ data during the experiment are described in detail in Cruz-Garza et al. (2017).

Data Summary

The EEG data are in .MAT format and are archived in folders for each of the headsets used. There is a main folder with five subfolders with the following titles: BPD, BPG, M4S, M32, and SS. Each folder contains all the files for that device. All EEG files are named with the following naming convention:

(headset)(session #)_(subject #)_(art piece).mat,

where (headset) is the headset name (BPD, BPG, M4S, M32A, M32B, M32D, or SS), (session #) is the session number (1, 2, 3, …, 22), (subject #) is the subject number for that session (1, 2, …), and (art piece) is the name of the art piece being viewed. The art pieces are titled base, p1, p2, p4, p5, p6, and p8, where base corresponds to the seated baseline trials and the others correspond to each of the pieces (shown in the included image ExperimentLayout.tif and in the relevant literature). For the M32 files, the headset name is one of M32A, M32B, or M32D.
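As an illustration of the naming convention above, the following Python sketch splits a file name into its four parts. It is only a sketch under assumptions: the example file names are hypothetical, and details the description does not state (such as zero-padding of numbers or the letter case of the extension) may differ in the actual archive. `Recording` and `parse_filename` are names introduced here for illustration.

```python
import re
from typing import NamedTuple, Optional

# Headset prefixes and art-piece labels as listed in the description.
# Assumption: numbers are plain digits and the extension is lowercase ".mat".
FILENAME_RE = re.compile(
    r"^(?P<headset>BPD|BPG|M4S|M32[ABD]|SS)"
    r"(?P<session>\d+)_(?P<subject>\d+)_(?P<piece>base|p[124568])\.mat$"
)


class Recording(NamedTuple):
    headset: str
    session: int
    subject: int
    piece: str


def parse_filename(name: str) -> Optional[Recording]:
    """Return the parts of a dataset file name, or None if it does not match."""
    m = FILENAME_RE.match(name)
    if m is None:
        return None
    return Recording(m["headset"], int(m["session"]), int(m["subject"]), m["piece"])


# Hypothetical file names, used only to exercise the parser.
for example in ["M32A12_3_p4.mat", "SS1_2_base.mat", "notes.txt"]:
    print(example, "->", parse_filename(example))
```

Grouping the parsed results by (headset, session, subject) collects the baseline and per-piece files that belong to one participant.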

Each of the EEG files contains a structure (variable name: EEG) with the following fields:

- EEG.data: M×N matrix containing the raw EEG data. The rows are the M channels corresponding to the channel locations and the columns are the N sample points

- EEG.srate: The sampling rate of the system given in samples/second (Hz)

- EEG.chanlocs: M-element structure array in which each element holds the location information for one of the M channels

- EEG.age: Age of the subject in years

- EEG.gender: Gender of subject (M: male; F: female)

- EEG.race: Self-reported race based on the definitions provided by the U.S. Census Bureau

- EEG.ethnicity: Self-reported ethnicity based on the definitions provided by the U.S. Census Bureau

The BPG files contain an additional field with electrooculography (EOG) data:

- EEG.eogdata: 4×N matrix containing the raw EOG data, where the rows correspond to the electrodes in the following locations: (1) left temple, (2) right temple, (3) above the right eye, and (4) below the right eye
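The fields listed above can be read outside MATLAB as well. The sketch below is one possible way to do so in Python with SciPy's `loadmat`; it assumes the files are stored in a MAT version that `loadmat` can read (it does not read v7.3 files). The helper names `load_eeg` and `summarize` are introduced here for illustration, and the stand-in object uses made-up values so the example runs without the data files.

```python
from types import SimpleNamespace


def load_eeg(path):
    """Return the `EEG` struct stored in one dataset file (requires SciPy)."""
    # Imported lazily so summarize() below stays usable without SciPy.
    from scipy.io import loadmat

    # struct_as_record=False exposes struct fields as attributes
    # (eeg.data, eeg.srate, ...); squeeze_me drops singleton dimensions.
    return loadmat(path, squeeze_me=True, struct_as_record=False)["EEG"]


def summarize(eeg):
    """Collect basic facts about one recording from the documented fields."""
    n_channels, n_samples = eeg.data.shape  # rows = channels, columns = samples
    return {
        "n_channels": n_channels,
        "n_samples": n_samples,
        "duration_s": n_samples / float(eeg.srate),
        "has_eog": hasattr(eeg, "eogdata"),  # documented for BPG files only
    }


# Stand-in with made-up values (not taken from the dataset).
fake = SimpleNamespace(data=SimpleNamespace(shape=(32, 5000)), srate=500)
print(summarize(fake))
```

With a real file, `summarize(load_eeg(path))` would report the same quantities; the demographic fields (age, gender, race, ethnicity) can be read from the returned object in the same attribute style.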

The complete specifications for each of the five headsets are provided in the included TableOfSpecifications.pdf and shown in Table 1 of Cruz-Garza et al., 2017. Finally, the main folder includes a spreadsheet containing the demographic data and the questionnaire responses (the questionnaire itself is included as a .pdf).

Funding

This work was partially supported by a cross-cutting seed grant from the Cullen College of Engineering at the University of Houston and the National Science Foundation Award NCS-FO 1533691.

Acknowledgements

The authors would like to thank all the members from the Laboratory for Non-Invasive Brain Machine Interfaces at the University of Houston for their assistance in the acquisition of data at the Menil Collection. The authors would also like to thank Sohan Gadkari and Dakota Grusak for their assistance in the data analysis, and Curator Michelle White for facilitating the study at the Menil Collection museum.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The EEG systems were loaned at no cost to the University of Houston for the duration of the experiment. The respective companies were contacted to ensure the correct operation of the headsets. Brain Vision LLC (Morrisville, NC) recently joined as an in-kind member of the IUCRC BRAIN University of Houston Site (PI Contreras-Vidal).

Disclaimer

The mention of commercial products, their sources, or their use in connection with material reported herein is not to be construed as an actual or implied endorsement of such products by the Department of Health and Human Services.
