Multimodal

Crowdfunding campaigns frequently fail to reach their funding goals, posing a significant challenge for project creators. To address this issue and empower future crowdfunding stakeholders, accurate prediction models are essential. This study evaluates the relative significance of diverse modalities (visual, audio, and text) in predicting campaign success.


433 Views

This research introduces the Open Seizure Database and Toolkit, a novel, publicly accessible resource designed to advance non-electroencephalogram seizure detection research. It highlights the scarcity of resources in the non-electroencephalogram domain and establishes the Open Seizure Database as the first openly accessible database containing multimodal sensor data from 49 participants in real-world, in-home environments.


1800 Views

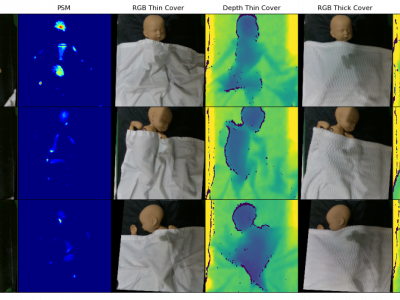

The Simultaneously-collected multimodal Mannequin Lying pose (SMaL) dataset is an infant pose dataset based on a posable mannequin. It contains 300 unique poses under three cover conditions, captured with three sensor modalities: color imaging, depth sensing, and pressure sensing. It represents the first multimodal dataset for infant pose estimation and the first dataset to explore under-the-cover pose estimation for infants.


561 Views
