Simultaneously-collected Multimodal Mannequin Lying Pose (SMaL)

- Citation Author(s):

- Submitted by:

- Daniel Kyrollos

- Last updated:

- DOI:

- 10.21227/73ne-y825

- Data Format:

563 views

563 views

- Categories:

- Keywords:

Abstract

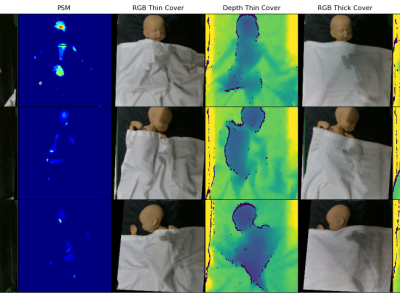

Simultaneously-collected multimodal Mannequin Lying pose (SMaL) dataset is a infant pose dataset based on a posable mannequin. The SMaL dataset contains a set of 300 unique poses under three cover conditions using three sensor modalities: color imaging, depth sensing, and pressure sensing. It represents the first multimodal dataset for infant pose estimation and the first dataset to explore under the cover pose estimation for infants.

Instructions:

The dataset is split into five folds of 60 poses. Three folds were used for training, one as validation and one for testing.

For each training and testing round, there is a folder containing the relevant train, validation and testing images.

Each folder contains 5 files:

1) uncovered.npy, cover1.npy,cover2.npy: These are numpy arrays with shape n x 224 x 224 x 2. The first dimension represents the number of pose samples. The last dimension is the channels with depth being the first and pressure being the second. The images are 224 x 224 in size. Each file contains the corresponding cover condition, with cover1 being the thin cover and cover2 being the thick.

2) joints.npy: This is a numpy array with shape n x 14 x 2. The first dimension represents the number of pose samples. The second represents the joint in this order:

"R_Ankle", "R_Knee", "R_Hip", "L_Hip", "L_Knee", "L_Ankle", "R_Wrist", "R_Elbow", "R_Shoulder", "L_Shoulder","L_Elbow", "L_Wrist", "Thorax", "Head"

The last dimension is for the x,y coordinate. These are normalized locations from 0-1.

3) labels.npy: this is a numpy array with shape n x 1. This is the label for each pose type (supine, left side, right side)