Standard Dataset

StairNet: A Computer Vision Dataset for Stair Recognition

- Submitted by: Brokoslaw Laschowski
- Last updated: Fri, 11/29/2024 - 06:51
- DOI: 10.21227/12jm-e336
- Views: 3141

Abstract

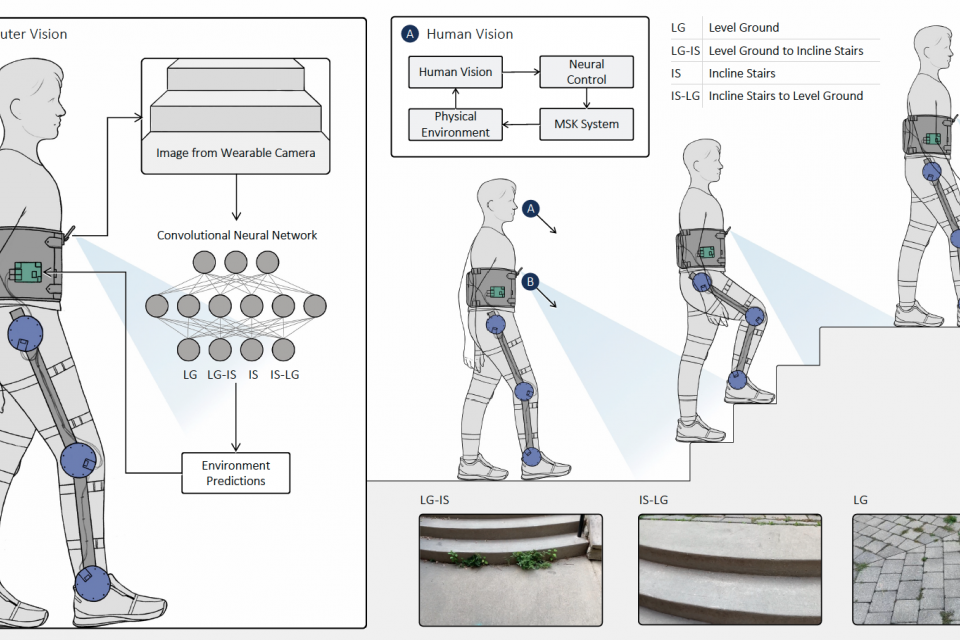

Vision supports transitions between locomotor controllers (e.g., from level-ground walking to stair ascent) by sensing the environment before physical interaction. We developed StairNet to support the development and comparison of deep learning models for visual recognition of stairs. The dataset builds on ExoNet, the largest open-source dataset of egocentric images of real-world walking environments. StairNet contains ~515,000 labelled images from six of the twelve original ExoNet classes. Images were reclassified using new class definitions to make the cutoff points between classes more accurate. The dataset was manually parsed several times during annotation to reduce misclassification errors and to remove images with large obstructions. StairNet can support the development of next-generation deep learning models for visual perception and environment-adaptive control.
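Because the abstract describes labelled images grouped into classes, a first step before comparing models is often to check how many images fall into each class. The following is a minimal sketch of that check, not the authors' tooling. It assumes a hypothetical one-folder-per-class layout (`<root>/<class_name>/<image file>`); the actual StairNet layout is described in the ReadMe file and may differ. The function names and the list of image suffixes are illustrative assumptions.

```python
from collections import Counter
from pathlib import Path

# Assumption: images are stored as <root>/<class_name>/<image file>.
# The real StairNet layout is documented in the dataset's ReadMe and may differ.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images_per_class(root):
    """Return a Counter mapping each class folder name to its image count."""
    counts = Counter()
    for class_dir in sorted(Path(root).iterdir()):
        if not class_dir.is_dir():
            continue  # skip loose files such as a ReadMe
        counts[class_dir.name] = sum(
            1
            for path in class_dir.rglob("*")
            if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


def class_fractions(counts):
    """Convert raw counts to each class's fraction of all images."""
    total = sum(counts.values())
    if total == 0:
        return {}
    return {name: n / total for name, n in counts.items()}
```

Calling `class_fractions(count_images_per_class("path/to/dataset"))` (a placeholder path) gives a quick view of class balance, which matters when choosing evaluation metrics or sampling strategies for an imbalanced image dataset.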

References:

1. Kuzmenko D, Tsepa O, Kurbis AG, Mihailidis A, and Laschowski B. (2023). Efficient visual perception of human-robot walking environments using semi-supervised learning. IEEE International Conference on Intelligent Robots and Systems (IROS). DOI: 10.1109/IROS55552.2023.10341654.

2. Kurbis AG, Mihailidis A, and Laschowski B. (2024). Development and mobile deployment of a stair recognition system for human-robot locomotion. IEEE Transactions on Medical Robotics and Bionics. DOI: 10.1109/TMRB.2024.3349602.

3. Ivanyuk-Skulskiy B, Kurbis AG, Mihailidis A, and Laschowski B. (2024). Sequential image classification of human-robot walking environments using temporal neural networks. IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob). DOI: 10.1109/BioRob60516.2024.10719798.

Note: Details are provided in the ReadMe file. The TFRecords format is available upon request. Email Dr. Brokoslaw Laschowski (brokoslaw.laschowski@utoronto.ca) with additional questions or for technical assistance.

Documentation

| Attachment | Size |
|---|---|
| | 280.58 KB |
