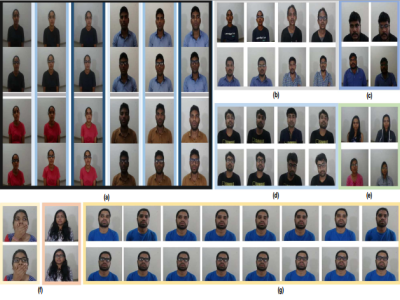

A human video database for facial feature detection under spectacles with varying alertness levels

- Submitted by: lazarus mayaluri

- DOI: 10.21227/p3mw-q429

871 views

Abstract

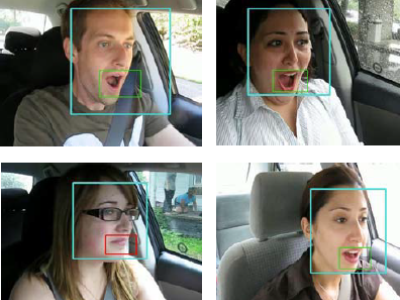

Heavy workloads, combined with social media interaction, lead to diminished alertness during work hours. Researchers have attempted to measure alertness level from various cues such as EEG, EOG, and video-based eye movement analysis. Among these, video-based eyelid and iris motion tracking has gained much attention in recent years. However, most of these implementations are tested on video data of subjects without spectacles, and such videos do not pose a challenge for eye detection and tracking. In this work, we designed an experiment to yield a video database of 58 human subjects who wear spectacles and are at different levels of alertness. Along with spectacles, we introduced variation in session, recording frame rate (fps), illumination, and time of the experiment. We analyzed how reliably facial and ocular features such as yawning and eyeblinks indicate the alertness level. We also observe the influence of spectacles on ocular feature detection performance and propose a simple preprocessing step to alleviate the specular reflection problem. Extensive experiments on real-world images demonstrate that our approach achieves desirable reflection suppression results in less execution time than state-of-the-art methods.

Instructions:

Information on meta-data

This metadata folder includes 5 subfolders:

1. Blink

2. Headpose detection

3. Landmark detection

4. TASK 1 and 2 stimuli

5. Video data

1. Blink: This subfolder includes the ground truth (manual) blink count values for videos of 25 subjects under different illumination, alertness level, and spectacles conditions, recorded at 30 frames per second (FPS).

2. Headpose detection: This subfolder includes the translation and rotation vectors for each frame of 120 videos. The videos are selected from the videos of 10 subjects and include all variants.

The code used to calculate the vectors is adopted from the GitHub repository of Y. Guobing. It can be accessed via the following link:

https://github.com/yinguobing/head-pose-estimation (accessed on 6th April 2018)

3. Landmark detection: This subfolder includes 5 manually annotated landmark points (the left and right corners of both eyes and the center point of the right iris) for approximately 800 frames. It also includes landmark points detected using dlib's facial landmark detector (available at: A. Rosebrock, https://www.pyimagesearch.com/2018/04/02/faster-facial-landmark-detector-with-dlib/#comment-455221, accessed on 6th April 2018) and a CNN-based facial landmark detector (available at: Y. Guobing, https://github.com/yinguobing/cnn-facial-landmark, accessed on 6th April 2018).

4. TASK 1 and 2 stimuli: This subfolder includes the PowerPoint presentation used for task 1 and the MATLAB GUI used for task 2, along with documentation on how to use it.

5. Video data: This subfolder includes video clips of 27 subjects with all the variants considered in database creation.

Metadata nomenclature format: "SXX_L_xxxF_I_S"

where SXX = Subject ID Number: S01-S58

L = Alert condition ='A', Drowsy condition ='D'

xxxF = FPS: 030, 060, 120

I = Illumination level: High illumination='H', Medium illumination='M', Low illumination='L'

S = Spectacles: Without spectacles='0', With spectacles='1'
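As an illustration of the nomenclature above, the following minimal Python sketch splits a name of the form "SXX_L_xxxF_I_S" into its fields. It is not part of the database. The function name `parse_clip_name`, the `ClipInfo` type, and the example names are hypothetical, and the sketch assumes that the letter "F" may appear literally after the three FPS digits (it accepts names with or without it) and that any file extension can be ignored.

```python
import re
from pathlib import PurePath
from typing import NamedTuple

# Pattern for "SXX_L_xxxF_I_S". The literal "F" after the FPS digits is an
# assumption about how the format is written in practice, so it is optional.
_NAME_RE = re.compile(
    r"S(?P<subject>\d{2})_(?P<state>[AD])_(?P<fps>030|060|120)F?"
    r"_(?P<illum>[HML])_(?P<spec>[01])"
)

_STATES = {"A": "alert", "D": "drowsy"}
_ILLUMINATION = {"H": "high", "M": "medium", "L": "low"}


class ClipInfo(NamedTuple):
    """Fields decoded from one metadata name (hypothetical helper type)."""
    subject_id: int      # 1..58
    alertness: str       # "alert" or "drowsy"
    fps: int             # 30, 60 or 120
    illumination: str    # "high", "medium" or "low"
    spectacles: bool     # True if recorded with spectacles


def parse_clip_name(name: str) -> ClipInfo:
    """Decode a name such as 'S07_D_030F_M_1' (an extension is ignored)."""
    stem = PurePath(name).stem
    match = _NAME_RE.fullmatch(stem)
    if match is None:
        raise ValueError(f"name does not follow SXX_L_xxxF_I_S: {name!r}")
    subject = int(match["subject"])
    if not 1 <= subject <= 58:
        raise ValueError(f"subject ID out of range S01-S58: {name!r}")
    return ClipInfo(
        subject_id=subject,
        alertness=_STATES[match["state"]],
        fps=int(match["fps"]),
        illumination=_ILLUMINATION[match["illum"]],
        spectacles=match["spec"] == "1",
    )


if __name__ == "__main__":
    # Example name is made up for illustration only.
    print(parse_clip_name("S07_D_030F_M_1"))
```

Returning a named tuple keeps the decoded fields easy to filter on, for example to select only the 30 FPS clips recorded with spectacles.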

Database is available to interested parties upon request to the authors.

Sample videos can be downloaded from this Google Drive link:

https://drive.google.com/file/d/14euhp7fn7dMbBeiErellJA71lU2HnTzA/view?usp=sharing