Data Fusion Contest 2019 (DFC2019)

- Submitted by: Bertrand Le Saux

- DOI: 10.21227/c6tm-vw12

Abstract

The Contest: Goals and Organisation

The 2019 Data Fusion Contest, organized by the Image Analysis and Data Fusion Technical Committee (IADF TC) of the IEEE Geoscience and Remote Sensing Society (GRSS), the Johns Hopkins University (JHU), and the Intelligence Advanced Research Projects Activity (IARPA), aimed to promote research in semantic 3D reconstruction and stereo using machine intelligence and deep learning applied to satellite images.

The global objective was to reconstruct both a 3D geometric model and a segmentation of semantic classes for an urban scene. Incidental satellite images, airborne lidar data, and semantic labels were provided to the community. The 2019 Data Fusion Contest consisted of four parallel and independent competitions, corresponding to four diverse tasks:

- Track 1: Single-view Semantic 3D Challenge

- Track 2: Pairwise Semantic Stereo Challenge

- Track 3: Multi-view Semantic Stereo Challenge

- Track 4: 3D Point Cloud Classification Challenge

The Data

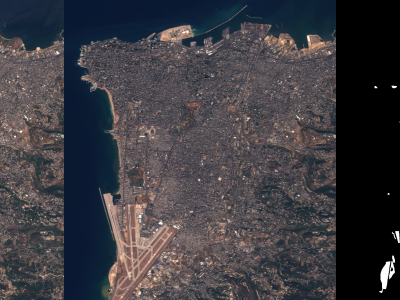

In the contest, we provide Urban Semantic 3D (US3D) data, a large-scale public dataset including multi-view, multi-band satellite images and ground truth geometric and semantic labels for two large cities [1]. The US3D dataset includes incidental satellite images, airborne lidar, and semantic labels covering approximately 100 square kilometers over Jacksonville, Florida and Omaha, Nebraska, United States. For the contest, we provide train and test datasets for each challenge track including approximately twenty percent of the US3D data.

- Multidate Satellite Images: For the contest, WorldView-3 panchromatic and 8-band visible and near infrared (VNIR) images are provided courtesy of DigitalGlobe. Source data consists of 26 images collected between 2014 and 2016 over Jacksonville, Florida, and 43 images collected between 2014 and 2015 over Omaha, Nebraska, United States. Ground sampling distance (GSD) is approximately 35 cm and 1.3 m for panchromatic and VNIR images, respectively. VNIR images are all pan-sharpened. Satellite images are provided in geographically non-overlapping tiles, where airborne lidar data and semantic labels are projected into the same plane. Unrectified images (for Tracks 1 and 3) and epipolar rectified image pairs (for Track 2) are provided as TIFF files.

- Airborne LiDAR data are used to provide ground-truth geometry. The aggregate nominal pulse spacing (ANPS) is approximately 80 cm. Point clouds are provided in ASCII text files with format {x, y, z, intensity, return number} for Track 4. Training data derived from lidar includes ground truth above ground level (AGL) height images for Track 1, pairwise disparity images for Track 2, and digital surface models (DSM) for Track 3, all provided as TIFF files.

- Semantic labels are provided as TIFF files for each geographic tile in Tracks 1-3 and ASCII text files in Track 4. Semantic classes in the contest include buildings, elevated roads and bridges, high vegetation, ground, water, etc.
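The Track 4 point cloud layout described above can be read with a few lines of Python. This is a minimal sketch, assuming comma-separated fields in the order {x, y, z, intensity, return number} with one point per line; the delimiter, the `LidarPoint` type, and the `parse_point_cloud` helper are illustrative assumptions and not part of the contest toolkit.

```python
from typing import List, NamedTuple


class LidarPoint(NamedTuple):
    """One lidar return (hypothetical container, field order from the text)."""
    x: float
    y: float
    z: float
    intensity: float
    return_number: int


def parse_point_cloud(text: str, delimiter: str = ",") -> List[LidarPoint]:
    """Parse ASCII point records, one point per line (assumed layout)."""
    points = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        x, y, z, intensity, ret = line.split(delimiter)
        points.append(
            LidarPoint(float(x), float(y), float(z), float(intensity), int(float(ret)))
        )
    return points


# Two made-up points, only to show the call.
sample = "10.0,20.0,3.5,120,1\n10.8,20.1,9.2,87,2\n"
cloud = parse_point_cloud(sample)
```

If the actual files use whitespace or another separator, passing a different `delimiter` should be enough.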

We provide all the above datasets for the training regions only. For the validation and test regions, only satellite images are provided in Tracks 1-3 and only lidar point clouds are provided in Track 4. The ground truth for the validation and test sets remains undisclosed and will be used for evaluation of the results. The training and test sets for the contest include dozens of images for each geographic 500m x 500m tile: 111 tiles for the training set; 10 tiles for the validation set; 10 tiles for the test set.

Challenge tracks

Track 1: Single-view semantic 3D

For each geographic tile, an unrectified single-view image is provided. The objective is to predict semantic labels and normalized DSM (nDSM) above-ground heights. Participants in Track 1 are expected to submit 2D semantic maps and AGL maps in raster format (similar to the TIFF files of the training set). Performance is assessed using the pixel-wise mean Intersection over Union (mIoU), for which true positives must have both the correct semantic label and a height error of less than 1 meter. We call this metric mIoU-3.
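The mIoU-3 definition can be made concrete with a small pure-Python sketch. It is an illustration under stated assumptions, not the official evaluation code: the function name `miou3` is hypothetical, inputs are flattened per-pixel sequences, a pixel with the right label but too large an error stays in the union without counting as a true positive, and classes absent from both prediction and ground truth are skipped.

```python
from typing import Iterable, Sequence


def miou3(
    pred_labels: Sequence[int],
    true_labels: Sequence[int],
    pred_values: Sequence[float],
    true_values: Sequence[float],
    threshold: float,
    classes: Iterable[int],
) -> float:
    """Mean IoU where a true positive needs the correct label AND
    |predicted value - reference value| < threshold (assumed reading)."""
    ious = []
    for c in classes:
        tp = 0
        union = 0
        for pl, tl, pv, tv in zip(pred_labels, true_labels, pred_values, true_values):
            if pl == c or tl == c:
                union += 1
                if pl == c and tl == c and abs(pv - tv) < threshold:
                    tp += 1
        if union:  # ignore classes that appear nowhere
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else float("nan")


# Four made-up pixels with two classes and heights in meters.
pred_labels = [0, 0, 1, 1]
true_labels = [0, 1, 1, 1]
pred_heights = [0.0, 0.0, 5.0, 5.0]
true_heights = [0.5, 0.0, 5.5, 7.0]
score = miou3(pred_labels, true_labels, pred_heights, true_heights, 1.0, [0, 1])
```

Here class 0 scores 1/2 and class 1 scores 1/3 (its last pixel has the right label but a 2 m height error), so `score` is 5/12.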

https://competitions.codalab.org/competitions/20208

Track 2: Pairwise semantic stereo

For each geographic tile, a pair of epipolar rectified images is given. The objective is to predict semantic labels and stereo disparities. Participants in Track 2 are expected to submit 2D semantic maps and disparity maps in raster format (similar to the TIFF files of the training set). Performance is assessed using mIoU-3 with a threshold of 3 pixels for disparity values.

https://competitions.codalab.org/competitions/20212

Track 3: Multi-view semantic stereo

Given multi-view images for each geographic tile, the objective is to predict semantic labels and a DSM. Unrectified images are provided with RPC metadata already adjusted using the lidar, so that registration is not required in evaluation and solutions can focus on methods for image selection, correspondence, semantic labeling, and multi-view fusion. Since this track relies on RPC metadata, which may not be familiar to everyone, the baseline algorithm includes simple Python code to manipulate RPC for epipolar rectification and triangulation. Participants in Track 3 are expected to submit 2D semantic maps and DSMs in raster format (similar to the TIFF files of the training set). Performance is assessed using mIoU-3 with a threshold of 1 meter for the DSM Z values.

https://competitions.codalab.org/competitions/20216

Track 4: 3D point cloud classification

For each geographic tile, lidar point cloud data is provided. The objective is to predict a semantic label for each 3D point. Participants in Track 4 are expected to submit 3D semantic predictions in ASCII text files (similar to the text files of the training set). Performance is assessed using mIoU.
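For per-point labels, plain mIoU reduces to counting agreements per class. The sketch below is one possible reading rather than the official scorer: `mean_iou` is a hypothetical helper, the integer class codes are made up, and the mean is taken over classes that occur in either the prediction or the reference (an official evaluation may instead average over a fixed class list).

```python
from collections import Counter
from typing import Sequence


def mean_iou(pred: Sequence[int], true: Sequence[int]) -> float:
    """Mean over classes of |pred ∩ true| / |pred ∪ true| for point labels."""
    pred_count = Counter(pred)
    true_count = Counter(true)
    # Intersection: points where prediction and reference agree.
    inter = Counter(p for p, t in zip(pred, true) if p == t)
    classes = set(pred_count) | set(true_count)
    if not classes:
        return float("nan")
    ious = []
    for c in classes:
        union = pred_count[c] + true_count[c] - inter[c]
        ious.append(inter[c] / union)
    return sum(ious) / len(ious)


# Five made-up points with three arbitrary class codes.
pred_points = [2, 2, 5, 6, 6]
true_points = [2, 5, 5, 6, 2]
point_score = mean_iou(pred_points, true_points)
```

The three classes score 1/3, 1/2, and 1/2, so `point_score` is 4/9.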

https://competitions.codalab.org/competitions/20217

Baseline methods

Baseline solutions are provided for each challenge track to help participants get started quickly and better understand the data and its intended use. Deep learning models are provided for image semantic segmentation (Tracks 1, 2, and 3), point cloud semantic segmentation (Track 4), single-image height prediction (Track 1), and pairwise stereo disparity estimation (Tracks 2 and 3). Each of these was implemented in Keras with TensorFlow. The models, the Python code to train them, and the Python code for inference are provided. A baseline semantic MVS solution (for Track 3) implemented in Python is also provided to demonstrate the use of RPC metadata for basic tasks such as epipolar rectification and triangulation.

https://github.com/pubgeo/dfc2019

Acknowledgements

The IADF TC chairs would like to thank IARPA and the Johns Hopkins University Applied Physics Laboratory for providing the data and the IEEE GRSS for continuously supporting the annual Data Fusion Contest through funding and resources.

Contest Terms and Conditions

The data are provided for the purpose of participation in the 2019 Data Fusion Contest and remain available for further research efforts provided that subsequent terms of use are respected. Participants acknowledge that they have read and agree to the following Contest Terms and Conditions:

- The owners of the data and of the copyright on the data are DigitalGlobe, IARPA and Johns Hopkins University.

- Any dissemination or distribution of the data packages by any registered user is strictly forbidden.

- The data can be used in scientific publications subject to approval by the IEEE GRSS Image Analysis and Data Fusion Technical Committee and by the data owners on a case-by-case basis. To submit a scientific publication for approval, the publication shall be sent as an attachment to an e-mail addressed to iadf_chairs@grss-ieee.org.

- In any scientific publication using the data, the data shall be identified as “grss_dfc_2019” and shall be referenced as follows: “[REF. NO.] 2019 IEEE GRSS Data Fusion Contest. Online: http://www.grss-ieee.org/community/technical-committees/data-fusion”.

- Any scientific publication using the data shall include a section “Acknowledgement”. This section shall include the following sentence: “The authors would like to thank the Johns Hopkins University Applied Physics Laboratory and IARPA for providing the data used in this study, and the IEEE GRSS Image Analysis and Data Fusion Technical Committee for organizing the Data Fusion Contest.”

- Any scientific publication using the data shall refer to the following papers:

- [Bosch et al., 2019] Bosch, M. ; Foster, G. ; Christie, G. ; Wang, S. ; Hager, G.D. ; Brown, M. : Semantic Stereo for Incidental Satellite Images. Proc. of Winter Conf. on Applications of Computer Vision, 2019.

- [Le Saux et al., 2019] Le Saux, B. ; Yokoya, N. ; Hänsch, R. ; Brown, M. ; Hager, G.D. ; Kim, H. : 2019 Data Fusion Contest [Technical Committees], IEEE Geoscience and Remote Sensing Magazine, 7 (1), March 2019

Instructions:

- Participants in the benchmark are expected to submit:

- 2D semantic maps and nDSM/disparity/DSM maps in raster format (similar to the tif file of the training set) for Tracks 1, 2, and 3

- 3D semantic predictions in ASCII text files (similar to the text file of the training set) for Track 4

Results are submitted to the Codalab competition website of the corresponding track for evaluation.

- Ranking among the participants will be based on:

- mIoU-3 for Tracks 1, 2, and 3

- mIoU for Track 4

Dataset Files

- Track 4 / Validation data / Reference (Size: 930.68 KB)

- Track 4 / Validation data (Size: 64.52 MB)

- Track 4 / Training data / Reference (Size: 8.16 MB)

- Track 4 / Training data (Size: 600.81 MB)

- Track 4 / Test data (Size: 63.13 MB)

- Track 3 / Metadata (Size: 138.5 KB)

- Track 3 / Training data / Multispectral images 1/10 (Size: 4.89 GB)

- Track 3 / Training data / Multispectral images 2/10 (Size: 11.43 GB)

- Track 3 / Training data / Multispectral images 3/10 (Size: 9.8 GB)

- Track 3 / Training data / Multispectral images 4/10 (Size: 5.2 GB)

- Track 3 / Training data / Multispectral images 5/10 (Size: 10.4 GB)

- Track 3 / Training data / Multispectral images 6/10 (Size: 3.63 GB)

- Track 3 / Training data / Multispectral images 7/10 (Size: 9.76 GB)

- Track 3 / Training data / Multispectral images 8/10 (Size: 10 GB)

- Track 3 / Training data / Multispectral images 9/10 (Size: 8.69 GB)

- Track 3 / Training data / Multispectral images 10/10 (Size: 9.03 GB)

- Track 3 / Training data / RGB images 1/2 (Size: 7.6 GB)

- Track 3 / Training data / RGB images 2/2 (Size: 12.49 GB)

- Track 3 / Training data / Reference (Size: 37.46 MB)

- Track 3 / Validation data (Size: 11.22 GB)

- Track 2 / Training data / Multispectral images 1/11 (Size: 4.17 GB)

- Track 2 / Training data / Multispectral images 2/11 (Size: 12.07 GB)

- Track 2 / Training data / Multispectral images 3/11 (Size: 10.23 GB)

- Track 2 / Training data / Multispectral images 4/11 (Size: 10.54 GB)

- Track 2 / Training data / Multispectral images 5/11 (Size: 12.17 GB)

- Track 2 / Training data / Multispectral images 6/11 (Size: 7.34 GB)

- Track 2 / Training data / Multispectral images 7/11 (Size: 7.08 GB)

- Track 2 / Training data / Multispectral images 8/11 (Size: 7.14 GB)

- Track 2 / Training data / Multispectral images 9/11 (Size: 7.64 GB)

- Track 2 / Training data / Multispectral images 10/11 (Size: 6.37 GB)

- Track 2 / Training data / Multispectral images 11/11 (Size: 9.18 GB)

- Track 2 / Training data / RGB images 1/4 (Size: 12.52 GB)

- Track 2 / Training data / RGB images 2/4 (Size: 3.42 GB)

- Track 2 / Training data / RGB images 3/4 (Size: 10.4 GB)

- Track 2 / Training data / RGB images 4/4 (Size: 4.98 GB)

- Track 2 / Training data / Reference (Size: 1.68 GB)

- Track 2 / Validation data (Size: 1.34 GB)

- Track 1 / Training data / Multispectral images 1/3 (Size: 11.02 GB)

- Track 1 / Training data / Multispectral images 2/3 (Size: 12.39 GB)

- Track 1 / Training data / Multispectral images 3/3 (Size: 6.5 GB)

- Track 1 / Training data / RGB images 1/1 (Size: 6.59 GB)

- Track 1 / Validation data (Size: 682.76 MB)

- Track 1 / Training data / Reference (Size: 8.17 GB)