Open Access Entries from this Author

Human activity recognition, the task of recognizing human activities from sensor data, has attracted considerable interest from researchers and practitioners with the advent of smart homes, smart cities, and other smart systems. Existing studies on activity recognition mostly focus on coarse-grained activities such as walking and jumping, while fine-grained activities such as eating and drinking remain understudied because they are more difficult to recognize.


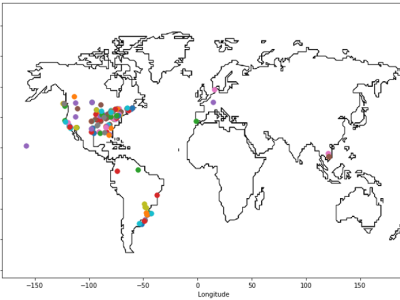

This is a dataset of client-server round-trip time (RTT) delays collected during an actual cloud gaming tournament run on the infrastructure of the cloud gaming company Swarmio Inc. The dataset can be used to design algorithms and tune models for user-server allocation and server selection. To collect the dataset, tournament players were connected to Swarmio servers, and delay measurements were taken in real time under real-world network conditions.


EEG recordings of individuals with and without ADHD, captured with an EMOTIV EEG headset while they played a brain-controlled game. The dataset can be used to design and test methods for detecting individuals with ADHD.


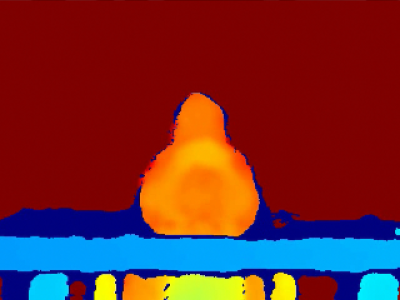

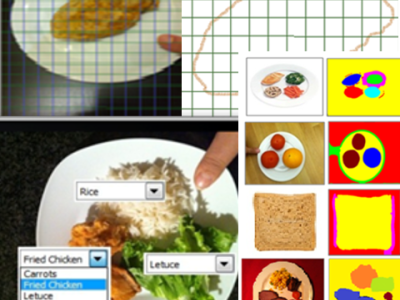

Images of various foods, taken with different cameras under different lighting conditions. The images can be used to design and test computer vision techniques that recognize foods and estimate their calorie and nutrient content.


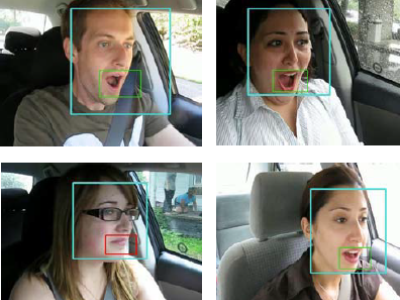

A dataset of videos, recorded by an in-car camera in an actual car, of drivers with various facial characteristics (male and female, with and without glasses or sunglasses, and of different ethnicities) while they talk, sing, remain silent, and yawn. It is intended primarily for developing and testing yawning-detection algorithms and models, but it can also be used for face and mouth recognition and tracking. The videos were recorded under natural, varying illumination conditions. The videos come in two sets, as described next:


This is an eye-tracking dataset of 84 computer game players who played the side-scrolling cloud game Somi. The game was streamed as video from the cloud to each player. The dataset consists of 135 raw videos (YUV) at 720p and 30 fps, with eye-tracking data for both eyes (left and right). Male and female players were asked to play the game in front of a remote eye-tracking device. For each player, we recorded gaze points, video frames of the gameplay, and mouse and keyboard commands.
