Artificial Intelligence

This dataset consists of meteorological and environmental data collected in Riyadh, Saudi Arabia, over multiple years. The variables include solar radiation, temperature (both maximum and minimum in Celsius and Fahrenheit), precipitation, vapor pressure, and snow water equivalent, among others. The data spans from 2010 to the present, providing insights into solar radiation patterns, daily temperature fluctuations, and weather-related factors that can impact solar power generation. Specifically, the dataset contains the following columns:

69 Views

This study explores the relationship between social media sentiment and stock market movements using a dataset of tweets related to various publicly traded companies. The dataset comprises time-stamped tweets containing company-specific information, stock ticker symbols, and company names. By leveraging natural language processing (NLP) techniques, we analyze the sentiment of tweets to determine their impact on stock price fluctuations. This research aims to develop predictive models that incorporate tweet sentiment and frequency as features to forecast stock price movements.

53 Views

Ensemble clustering, which integrates multiple base clusterings to enhance robustness and accuracy, is commonly evaluated on more than 10 benchmark datasets. These include 4 synthetic datasets (e.g., 3MC, atom, Tetra, and Flame) designed to test algorithms on nonlinear separability and density variations.
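As a rough illustration of what "integrating multiple base clusterings" can mean, the sketch below builds a co-association matrix and links points that share a cluster in most base clusterings. This is one common consensus strategy and is not necessarily the method evaluated on these datasets; the function names, the toy labelings, and the 0.5 threshold are all assumptions made for the example.

```python
from itertools import combinations


def co_association(base_labelings):
    """Fraction of base clusterings in which each pair of points shares a cluster."""
    n = len(base_labelings[0])
    m = len(base_labelings)
    counts = [[0] * n for _ in range(n)]
    for labels in base_labelings:
        for i in range(n):
            for j in range(n):
                if labels[i] == labels[j]:
                    counts[i][j] += 1
    return [[c / m for c in row] for row in counts]


def consensus_clusters(base_labelings, threshold=0.5):
    """Link pairs whose co-association exceeds the threshold (hypothetical default)
    and return the connected components as consensus labels."""
    matrix = co_association(base_labelings)
    n = len(matrix)
    parent = list(range(n))

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for i, j in combinations(range(n), 2):
        if matrix[i][j] > threshold:
            parent[find(i)] = find(j)

    # Relabel component roots as 0, 1, 2, ... in order of first appearance.
    roots = {}
    return [roots.setdefault(find(i), len(roots)) for i in range(n)]


# Toy input: three base clusterings of five points (made-up labels).
base = [
    [0, 0, 1, 1, 2],
    [1, 1, 0, 0, 0],
    [0, 0, 0, 1, 1],
]
print(consensus_clusters(base))  # [0, 0, 1, 1, 1]
```

The label values used by each base clustering do not need to agree with one another, since only pairwise co-membership is counted.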

14 Views

SNMDat2.0 is a comprehensive multimodal dataset for Twitter social bot detection, expanded from the unimodal TwiBot-20. Specifically, we add 274,587 profile images and profile background images, 86,498 tweet images, and 49,549 tweet videos to the original 229,580 Twitter users, 227,979 follow relationships, and 33,488,192 tweet texts.

16 Views

The painting style data set was constructed by searching for, selecting, and collecting publicly available paintings on the internet, using painting styles and artists' names as keywords. The data set contains 750 paintings in five styles: Cubism, Op Art, Color Field Painting, Post-Impressionism, and Rococo.

13 Views

Amid global climate change, rising atmospheric methane (CH4) concentrations significantly influence the climate system, contributing to temperature increases and atmospheric chemistry changes. Accurate monitoring of these concentrations is essential to support global methane emission reduction goals, such as those outlined in the Global Methane Pledge targeting a 30% reduction by 2030. Satellite remote sensing, offering high precision and extensive spatial coverage, has become a critical tool for measuring large-scale atmospheric methane concentrations.

18 Views

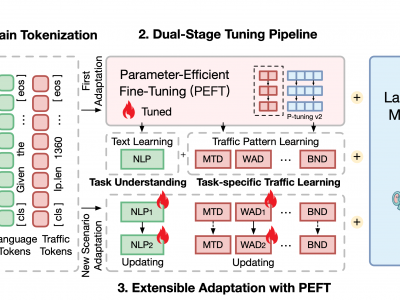

Computational experiments within metaverse service ecosystems enable the identification of social risks and governance crises, and the optimization of governance strategies through counterfactual inference, to dynamically guide real-world service ecosystem operations. The advent of Large Language Models (LLMs) has empowered LLM-based agents to function as autonomous service entities capable of executing diverse service operations within metaverse ecosystems, thereby facilitating the governance of metaverse service ecosystems through computational experiments.

18 Views

Laboratory experiments are fundamental to science education, yet resource constraints often limit students’ access to hands-on learning experiences. While object detection technology offers promising solutions for automated material identification and assistance, existing datasets like CABD (21 classes) and Chemical Experiment Image Dataset (7 classes) are limited in scope. We present two comprehensive datasets for laboratory material detection: a Chemistry dataset comprising 1,191 images across 60 classes and a Physics dataset containing 1,749 images across 76 classes.

21 Views

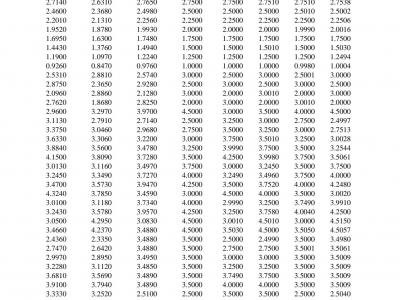

This is sample data from a switched-capacitor single-input multiple-output (SC-SIMO) converter, which can be used to train an artificial neural network (ANN) model. In the dataset, the current references IL3ref, IL2ref, IL1ref, and IL0ref are recorded and applied to the SC-SIMO converter, and the resulting inductor currents IL3, IL2, IL1, and IL0 are recorded. During ANN training, the recorded inductor currents are treated as the inductor current references, which form the four inputs of the ANN model.

193 Views