Mobile EEG recordings of musical (jazz) improvisation

- Submitted by: Mauricio Ramirez Moreno

- DOI: 10.21227/hx73-7159

Abstract

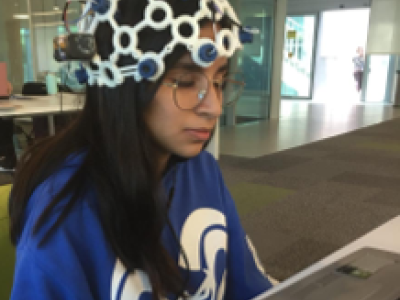

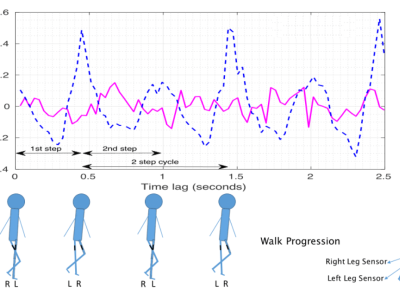

The use of modern Mobile Brain-Body Imaging techniques, combined with hyperscanning (the simultaneous and synchronized recording of the brain activity of multiple participants), has allowed researchers to explore a broad range of social interactions from a neuroengineering perspective. Specifically, this approach makes it possible to study such interactions in ecologically valid settings. Among these interactions, collaborative improvised musical creation offers an interesting window into the dynamics of cognitive processes shared toward a specific goal, and can provide insight into behaviors such as anticipation, competition/collaboration, and brain-to-brain communication. This database provides electroencephalography (EEG) data from three male professional musicians who performed collaborative jazz improvisation in front of a live audience in three 15-minute trials. In each trial, one musician performed alone for five minutes, after which a second musician joined; after five minutes of this two-musician improvisation, the third musician joined. The order in which the musicians joined the performance differed across trials. The database contains EEG data from 64 channels per musician, four of which are electrooculography (EOG) channels, impedance values measured before and after the complete performance, sound and video recordings of all performances, and annotations for synchronizing the sound/video recordings with the EEG data.
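The trial protocol above reduces to simple arithmetic on time offsets. The sketch below assumes that each phase lasts exactly five minutes counted from the start of a trial; in a live performance the actual entry times may differ, so boundaries should be checked against EEG.events and Performance Notes.xlsx. The function names are hypothetical, and the sampling rate is passed in as a parameter (the files store it in EEG.srate).

```python
# Hypothetical helpers for the trial protocol described in the abstract:
# one musician plays alone for 5 min, a second joins for the next 5 min,
# and the third joins for the final 5 min of each 15-minute trial.
PHASE_SECONDS = 5 * 60              # length of each phase (assumed exact)
TRIAL_SECONDS = 3 * PHASE_SECONDS   # 15-minute trial


def musicians_playing(t_seconds: float) -> int:
    """Return how many musicians are playing t_seconds after the trial start."""
    if not 0 <= t_seconds < TRIAL_SECONDS:
        raise ValueError(f"{t_seconds} s is outside a 15-minute trial")
    return int(t_seconds // PHASE_SECONDS) + 1


def phase_bounds_in_samples(srate: float) -> list[tuple[int, int]]:
    """(start, end) sample offsets of the three phases, end exclusive,
    relative to the first sample of the trial."""
    step = round(PHASE_SECONDS * srate)
    return [(k * step, (k + 1) * step) for k in range(3)]


# Seven minutes into a trial, two musicians are playing.
print(musicians_playing(420.0))  # prints 2
```

Working in sample offsets rather than seconds lets the bounds be used directly to slice the columns of EEG.data once the trial's first sample is known.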

Instructions:

EXPERIMENT INFORMATION

The experimental methods were approved by the Institutional Review Board of the University of Houston and are in accordance with the Declaration of Helsinki. All participants provided written informed consent, including consent to the open-access online publication of information obtained during the experiments, such as data, images, audio, and video. Three healthy adult males (P1, P2, and P3) volunteered for this study. Musicians P2 and P3 had received formal musical instruction for 12 and 6 years, respectively, and P1 had received informal instruction for 6 years. At the time of the experiments, P1, P2, and P3 had 31, 38, and 26 years of experience performing music, respectively. The musicians performed jazz improvisation at a public event at the Student Center of the University of Houston while wearing Mobile Brain-Body Imaging technology. Musicians P1 and P2 have a jazz background, whereas P3 has a classical music education. Musician P1 played the drums, musician P2 played the saxophone, and musician P3 played the soprano saxophone.

DATA SUMMARY

The EEG data are in .MAT format and are archived in folders and subfolders, divided into two recording blocks for each of the three participants. Together, the two blocks contain the EEG and EOG signals of the three musicians over the three 15-minute improvisations (Trials). Data transmission was interrupted from 4:25 to 5:25 of Trial 3 due to a loss of connection; this interruption separates the end of Block 1 from the start of Block 2. All EEG files are named with the following naming convention:

Block(block #)_P(participant #).mat,

specifying the recording block number (1, 2) and the participant number (1, 2, 3).
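A small helper for this naming convention can make it easier to iterate over or validate the six EEG files. This is a hypothetical sketch: the names eeg_filename, parse_eeg_filename, and ALL_FILES are not part of the dataset, and the pattern follows the file names listed under Dataset Files (for example, Block1_P1.mat).

```python
import re

# Naming convention: Block<block #>_P<participant #>.mat
BLOCKS = (1, 2)
PARTICIPANTS = (1, 2, 3)
_PATTERN = re.compile(r"^Block(\d+)_P(\d+)\.mat$")


def eeg_filename(block: int, participant: int) -> str:
    """Build the file name for one recording block of one participant."""
    if block not in BLOCKS or participant not in PARTICIPANTS:
        raise ValueError(f"no file for block {block}, participant {participant}")
    return f"Block{block}_P{participant}.mat"


def parse_eeg_filename(name: str) -> tuple[int, int]:
    """Return (block, participant) parsed from a name such as 'Block2_P3.mat'."""
    match = _PATTERN.match(name)
    if match is None:
        raise ValueError(f"unexpected file name: {name!r}")
    return int(match.group(1)), int(match.group(2))


# The six EEG files: two blocks for each of the three participants.
ALL_FILES = [eeg_filename(b, p) for b in BLOCKS for p in PARTICIPANTS]
```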

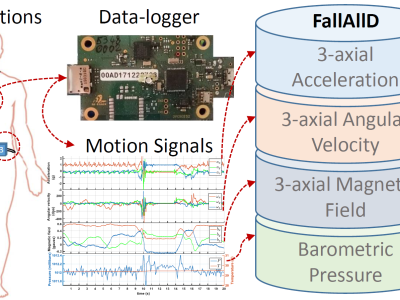

Each EEG file contains a structure (variable name: EEG) with the following fields:

- EEG.data: M×N matrix containing the raw EEG data. The rows are the M channels, corresponding to the channel locations in EEG.chanlocs, and the columns are the N sample points. EOG data are provided in the same matrix in four channels: rows 17 (right temple), 22 (left temple), 28 (above the right eye), and 32 (below the right eye).

- EEG.srate: The sampling rate of the system given in samples/second (Hz)

- EEG.chanlocs: M-element structure array in which each element contains the location information for one of the M channels

- EEG.gender: Gender of the participant (M: male; F: female)

- EEG.events: Annotations of the performances, such as the times (in samples) of the Trials and of the Eyes Open and Eyes Closed sections.
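As a minimal sketch of working with these fields in Python, the code below separates the four EOG rows from the EEG rows and converts event sample indices to seconds. All helper names are hypothetical. It assumes that the .mat files can be read by SciPy's scipy.io.loadmat (files saved in the MATLAB v7.3/HDF5 format would need h5py instead) and that sample indices in EEG.events are 1-based, as in MATLAB; neither detail is stated above. Because the documented EOG row numbers are 1-based, they are shifted by one for NumPy.

```python
import numpy as np

# Documented EOG rows in EEG.data (1-based, MATLAB style):
# 17 right temple, 22 left temple, 28 above and 32 below the right eye.
EOG_ROWS_1BASED = (17, 22, 28, 32)
EOG_ROWS = np.array(EOG_ROWS_1BASED) - 1  # 0-based indices for NumPy


def load_eeg(path: str):
    """Hypothetical loader for one BlockX_PY.mat file.

    Assumes a pre-v7.3 MAT file that SciPy can read; returns the EEG struct
    with attribute access (eeg.data, eeg.srate, eeg.chanlocs, eeg.events).
    """
    from scipy.io import loadmat  # only needed when reading real files
    mat = loadmat(path, squeeze_me=True, struct_as_record=False)
    return mat["EEG"]


def split_eeg_eog(data: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split an M x N (channels x samples) array into EEG rows and EOG rows."""
    is_eog = np.zeros(data.shape[0], dtype=bool)
    is_eog[EOG_ROWS] = True
    return data[~is_eog], data[is_eog]


def samples_to_seconds(samples, srate: float, one_based: bool = True) -> np.ndarray:
    """Convert sample indices (e.g. event times from EEG.events) to seconds."""
    idx = np.asarray(samples, dtype=float)
    if one_based:
        idx = idx - 1  # assumed: MATLAB sample 1 corresponds to t = 0 s
    return idx / srate
```

For example, `split_eeg_eog(load_eeg("Block1_P1.mat").data)` would return 60 EEG rows and 4 EOG rows, assuming 64 rows as stated in the abstract. The same row selection should be applied to EEG.chanlocs so that channel labels stay aligned with the data.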

Additional data includes:

- Audio: An MP3 file (ZOOM0001.MP3) contains a sound recording of the three 15-minute Trials.

- Videos: Two video recordings (Blaffer_Floor_1210.mp4 and Blaffer_Floor_1221.mp4) are included in the database.

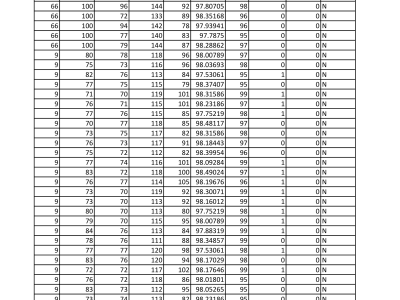

- Impedances: An Excel file (Impedances.xlsx) contains the impedance values of all EEG electrodes, measured before and after the recordings, for all participants.

- Additional Notes: An Excel file (Performance Notes.xlsx) contains notes made by three independent raters (music experts) about each of the performances. The table includes times that, together with the annotations in EEG.events, can be used to synchronize the EEG data with the sound recordings.
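Synchronizing the EEG with the audio amounts to mapping between EEG sample indices and audio time. The sketch below assumes a single shared event (for example, a Trial start) whose EEG sample index is known from EEG.events and whose time in the audio recording is known from Performance Notes.xlsx, and it assumes that the clocks of the two devices do not drift relative to each other. SyncAnchor and both functions are hypothetical names; the exact columns of Performance Notes.xlsx are not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SyncAnchor:
    """One event observed in both streams (hypothetical structure).

    eeg_sample: sample index of the event in EEG.data (e.g. a Trial start
                taken from EEG.events).
    audio_seconds: time of the same event in ZOOM0001.MP3, in seconds
                   (e.g. read from Performance Notes.xlsx).
    """
    eeg_sample: int
    audio_seconds: float


def eeg_to_audio_seconds(sample: int, anchor: SyncAnchor, srate: float) -> float:
    """Audio time of an EEG sample, assuming a constant offset (no clock drift)."""
    return anchor.audio_seconds + (sample - anchor.eeg_sample) / srate


def audio_seconds_to_eeg(t: float, anchor: SyncAnchor, srate: float) -> int:
    """Nearest EEG sample index for an audio time, under the same assumption."""
    return anchor.eeg_sample + round((t - anchor.audio_seconds) * srate)
```

Because the EEG is split into two blocks around the Trial 3 connection loss, an anchor taken from Block 1 should not be reused for Block 2. With two or more anchors per block, fitting a straight line instead of a single offset would also absorb a constant clock drift.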

ACKNOWLEDGMENT

The authors would like to thank Woody Witt, Guillermo “Memo” Reza, and Dan Gelok for their contributions to the study by providing the data.

CONFLICT OF INTEREST

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Dataset Files

- Impedances.xlsx (Size: 35.74 KB)

- Performance Notes.xlsx (Size: 21.11 KB)

- ZOOM0001.MP3 (Size: 29.67 MB)

- Blaffer_Floor_1210.mp4 (Size: 16.31 MB)

- Blaffer_Floor_1221.mp4 (Size: 103.51 MB)

- Block1_P1.mat (Size: 529.7 MB)

- Block1_P2.mat (Size: 473.07 MB)

- Block1_P3.mat (Size: 475 MB)

- Block2_P1.mat (Size: 366.32 MB)

- Block2_P2.mat (Size: 387.45 MB)

- Block2_P3.mat (Size: 362.18 MB)