GeoCaption-12K

- Citation Author(s):

- Submitted by:

- Xing Zi

- Last updated:

- DOI:

- 10.21227/6qf2-zd04

- Data Format:

- Research Article Link:

- Links:

22 views

22 views

- Categories:

- Keywords:

Abstract

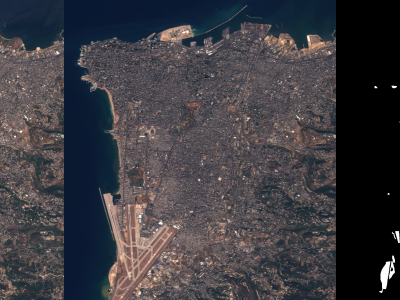

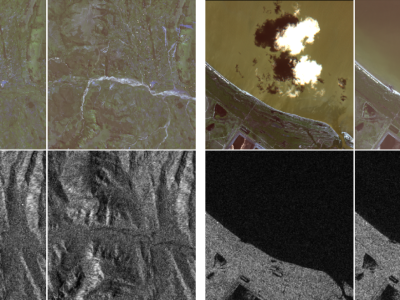

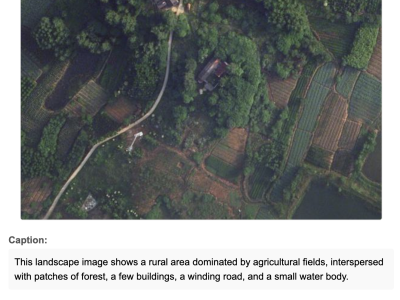

The scarcity of multimodal datasets in remote sensing, particularly those combining high-resolution imagery with descriptive textual annotations, limits advancements in context-aware analysis. To address this, we introduce a novel dataset comprising 12,473 aerial and satellite images sourced from established benchmarks (RSSCN7, DLRSD, iSAID, LoveDA, and WHU), enriched with automatically generated pseudo-captions and semantic tags. Using a two-step pipeline, we first construct structured prompts from polygon-based annotations and employ GPT-4O to generate detailed captions articulating spatial layouts and object relationships, resulting in captions averaging 181.94 words with a vocabulary of 3,961 unique words. These captions are filtered for noise reduction and paired with semantic tags extracted via named entity recognition and part-of-speech tagging, providing domain-specific cues (e.g., “building,” “river,” “runway”). This dataset, with its comprehensive visual-textual annotations, significantly reduces manual annotation costs while enabling advanced multimodal remote sensing tasks, such as scene understanding and spatially informed visual representation learning across diverse urban, rural, and natural environments. The dataset is publicly available to foster research in remote sensing and multimodal learning.

Instructions:

For Easy Download, Please use Google Drive:

https://drive.google.com/file/d/1T82VRN_5WIHOtZcPOHY3z9gW7P6XtqG1/view?usp=sharing