Deepblueberry: Quantification of Blueberries in the Wild Using Instance Segmentation

- Citation Author(s): Sebastian Gonzalez (Universidad Andres Bello), Claudia Arellano (Universidad Tecnologica de Chile)

- Submitted by: Juan Tapia

- DOI: 10.21227/40k9-hn32

Abstract

Deepblueberry: Quantification of Blueberries in the Wild Using Instance Segmentation Dataset.

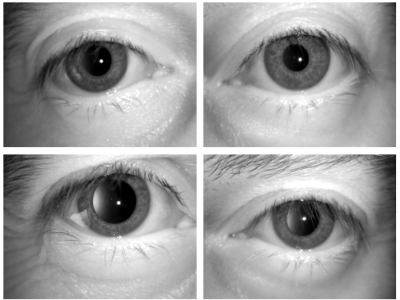

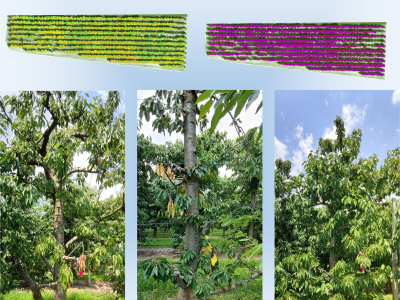

An accurate and reliable image-based quantification system for blueberries may be useful for automating harvest management, and may also serve as the basis for controlling robotic harvesting systems. Quantifying blueberries from images is challenging because of occlusions, differences in size, varying illumination conditions and the irregular number of blueberries that can be present in an image. This paper proposes quantifying blueberries in the wild, per image and per batch, using high-definition images captured with a mobile device. To quantify the number of berries per image, a network based on Mask R-CNN for object detection and instance segmentation was proposed. Several backbones, such as ResNet101, ResNet50 and MobileNetV1, were tested. The performance of the algorithm was evaluated using the Intersection over Union Error (IoU) and the competitive mean Average Precision (mAP) per image and per batch. The best detection result was obtained with the ResNet50 backbone, achieving an mIoU score of 0.595 and mAP scores of 0.759 and 0.724 for IoU thresholds of 0.5 and 0.7, respectively. For instance segmentation, the best results were an mIoU of 0.726 and mAP scores of 0.909 and 0.774 at thresholds of 0.5 and 0.7, respectively.
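For readers unfamiliar with the metric, the Intersection over Union between two axis-aligned boxes can be sketched as follows. This is the standard textbook definition, not code from the paper; the function name `box_iou` and the corner-based box layout `(x_min, y_min, x_max, y_max)` are assumptions made for illustration. In a typical mAP evaluation, a detection counts as correct at a given threshold (0.5 or 0.7 here) when its IoU with a ground-truth box reaches that threshold.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max).

    Hypothetical helper for illustration; not part of the dataset or paper.
    """
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b

    # Width and height of the overlap rectangle (zero if the boxes are disjoint).
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    # Union area = sum of both areas minus the overlap counted twice.
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    return inter / union if union > 0 else 0.0
```

For example, `box_iou((0, 0, 2, 2), (1, 1, 3, 3))` gives 1/7, since the boxes overlap in a 1 x 1 square and together cover 7 square units.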

Instructions:

Deep_BlueBerry_Dataset:

This dataset is only for research purposes.

The database folder contains both databases: one for the instance segmentation task and one for the object detection task. The instance segmentation database was annotated with the VGG Image Annotator (VIA) tool, which can be found here: http://www.robots.ox.ac.uk/~vgg/software/via/

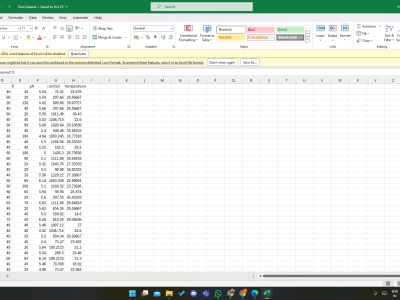

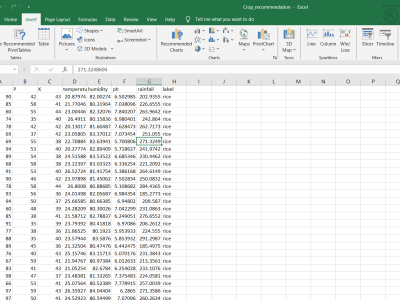
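The instance segmentation annotations could be read along the following lines. This is a minimal sketch that assumes the file follows the usual VIA JSON annotation export (one entry per image with a `filename` and a `regions` collection whose `shape_attributes` hold `all_points_x` and `all_points_y` for polygons). The exact VIA version and shape types used in this dataset are not stated here, so treat the layout as an assumption and check it against the actual file. The function name `via_polygons` is hypothetical.

```python
import json


def via_polygons(via_json):
    """Yield (filename, [(x, y), ...]) for each polygon region.

    Assumes a VIA-style annotation dictionary; not an official loader.
    """
    for entry in via_json.values():
        regions = entry.get("regions", [])
        # Older VIA exports store regions as a dict keyed by index,
        # newer ones as a list; handle both.
        if isinstance(regions, dict):
            regions = list(regions.values())
        for region in regions:
            shape = region["shape_attributes"]
            if shape.get("name") != "polygon":
                continue  # skip rectangles, circles, and other shapes
            points = list(zip(shape["all_points_x"], shape["all_points_y"]))
            yield entry["filename"], points


def load_via_polygons(json_path):
    """Read a VIA annotation file from disk and return all polygons as a list."""
    with open(json_path, "r", encoding="utf-8") as f:
        return list(via_polygons(json.load(f)))
```

Each polygon can then be rasterized into a binary mask, which is the form instance segmentation networks such as Mask R-CNN usually expect.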

The object detection database is composed as follows:

- Each folder contains images and the annotated ROIs in the form of coordinates in a .json file.

- The first folder contains very high-resolution images (mostly 3264 x 2448 pixels). The remaining folders contain high-resolution images (1920 x 1080 pixels), which are individual frames taken from video files. The video files are also included.

- The database file (berries.json) is a dictionary in which each key is an image filename and each value is a list of ROIs.

- Each list element is a dictionary with four keys: x, y, w, and h. x and y are the coordinates of the upper-left corner of the ROI, while w and h are the coordinates of the bottom-right corner (not a width and height, despite the names); together they define the bounding box. Example:

{
  "IMG_1004.jpg": [
    {
      "x": 1845,
      "y": 1496,
      "w": 2044,
      "h": 1704
    },
    {
      "x": 2225,
      "y": 498,
      "w": 2348,
      "h": 612
    },
    {
      "x": 2112,
      "y": 444,
      "w": 2234,
      "h": 566
    }
  ],
  "IMG_1117.jpg": [
    {
      "x": 493,
      "y": 987,
      "w": 640,
      "h": 1138
    },
    {
      "x": 629,
      "y": 997,
      "w": 781,
      "h": 1178
    }
  ]
}
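The format above can be read with a few lines of Python. The sketch below assumes the file is named berries.json as described, and that w and h hold the bottom-right corner coordinates, as the example values suggest. The helper names (`rois_from_dict`, `load_rois`, `count_per_image`, `box_size`) are hypothetical and not part of the dataset.

```python
import json


def rois_from_dict(raw):
    """Convert the raw dictionary into {filename: [(x_min, y_min, x_max, y_max), ...]}."""
    boxes = {}
    for filename, rois in raw.items():
        # (x, y) is the upper-left corner; (w, h) is the bottom-right corner,
        # not a width and height.
        boxes[filename] = [(r["x"], r["y"], r["w"], r["h"]) for r in rois]
    return boxes


def load_rois(json_path):
    """Read a berries.json-style file from disk and return its boxes."""
    with open(json_path, "r", encoding="utf-8") as f:
        return rois_from_dict(json.load(f))


def count_per_image(boxes):
    """Number of annotated berries in each image."""
    return {filename: len(rois) for filename, rois in boxes.items()}


def box_size(box):
    """Width and height in pixels of a corner-format box."""
    x_min, y_min, x_max, y_max = box
    return x_max - x_min, y_max - y_min
```

With the example above, `count_per_image` reports 3 berries for IMG_1004.jpg and 2 for IMG_1117.jpg, and the first ROI of IMG_1004.jpg is 199 x 208 pixels.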

Please send any further questions to jtapiafarias@ing.uchile.cl

Please remember to cite the paper correctly: "Deepblueberry: Quantification of Blueberries in the Wild Using Instance Segmentation"

bibtex:

@ARTICLE{8787818,
  author={S. {Gonzalez} and C. {Arellano} and J. E. {Tapia}},
  journal={IEEE Access},
  title={Deepblueberry: Quantification of Blueberries in the Wild Using Instance Segmentation},
  year={2019},
  volume={7},
  number={},
  pages={105776-105788},
  keywords={agriculture;convolutional neural nets;food products;image segmentation;mobile computing;mobile robots;object detection;instance segmentation;blueberries;harvest management;robotic harvesting systems;high definition images;image-based quantification system;images capture;mobile device;Mask R-CNN;object detection;intersection over union error;IoU;mean average precision;mAP metric;Image segmentation;Databases;Image color analysis;Object detection;Deep learning;Feature extraction;Measurement;Blueberries;deep learning;quantification;segmentation},
  doi={10.1109/ACCESS.2019.2933062},
  ISSN={},
  month={},
}

For research and academic use only.